Sander Greenland calls it “dichotomania,” I call it discrete thinking, and linguist Mark Liberman calls it “grouping-think” (link from Olaf Zimmermann).

All joking aside, this seems like an interesting question in cognitive psychology: why do people slip so easily into binary thinking, even when summarizing data that don’t show any clustering at all?

It’s a puzzle. I mean, sure, we can come up with explanations such as the idea that continuous thinking requires a greater cognitive load, but it’s not just that, right? Even when people have all the time in the world, they’ll often inappropriately dichotomize. I guess it’s related to essentialism (what isn’t, right?), but that just pushes the question one step backward.

As Liberman puts it, the key fallacies are:

1. Thinking of distributions as points;

2. Inventing convenient but unreal taxonomic categories;

3. Forming stereotypes, especially via confirmation bias.

P.S. In a follow-up post, Liberman links to slides from a talk he gave with several good examples of bad dichotomization in science reporting.

What I find is that binary thinking crops up particularly when people are predisposed toward one conclusion or another, especially on issues where they’re ideologically focused.

It’s not a uniform tendency. Rather, when we’re driven toward a particular conclusion, confirmation bias, essentialism, the fundamental attribution error, and motivated reasoning lock in and push us to reduce uncertainty to a more clearly discrete way of looking at the issue.

You mean, the discrete category of those with an ideological focus vs. those without?

I don’t have an answer (rather, I have too many to speculate meaningfully), but I’ll note a corollary. It has been said that marketers see the world as 2×2 matrices – marketing textbooks are full of such examples. Binary thinking squared.

For examples, see https://managementconsulted.com/2×2-matrix/ or https://www.christopherspenn.com/2021/06/find-new-marketing-strategies-with-2×2-matrix/. The second is very interesting as it cites the 2×2 matrix as a way to overcome binary thinking.

Dale said,

“The second is very interesting as it cites the 2×2 matrix as a way to overcome binary thinking.”

I can see how this has some degree of logic to it: a 2×2 matrix can be used to describe a quaternary model, whereas a binary model has just two “classifications” rather than four.

I’ve only met one person who practiced either IRL; else, generality by whichever means is not [and I do think this is already a long standing pursuit, the other way around]

One answer might be that people use binary thinking as a model to make sense of the world. The model is wrong (as all models are, according to George Box), but perhaps it is useful.

Another explanation may be that binary thinking is easier to communicate to laypeople. If you want to get press attention on NPR, it’s better to say there are two kinds of people in the world than to say there is a linear relationship between two variables.

It is rooted in Popper (actually “Popper₀”) vs. Lakatos on science vs. pseudoscience. Unlike in math or logic, the simple idea of falsificationism does not work in practice, because there is always a “sea of anomalies.”

Instead, different explanations are relatively more or less likely, as described by Bayes’ rule. These posteriors are, of course, continuous.

Now, a decision is discrete. But rational decision-making requires a cost-benefit analysis. In research, this step is usually ignored, and it is typically too uncertain to be useful anyway. Fisher talked about this in connection with “type II errors”; the concept has no use in science.

Q: What is the cost of “incorrectly failing to reject a false hypothesis”?

A: Somewhere between zero and positive infinity.
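The step from a continuous posterior to a discrete decision can be sketched as an expected-loss comparison. This is a minimal illustration, assuming a two-action choice (act or wait) with hypothetical losses; the function names and numbers are not from the comment.

```python
def best_action(p_true: float, loss_false_alarm: float, loss_miss: float) -> str:
    """Pick the action with the lower expected loss (ties go to "wait").

    p_true: posterior probability that the hypothesis is true (continuous).
    loss_false_alarm: hypothetical cost of acting when the hypothesis is false.
    loss_miss: hypothetical cost of not acting when the hypothesis is true.
    """
    expected_loss_act = (1 - p_true) * loss_false_alarm
    expected_loss_wait = p_true * loss_miss
    return "act" if expected_loss_act < expected_loss_wait else "wait"


def decision_threshold(loss_false_alarm: float, loss_miss: float) -> float:
    """Posterior probability above which acting has the lower expected loss.

    Solves (1 - p) * loss_false_alarm < p * loss_miss for p.
    """
    return loss_false_alarm / (loss_false_alarm + loss_miss)
```

With a false-alarm cost of 1 and a miss cost of 4, the threshold is 0.2, so a posterior of 0.25 leads to acting and a posterior of 0.1 does not. Because the threshold depends only on the ratio of the two losses, a miss cost anywhere "between zero and positive infinity" lets the cutoff fall anywhere between 1 and 0, which is why the discrete decision is so poorly pinned down in research settings.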

The idea of falsificationism is not meant to work in practice in the way you suggest. Falsificationism requires that scientific theories should in principle be falsifiable by empirical evidence, as well as specifying what evidence would be required to falsify them. Whether that evidence is or can be available is beside the point. And falsificationism is primarily geared toward distinguishing science from non-science, not toward adjudicating between scientific theories.

The “sea of anomalies” term is so vague it lacks meaningful conceptual content, and who decides what constitutes anomalies for a particular theory? The same goes for progressive vs. degenerative research programs. Who decides if a program is progressive or degenerative?

The notion that “different explanations are more or less relatively likely” leads to an unsustainable epistemological relativism.

Really, this deserves its own post by Andrew, IMO, but see, e.g.:

https://en.wikipedia.org/wiki/Duhem%E2%80%93Quine_thesis

Verification is impossible (affirming the consequent) and falsification is also impossible because there are always “auxiliary” assumptions that can be adjusted to protect the core theory.

If you find this controversial, that is very interesting, and I would love to discuss further.

Fine. I’ll do some pop evolutionary psychology. Hunters need to make decisions: left or right? stay or go? flee or fight? And even when there are more than two choices, the brain eliminates choices until it’s down to a single choice and each of the discarded choices is eliminated one at a time by comparison with everything else. Evolution favors those capable of reducing things to Yes or No and then picking the right answer.

Remember, if you think this answer is bullshit, you’re thinking dichotomously. (I think it’s 87.325% bullshit, but I’m highly evolved.)

Indeed, sometimes* making no decision (or taking too long) is worse than anything else. Rather than ~50/50, the hunter would get stuck at the fork until the prey was lost.

I’ve got some Magic 8-Balls in a shoebox that I fall back on. If I can’t rationally choose between options on an important decision, I may as well not pretend that’s what’s going on.

* but other times doing nothing is better than your other options

Jonathan said, “(I think it’s 87.325% bullshit, but I’m highly evolved.)”

Well, perhaps at this point, you’re just highly involved. ; ~)

Language is essentially discrete. Summarising a number of entities (or actions, or whatever) under one word is very basic. Also, in many situations a finite number of potential actions is on the table, and a decision rule specifying which action to take when produces a grouping. Real data are in fact always discrete rather than continuous, as are brain cells and neurons, and there’s the binary “all-or-none law” of nerve stimulation. “Continuous thinking” is hard and could be seen as somewhat unnatural.

I don’t want to defend “categorical thinking” too much, and there are good reasons to fight it in many places. Also, sometimes far more categories could make sense than people think of. Still, categorisation is quite essential in many places, hard if not impossible to avoid in others, and has certain practical advantages in still others.

I’m all in favour of thinking in categories occasionally or even often, and then questioning the categories and being aware that they don’t always do the situation justice. Categories can benefit from being confronted with “continuous thinking”, and “continuous thinking” will ultimately need some categorisation in order to be interpreted in language.

I think an interesting example of this is color perception and memory. Humans may be able to report specific color values during short-term memory tasks, but we are heavily biased toward certain focal colors (and biased away from the boundaries between these colors). So rather than holding a precise, continuously varying estimate of the color, we just remember the color category (e.g., light red, dark red, purple).

I’m speculating a bit now, but since short-term memory is very limited, it does seem easier (and more efficient and more useful) to remember a handful of color gists than one color very precisely. So discrete thinking may be beneficial here. You can read more about this in Bae, Olkkonen, Allred, and Flombaum (JEP Gen, 2015).

a few more folk explanations I just made up to add to the pile:

1) Compression: when you cleave a continuous distribution with whatever separating hyperplane into discrete binaries, discarding nuance or uncertainty, you reduce the cognitive load needed to recall the location of a particular sample. Instead of needing an arbitrary number of bits to recall a value to an arbitrary degree of precision, you need just one. This also streamlines decision-making considerably, since you can rely on cached decision-making heuristics to guide your actions instead of evaluating on the fly or caching a much larger set of advisable actions.

Even when there is no “natural” separation in state space, any error incurred by misclassifying locations near the boundary is more than made up for by the reduced overhead, since the world is large and misclassification costs grow nonlinearly toward the extremes (e.g., kids lumping humanity into bad scary strangers vs. good nice friends may miss some chances to eat tasty candy or pet cute puppies, but it goes a long way toward ensuring they don’t get kidnapped, or bamboozled trying to work out a person’s trustworthiness case by case).

2) Opposition: wars, battles, fights, conflicts, etc. are fought between two opposing sides, and conflicts among more than two agents are unstable, favoring alliances of convenience until the mutual foe is defeated and a new sundering can occur. “The enemy of my enemy is my friend”: if you find yourself in an oppositional context, tribal us-vs.-them, in-group vs. out-group thinking offers the greatest stability and chance of success, and if you don’t ally with one of your enemies, your other enemy will. Or you can temporarily retreat and let your enemies destroy each other while you mop up the pieces, which also binarizes the central conflict. So you should try to suss out lines to draw ASAP, before someone else draws them for you.

3) Feedback: even when there is no direct conflict along a spectrum, like prefers like. Humans associate with those similar to themselves, and that association has a within-group homogenizing effect that better signals the association. You do what your friends do, and your friends do what you do, both intentionally and unintentionally, merely through ongoing exposure. Behind the veil of ignorance, maybe some feature isn’t bimodal, but when, e.g., fans of X and fans of Y each interact more among themselves than with the other group, the strength of their respective fandoms grows. Anticipating this before it’s occurred is just being ahead of the curve.

4) Projection: lots of things in human nature come in twos. Just as our 10 fingers and 10 toes engender a preference for a base-10 counting system, our bilateral symmetry, sexual reproduction, the possible states of living vs. not living, large and easily visible heavenly bodies, etc. come in twos, and it’s tempting to project that outward even when inappropriate.
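The bit-counting in item 1 can be sketched as follows, assuming (the comment doesn't specify a scale) a value on the unit interval recalled to within a bin of width `eps`: that takes about log2(1/eps) bits, while a binarized label takes one. The hypothetical `binarize` helper, with an illustrative cut at 0.5, also shows the boundary cost described under item 1: near-identical values on either side of the cut get different labels.

```python
import math


def bits_for_precision(eps: float) -> int:
    """Bits needed to pin down a value in [0, 1) to within a bin of width eps."""
    n_bins = 1 / eps
    return math.ceil(math.log2(n_bins))


def binarize(x: float, cut: float = 0.5) -> int:
    """Collapse a continuous value to a single bit at a hypothetical cut point."""
    return int(x >= cut)
```

Recalling a value to within 0.001 needs 10 bits; the binary label needs 1, and 0.49 and 0.51 end up on opposite sides.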

That’s enough of that for now. Can probably invent lots more with a few more moments’ thought!

I’m pulling an old quote from Andrew from the first link in the post above:

“But I’d argue, with Eric Loken, that inappropriate discretization is not just a problem with statistical practice; it’s also a problem with how people think, that the idea of things being on or off is ‘actually the internal working model for a lot of otherwise smart scientists and researchers.’”

This fits with my understanding, but with a big caveat. Psychologists still live in a world where statistical significance is the coin of the realm, even if that is slowly slipping away. How much of the dichotomous thinking is driven by the need to show statistical significance? I have a family member who is a prominent psych researcher, and he and I have discussed causation on a few occasions. Once, I was explaining how I did causation at work, building logic trees and testing every potential cause. In most cases we were able to prove a single root cause – despite repeated assertions on this blog that this cannot happen – by developing strong evidence for one root cause and strong negative evidence for every other cause that anyone could think of. The psych researcher responded that my approach was simply not possible in psychology because of complexity, and anyway it is unnecessary because a p value below the threshold is extremely good evidence if the researcher was careful in their methodology.

In short, he was forced to think dichotomously to get his papers published, and so that is indeed how he thinks.

Wow! Fallacies about fallacies!

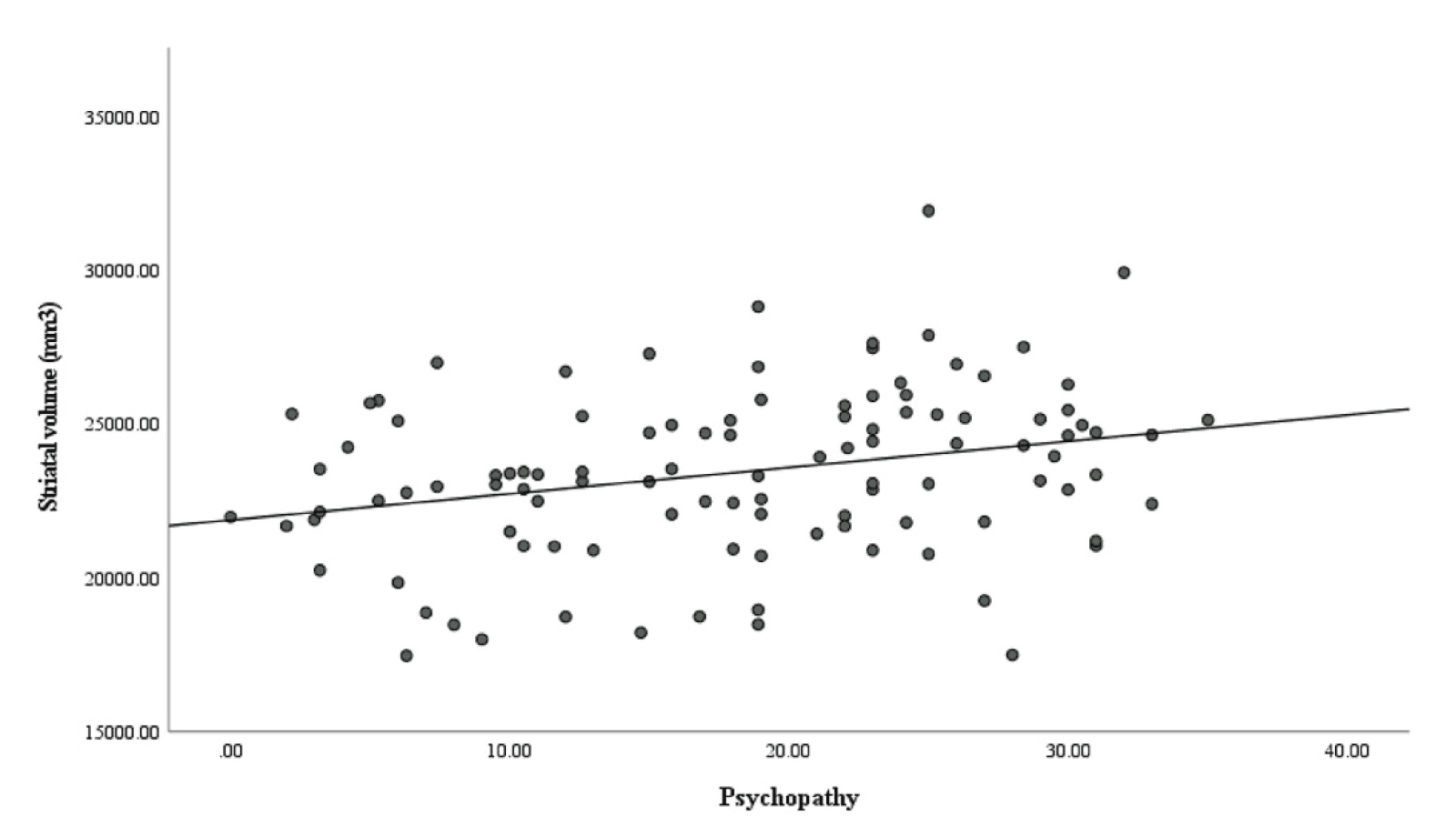

“each an amalgam of several measurements or evaluations, without any strong indication of clustering”

Excellent! This is correct.

“These fallacies are all natural and nearly inevitable aspects of human thought. ”

I have ***NO*** idea how this follows from anything in this paper or the larger world. It’s flat-out wrong.

Humans think continuously all the time! If we couldn’t think continuously, we sure as hell wouldn’t be able to drive! Our steering wheel turns continuously; our gas pedal moves continuously; we merge with, pass, and are passed by other traffic all moving at different speeds. We don’t jump from one lane to another or turn in 15-degree increments.

Beyond that it’s not clear if Liberman’s criticism of the researchers is totally justified:

Liberman’s post quotes the researchers directly about how they did their measurements, but it doesn’t quote their description of the results. First it quotes the press release (“This represents a clear biological distinction…” – but this is *not* a quote from the researchers), then Liberman provides his own summary: “But what the researchers found was two weakly-correlated variables”.

It’s not clear that the researchers ever said the words “clear biological distinction”, and when Choy is quoted in the press release, her statement is much less definitive: “it is important to consider that there can be differences in biology, in this case, the size of brain structures, between antisocial and non-antisocial individuals.” Without reading the paper I can’t assess the validity of this statement, but it’s certainly not claiming a “clear biological distinction”.

Maybe they make stronger claims in the paper, but the plot certainly doesn’t have any labels or boxes showing the “clear biological distinction” quoted in the press release, and if they do make stronger claims, why doesn’t Liberman quote them?

So overall I’m not sure what to make of it.

Maybe what Liberman uncovered here isn’t some natural tendency of humans to think discontinuously, but rather the other natural tendency of university PR departments to hype the research of their faculty.

Oh! Dale:

“This represents a clear biological distinction between psychopaths and non-psychopathic people.”

“These fallacies are all natural and nearly inevitable aspects of human thought. ”

How do these false statements relate to your view that companies in particular are more misleading than other organizations or individuals in society? Are these outside the ordinary for researchers or university PR departments?

Huh? I didn’t say either of those two quotes nor did I express the views you ascribe to me. Did I miss something here? I do disagree with you much of the time, but this seems way off base.

Go Chip’n’Dale – Rescue Rangers in action!

I feel like I’m watching someone progressively forget how to read while somehow retaining the ability to write.

Where exactly does Liberman criticize the researchers or the original research article? Liberman says “so that press release and its mass-media uptake exemplify a cluster of fallacies.”

Where does Liberman claim that humans cannot ever think continuously? Liberman makes no comment as to the frequency of the error. Imagine if someone were manually transcribing old handwritten data tables into an excel spreadsheet for 6 hours until they made one transposition error, switching 68 into 86. They say “these kinds of errors are inevitable.” Does the 6 hours of correct transcription disprove that transposition errors are inevitable?

Even in your own driving example, people taxonomize anyway when communicating. You don’t say “34 degrees left in 102 feet.” Directions go “sharp left,” “slight left,” “keep left at the fork.” At the tightest resolution, you might say “11 o’clock,” but you should never say “10:47.”

“I feel like I’m watching someone progressively forget how to read while somehow retaining the ability to write.”

Really! Whenever you criticize what I write, I feel like I’m watching someone with his face pressed so hard into the bark he doesn’t know he’s standing next to a tree.

If there’s nothing behind the bark, is it even a tree?

What’s left of an argument if all the supporting facts are completely made up?

What’s left of your criticism if you take out all the parts that don’t apply to what was actually written? That one is a real question; what point do you think you made here that actually responds to text that Liberman wrote? Because it’s very bizarre to imagine someone taking time out of their day to respond to something they are too uninterested in to read.

I’ve wondered about the dichotomization phenomenon for a long time. What strikes me is that it shows up not only in perception and belief, as in the OP, but also valuation. In this latter context it takes the form of the “good begets good” heuristic, according to which good actions have only good consequences and vice versa. There’s some research evidence to support this, and as a teacher I’ve seen it in my students countless times.

I suspect there is a relationship to cognitive dissonance avoidance at work. Making a factual claim about the world or a value claim about an actual or hypothetical action imparts an element of self-identification, and to the extent a more complex, differentiated view undermines that identity, it can cause psychic discomfort.

Then again, to avoid the dichotomization trap myself, I should admit there is a wide distribution of such tendencies across people and contexts. It’s just one factor among many.

I think there are two kinds of people in the world: those who dichotomize and those who don’t

Sorry, there is a third kind – those that aren’t sure (like me).

Yes, I have also heard that a few times.

And Dale Lehman’s rebuttal forms a kind of recursive proof (since DL refuses to dichotomize)

I suppose this is off-topic by definition (sorry), but in lawyering, we often see the opposite tendency—which goes all the way back to Socrates, yet I don’t know if there’s a term for it—which is to insist that boundary cases and line-drawing problems undercut the reality of common sense distinctions. I sometimes say there are people who would argue that the existence of beaches calls into question the concepts of land and sea, or turns land/sea into a “spectrum.” (No.) A legal example could be arguing that you can’t tell whether someone took an action unless you can say exactly when they started it.

Having lived in the vicinity of the Wattenmeer, I can definitely accept that land and sea are a spectrum. But I get your point.

The point is the gap between quantitative and qualitative thinking. Quantitative thinking is more feared; qualitative thinking is about impressions and is less prone to SSS (statistical stress syndrome).

For a simple tool that bridges the two, see a short video at https://gedeck.github.io/mistat-code-solutions/ModernStatistics/#chapter-1-analyzing-variability-descriptive-statistics

Andrew: You could’ve started closer to home with your sister, who’s an expert in how humans categorize the world. For everyone else, there’s a rich and thoughtful literature spanning the social and cognitive sciences, including cognitive anthropology, cognitive psychology, psycholinguistics, sociolinguistics, and philosophy, to name just a few of the disciplines with something to say on this topic.

As a computer scientist, I like simple examples. The equivalent of “hello, world!” for linguistic categorization is the concept of color. It’s uncontentious that languages contain a discrete set of nouns that are used to carve the continuous space of colors into categories. For example, high frequency English color words are “red” and “blue”. Then we have less frequent narrower categories of colors, like “burgundy” or “teal”. And then we have adjectival modifiers, like “blood red” and “sky blue”.

If I were to ask Andrew what his school colors were, he might just look at me quizzically, or he might say “blue” or he might say “sky blue”. A fan of Ivy League sports or a student at the institution might say “Columbia Blue,” but that’s only a valid description for a small subset of the population. A designer might say “Pantone 290c,” but a web developer might discretize to the closest integer point in RGB space and say “185, 217, 235.”

It’s like if you ask me where I am right now. I might say “Europe,” or “France,” or “Paris”, or I might say “on Canal St. Martin,” or “Quai de Jemmappes,” or even send an address, depending on the context. If I’m geocaching or calling for a rescue on a mountain, I might pull out a continuous scale and give you an (approximate) latitude and longitude.

The cool part about color terms is that different languages use different high frequency words to break the spectrum into discrete categories. That’s why machine translation is fundamentally intractable—it can take a lot of words in one language to describe what’s done with one word in another because the boundaries, despite being vague (think Wittgenstein’s family resemblance), are different. But before getting too carried away and claiming the Inuit have a hundred (or a thousand or a million) words for snow, check out Geoff Pullum’s popularization of Laura Martin’s work, “The great Eskimo vocabulary hoax” (couldn’t find a non-pirated, non-paywalled link).
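The web developer's move in the comment, snapping a color to "the closest integer point in RGB space," is itself a discretization step. A minimal sketch, assuming a color held as three continuous channel intensities in [0, 1] (a common convention, not something the comment specifies): scale to the 0 to 255 range and round. The function name is hypothetical, and the sample inputs are illustrative values chosen to land on the 185, 217, 235 triple from the comment, not an official definition of Columbia Blue.

```python
def to_rgb255(r: float, g: float, b: float) -> tuple[int, int, int]:
    """Map continuous channel intensities in [0, 1] to the nearest 8-bit RGB triple."""

    def quantize(c: float) -> int:
        c = min(max(c, 0.0), 1.0)  # clamp out-of-range values into [0, 1]
        return round(c * 255)      # nearest of the 256 integer levels (Python rounds ties to even)

    return (quantize(r), quantize(g), quantize(b))
```

For example, `to_rgb255(0.7255, 0.851, 0.9216)` gives `(185, 217, 235)`: a continuum of slightly different inputs all collapse onto that one integer point, just as many shades collapse onto the word "blue."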

Bob, your comments are related to generalizability. Given the context you provide, what is the impact on statistical methods such as classification tools? Some groups of terms share the same meaning (what we call meaning equivalence), while terms outside such a group that merely resemble them we labeled as having surface similarity: they might sound similar, but they mean something different. See https://link.springer.com/article/10.1007/s11192-021-03914-1 or https://papers.ssrn.com/sol3/Papers.cfm?abstract_id=3035070

I actually came here to post a similar comment (and *also* to suggest this would be a good question for his sister, haha!). So I’ll just post below yours.

Concept categorization is a *huge* part of human cognition and development. Think about how we teach kids about different concepts: “What’s this? That’s a raven! Yes, ravens are black.” (<- joke example for the Bayesian epistemologists in the room, I suppose). So the process of concept categorization facilitates learning and communication.

I suppose one could ask to what extent such categorization processes are intrinsic vs., say, an artifact of language and how we teach it, but if we are trained to classify and categorize concepts from a young age as a fundamental mode of learning, maybe it's not too surprising that we sometimes slip into that mode too easily or too early. :)

And I think one could make an argument that more intrinsic cognitive pressures to categorize things may exist: e.g., automatic chunking in long-term memory, episode segmentation, etc., which could bring their own pressures to over-categorize/dichotomize when not appropriate.

“http://www.lel.ed.ac.uk/~gpullum/EskimoHoax.pdf”

Sorry about the first one, but I normally send links with angle brackets, which don’t seem to work here.

My astigmatism is getting the better of me … I should wear glasses when I’m typing.

One problem that often arises is that decisions or actions have to be discrete, even if the underlying data is continuous. For instance, the standard of care for a physician may be that one prescribes medication if and only if systolic blood pressure exceeds 130 on some scale. Two patients measuring 129 and 131 get quite different treatments, although there is little difference in their health risks. Not really logical, but decisions have to be made.
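The hard cutoff described above can be written as a one-line rule. A minimal sketch, with the 130 threshold taken from the comment (illustrative, not a clinical guideline) and a hypothetical function name: a two-unit difference across the line flips the decision, while readings far apart on the same side are treated identically.

```python
def prescribe(systolic_bp: float, threshold: float = 130.0) -> bool:
    """Hard threshold rule: treat if and only if the reading exceeds the threshold."""
    return systolic_bp > threshold
```

Here `prescribe(129)` is `False` and `prescribe(131)` is `True`, yet `prescribe(131)` and `prescribe(180)` return the same answer, even though the second gap is far larger than the first.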

In the old days, statistics was all about discrete points and randomization over them: 2×2 tables, permutation tests, matching, factorial designs, the bootstrap... Observations were treated as discrete points, and it did not matter whether they came from a larger population or a fixed design.

But then the trend changed and statistics became more continuous: here I am not talking about continuous outcomes or continuous parameters, but a continuous, population-level view that replaced nonparametric, discrete randomization.

Both views work in practice. Arguably, the discrete view here requires less mental construction. Will the discrete view come back?

I think about this every time I watch news reporters covering a big storm about to hit the US coast: “Sustained winds are at 128mph. If they get to 130, it will be a Category 4 hurricane, and then serious damage becomes inevitable.”
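The reporter's line is a threshold lookup on a continuous wind speed. A minimal sketch, assuming the commonly published Saffir-Simpson lower bounds in mph (74, 96, 111, 130, and 157 for Categories 1 through 5); the function name is hypothetical, and it returns 0 below hurricane strength. Sustained winds of 128 and 130 mph land in different categories, although they differ by less than 2 percent.

```python
from bisect import bisect_right

# Lower bounds (mph) of Saffir-Simpson Categories 1-5 (assumed published values).
CATEGORY_LOWER_BOUNDS_MPH = [74, 96, 111, 130, 157]


def saffir_simpson_category(sustained_mph: float) -> int:
    """Return 1-5 for hurricane categories, or 0 if below hurricane strength."""
    # bisect_right counts how many lower bounds are <= the wind speed.
    return bisect_right(CATEGORY_LOWER_BOUNDS_MPH, sustained_mph)
```

`saffir_simpson_category(128)` returns 3 and `saffir_simpson_category(130)` returns 4; the category label jumps while the underlying physics barely moves.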