This is Jessica. Last week I walked through some results from a recently published paper by Kenny, Kuriwaki, McCartan, Rosenman, Simko, and Imai that describes how the new Census disclosure avoidance system (DAS, which uses differential privacy) may affect redistricting and make it harder to conform with legal standards like One Person, One Vote, and the Voting Rights Act. I promised a separate post on the last set of analyses that the paper reports, which look at how the new DAS affects one’s ability to predict voter race and ethnicity. Such predictions are relevant to voting rights cases where one must provide evidence that race is correlated with candidate choice.

There are a couple of ways of establishing a link between race and vote choice. One, which Kenny et al. examine, is to obtain outside information on the names and locations of individuals, then use published Census data to predict race. The authors look at how accurately you can impute race/ethnicity for individuals given voter registration data (available from third-party companies) combined with Census releases, using Bayesian Improved Surname Geocoding (BISG). First, a prior on the probability of race given location is derived from Census 2010 or from one of the Census 2010 demonstration datasets with different levels of noise injected: DAS-4.5, DAS-12.2, and DAS-19.61 (the suffix is the privacy-loss budget, epsilon; lower values mean more noise). Then posterior probabilities for each race can be calculated for the surnames in voter files by combining the geographic prior with the probability of race given surname. The latter is obtained by combining voter files from southern states where race is recorded (AL, FL, GA, LA, NC, SC) with Census data on the racial distribution of 151,671 surnames occurring at least 100 times in the 2000 Census, plus 12,500 common Latino surnames. Kenny et al. evaluate the results of applying this method to 5.8 million voters in a 2021 North Carolina voter file for which self-reported race is known, trying surname only, first name and surname, and first, middle, and surname.
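To make the Bayes’-rule step concrete, here is a minimal Python sketch of BISG-style updating. It assumes, as the standard formulation does, that each name is conditionally independent of location given race, and it works with P(name | race), which can be derived from the P(race | surname) tables by Bayes’ rule using national race totals. The function name and all numbers are hypothetical illustrations, not Kenny et al.’s code or data.

```python
def bisg_posterior(prior_race_given_geo, *name_likelihoods):
    """Return P(race | names, location) via Bayes' rule (hypothetical helper).

    prior_race_given_geo: dict race -> P(race | location), e.g. from a block table.
    name_likelihoods: one dict per name (surname, first, middle), race -> P(name | race).
    Assumes each name is conditionally independent of location and of the other
    names given race, so the likelihoods simply multiply.
    """
    unnormalized = {}
    for race, prior in prior_race_given_geo.items():
        score = prior
        for likelihood in name_likelihoods:
            score *= likelihood[race]
        unnormalized[race] = score
    total = sum(unnormalized.values())
    return {race: score / total for race, score in unnormalized.items()}


# Made-up numbers for one voter: a mostly White/Black block, but a surname
# that is far more common among Hispanic people.
block_prior = {"white": 0.60, "black": 0.30, "hispanic": 0.05, "asian": 0.03, "other": 0.02}
surname_lik = {"white": 1e-5, "black": 1e-5, "hispanic": 4e-4, "asian": 1e-6, "other": 1e-5}

posterior = bisg_posterior(block_prior, surname_lik)
print(max(posterior, key=posterior.get))  # hispanic
```

Adding a first or middle name is just another likelihood dict passed to the same function, which is how more name information can sharpen the posterior.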

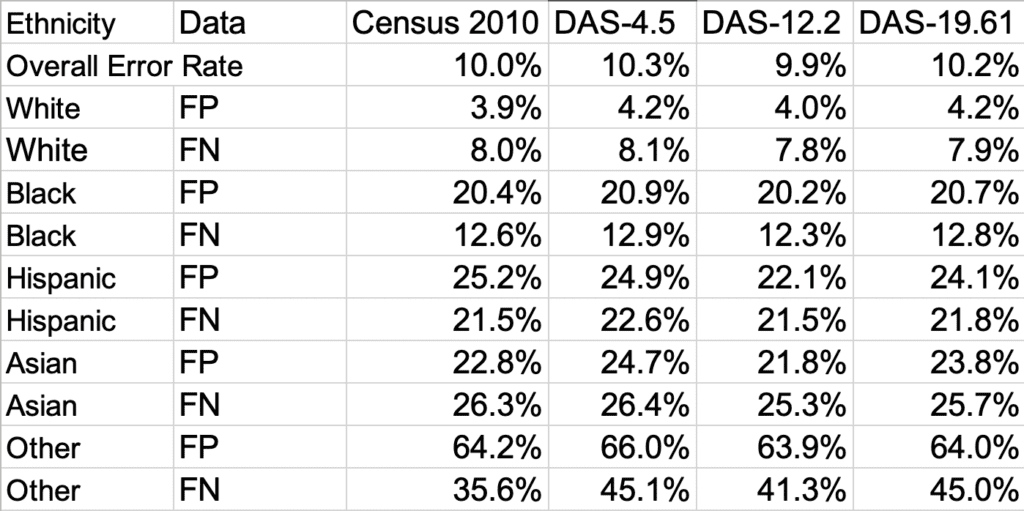

The results are impressive in aggregate. With the most name information, AUC is 90% to 95% for White, Black, Hispanic, and Asian voters and 70% for ‘other’ voters when the prior comes from Census 2010 data (treating membership in each group as a binary prediction). Classification error rates from assigning each person the race with the highest BISG probability tell a similar story, with average classification error of about 10% when all three names are used.
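One way to compute the two metrics described above, given each voter’s BISG probabilities and self-reported race: a one-vs-rest AUC (the chance that a randomly chosen member of a group gets a higher probability for that group than a randomly chosen non-member) and the argmax classification error. These are hypothetical helpers written for illustration, not the paper’s evaluation code; the pairwise loop is O(n²), so millions of voters would call for a rank-based or library implementation.

```python
def auc_one_vs_rest(posteriors, labels, group):
    """AUC for the binary question 'is this voter in `group`?', scored by P(group).

    Equals the share of (member, non-member) pairs in which the member gets the
    higher probability; ties count as half. O(n^2), so only for small examples.
    """
    members = [p[group] for p, y in zip(posteriors, labels) if y == group]
    others = [p[group] for p, y in zip(posteriors, labels) if y != group]
    wins = sum((m > o) + 0.5 * (m == o) for m in members for o in others)
    return wins / (len(members) * len(others))


def classification_error(posteriors, labels):
    """Share of voters whose highest-probability race differs from self-reported race."""
    wrong = sum(max(p, key=p.get) != y for p, y in zip(posteriors, labels))
    return wrong / len(labels)


# Hypothetical BISG posteriors and self-reported races for four voters.
posteriors = [
    {"white": 0.7, "black": 0.2, "hispanic": 0.1},
    {"white": 0.3, "black": 0.6, "hispanic": 0.1},
    {"white": 0.5, "black": 0.1, "hispanic": 0.4},
    {"white": 0.2, "black": 0.7, "hispanic": 0.1},
]
labels = ["white", "black", "hispanic", "white"]

print(auc_one_vs_rest(posteriors, labels, "white"))  # 0.5
print(classification_error(posteriors, labels))      # 0.5
```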

DAS-4.5 reduces accuracy slightly relative to Census 2010, most notably in predicting ‘Other’ as race. Curiously, DAS-12.2 leads to slightly higher accuracy than Census 2010 in terms of false positives and false negatives across most races. The authors speculate that this may be because the added noise happens to align with actual population shifts (the voter file they test on is more recent), or because it makes the data more reflective of the registered voter population. They also note that AUC is limited in its ability to capture changes to individual probabilities. This brings to mind the possibility that certain individuals (those assigned higher risk based on their combination of attributes) become harder to predict under the new DAS, while these differences wash out in aggregate. Hard to say.
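The limitation the authors mention follows from AUC depending only on how voters are ranked. In this sketch (hypothetical scores; `auc` and `shrink` are illustrative helpers, and the shrinkage is an arbitrary distortion, not a model of the DAS), every individual probability changes, yet AUC is identical because the transformation preserves the ordering.

```python
def auc(member_scores, non_member_scores):
    """Mann-Whitney AUC: share of (member, non-member) pairs ranked correctly; ties count 1/2."""
    pairs = [(m > n) + 0.5 * (m == n) for m in member_scores for n in non_member_scores]
    return sum(pairs) / len(pairs)


def shrink(p, weight=0.5):
    """An order-preserving distortion: pull each probability halfway toward 0.5."""
    return weight * p + (1 - weight) * 0.5


# Hypothetical P(Black) for self-identified Black voters and for everyone else.
members = [0.9, 0.7, 0.4]
non_members = [0.5, 0.2, 0.1]

members_shrunk = [shrink(p) for p in members]
non_members_shrunk = [shrink(p) for p in non_members]

before = auc(members, non_members)
after = auc(members_shrunk, non_members_shrunk)
print(before == after)  # True, even though every probability moved
```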

In contrast to the slight improvements in accuracy from DAS-12.2, DAS-19.61 doesn’t change things much relative to the 2010 Census. Here’s a table comparing all four cases.

So what to make of this? There’s a lot to unpack, but first let’s acknowledge a few caveats. One is that the Census 2010 data already had swapping applied. Swapping should have a higher probability of affecting people who were more unique in their blocks, and people from smaller groups are more likely to be unique. So the lower accuracy we see as we move from White to Black to Hispanic to Asian to Other, across all of the data sources, seems in line with the Census’s intentions in disclosure limitation.

It’s also worth noting that the analysis was restricted to North Carolina data, and the probability models were based on data from southern states as well. I don’t know enough about how naming varies with geography to predict how this might affect accuracy for the entire country, but it wouldn’t be surprising if accuracy goes down as the population being predicted becomes more diverse than the population the model is trained on. I also couldn’t tell from the paper whether, in learning the model, there was any attempt to exclude the 2010 records of the 5.8 million voters from the 2021 NC file that was used to test accuracy.

Returning to the high-level question Kenny et al. pose (whether ecological inference for voting rights cases will be threatened by the new DAS), the answer seems to be that accuracy could be slightly reduced, but only at a much lower epsilon than the Census has chosen for 2020. This conclusion is in line with that of another analysis by Cohen et al., who looked at ecological regression (the alternative way to establish the race/vote choice link) and also concluded that the new DAS doesn’t hamper the ability to establish these links.
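For readers unfamiliar with ecological regression, here is the textbook Goodman version as a minimal Python sketch; it is not necessarily the specification Cohen et al. used. It regresses a candidate’s precinct vote share on the precinct’s minority share and, assuming each group’s support is constant across precincts, reads group-level support off the fitted line. The precinct data are synthetic and noise-free, so the estimates recover the generating values (up to floating-point error). The minority shares are the inputs that would typically come from Census tables, which is where disclosure-avoidance noise would enter.

```python
def goodman_regression(minority_share, candidate_share):
    """Goodman's ecological regression: least-squares fit of y = a + b*x across precincts.

    Under the constancy assumption, a estimates the candidate's support among
    non-minority voters and a + b estimates support among minority voters.
    """
    n = len(minority_share)
    mean_x = sum(minority_share) / n
    mean_y = sum(candidate_share) / n
    sxx = sum((x - mean_x) ** 2 for x in minority_share)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(minority_share, candidate_share))
    b = sxy / sxx
    a = mean_y - b * mean_x
    return {"non_minority_support": a, "minority_support": a + b}


# Synthetic precincts: 90% support among minority voters, 30% among everyone else.
x = [0.1, 0.3, 0.5, 0.7, 0.9]
y = [0.3 * (1 - s) + 0.9 * s for s in x]

estimates = goodman_regression(x, y)
print(round(estimates["minority_support"], 3), round(estimates["non_minority_support"], 3))
```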

On the other hand, the final section of the paper takes a closer look at redistricting in one small New York school board case, where at-large elections had produced an all-white board despite 35% of the voting-eligible population being Black or Hispanic. A voting rights case using BISG led to the division of the district into seven geographic election districts. Using BISG to impute race for voters (as of 2020) in the affected area, Kenny et al. find that the predicted proportion of White voters per block tends to be higher with DAS-12.2, while predicted proportions of Black and Hispanic voters are lower with the new DAS than with Census 2010 data, especially where those groups are a majority. This could affect redistricting proposals, and, similar to their previous results, when they run simulations looking for majority-minority districts (MMDs, defined using 50% as a hard threshold), they find fewer MMDs with DAS-12.2 than with Census 2010. The undercount persists with DAS-19.61. The point they make here is that local governments may see unpredictable racial effects when applying BISG to data subject to the new DAS.
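Because MMDs are defined with a hard 50% threshold, small shifts in estimated minority share near the cutoff can change the count. A minimal illustration with made-up district counts (not from the paper); `count_mmds` is a hypothetical helper that treats a district as an MMD only when the minority share strictly exceeds 50%.

```python
def count_mmds(districts, threshold=0.5):
    """Count districts whose minority share strictly exceeds `threshold` (hypothetical helper)."""
    return sum(d["minority"] / d["total"] > threshold for d in districts)


# Made-up population estimates for three districts under one data source...
estimates_a = [
    {"minority": 510, "total": 1000},
    {"minority": 520, "total": 1000},
    {"minority": 400, "total": 1000},
]
# ...and the same districts if each minority estimate were 20 people lower.
estimates_b = [{"minority": d["minority"] - 20, "total": d["total"]} for d in estimates_a]

print(count_mmds(estimates_a), count_mmds(estimates_b))  # 2 0
```

A 2-percentage-point shift is enough to remove both MMDs here, while the third district, far from the cutoff, is unaffected.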

Does this reflect badly on the new Census DAS?

Putting aside the single school board case, when I first read Kenny et al.’s BISG results it seemed clear that the elephant in the room was the apparent contradiction between the perceived ‘goals’ of differential privacy and the new DAS’s relative failure to reduce aggregate accuracy in predicting race at the individual level.

I have to admit my initial, naive reaction upon learning how well BISG does was to wonder why the Bureau hasn’t acknowledged this kind of approach in its official communications, since on the surface BISG can look like a database reconstruction attack: it involves obtaining individual records with name and location from an external source, then using Census data to fill in the missing information. Compared to the 17% of individuals for whom the Census’s database reconstruction attack (which I described in detail in an earlier post) was able to accurately recover race and other attributes, and even the 58% you get if you use Census-quality name and address data, BISG seems more impressive.

That thinking, however, overlooks some key technicalities. Like most questions about the implications of the new DAS, it’s more complicated than it might at first appear. As Cynthia Dwork and others conveyed in a statement posted about the Kenny et al. paper before publication (when a version of it apparently concluded “the DAS data may not provide universal privacy protection”), BISG is not a threat to individual privacy, because it operates using aggregate data only. No individual information is compromised when the Census tables are used to infer the probability of race given geography. In a database reconstruction attack, the published tables alone are used to infer a system of dependencies with a limited solution space, whereas with BISG we’ve learned something from population-level relationships between name, race, and location and are simply applying it to new data. BISG is a qualitatively different type of beast than the database reconstruction attack the Census used to motivate the new DAS. (Seeing BISG described alongside the Census’s database reconstruction attack can help make this clear.)
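To see why reconstruction is a different kind of inference, here is a toy version that uses only published tables for a single hypothetical three-person block: brute-force every set of (age, race) records and keep the ones consistent with the published statistics. The tables and the 18 to 30 age range are invented for illustration; the Census’s actual experiment solved a far larger system of constraints across many tables. Note that no outside data about individuals is used, which is the contrast with BISG.

```python
from itertools import combinations_with_replacement
from statistics import mean, median

AGES = range(18, 31)  # hypothetical: the block contains adults aged 18 to 30
RACES = ("White", "Black")

# Invented published tables for one census block.
published = {
    "total": 3,
    "count_by_race": {"White": 2, "Black": 1},
    "median_age": 24,
    "mean_age": 24,
    "mean_age_white": 21,
}


def consistent(people):
    """True if a candidate set of (age, race) records reproduces every published table."""
    ages = [age for age, _ in people]
    white_ages = [age for age, race in people if race == "White"]
    counts = {race: sum(r == race for _, r in people) for race in RACES}
    return (
        counts == published["count_by_race"]  # guarantees two White records below
        and median(ages) == published["median_age"]
        and mean(ages) == published["mean_age"]
        and mean(white_ages) == published["mean_age_white"]
    )


candidates = [(age, race) for age in AGES for race in RACES]
solutions = [
    people
    for people in combinations_with_replacement(candidates, published["total"])
    if consistent(people)
]
print(solutions)  # exactly one block is consistent with the tables
```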

But … I have to admit I agree with Kenny et al. that this seems like a relevant comparison when trying to weigh the trade-off between privacy and accuracy. It may be beyond the technical definition of privacy loss (as defined under differential privacy), but I don’t think the authors are off base in questioning how the new DAS might affect BISG even outside of its relevance to voting rights cases. Formal privacy protection is a new concept for many, and the need to (at least in some ways) deter attackers from inferring race and other individual attributes has figured into the Census’s motivation of the new DAS. As a result, reading and thinking about Kenny et al.’s results, and the response from privacy experts, has me concerned.

My biggest worry is about how hard this kind of thing makes the PR problem the Census faces in getting buy-in to the new DAS. That we can control compromises to individual-level privacy with more sophisticated techniques (i.e., DP) is great. But how do we keep moving forward when for so many questions that a stakeholder might have about the new DAS, there’s a complicated answer that requires having to differentiate things that might seem, to non-privacy experts, a lot alike? I’m also thinking of Ruggles and Van Riper here, whose argument I didn’t find at all convincing, but apparently many other people did.

The one constant I see among those who support the switch to DP at the Census (a group I consider myself part of) is a belief in the value of scientific progress in privacy protection – differential privacy is superior on a technical level to the kinds of techniques that came before it. And with the technical improvements comes the chance to improve how we do inference from Census data. I want to see uncertainty from disclosure avoidance techniques accounted for in analysis, rather than ignored because the methods are opaque. I also badly want to see the government move away from heavy reliance on incredible and conventional certitude when it comes to the statistics it produces, because I think this type of collective ignorance supports other forms of obfuscation through data. Using differential privacy is a step toward that kind of future.

But it hasn’t been easy for many to see it this way, and I can understand why. For example, the Census has not released the noisy counts, despite requests from privacy experts and some data users that it do so, and it’s not obvious why. This makes it much harder to argue that we need differential privacy in the name of scientific progress, because post-processing introduces biases that can prevent proper accounting for the added noise in inference. Another obstacle is how easy it is, in trying to make sense of what’s going on with the new DAS, to get stuck in the details. So much of the back and forth between proponents and privacy researchers on the one hand and opponents of the new DAS on the other has been reactive rather than strategic. I haven’t perceived much focus on how to frame these issues so they are palatable to broader audiences (and in particular, how to frame them so that people don’t develop intuitions about what the new DAS does that then get violated when new results like the BISG results come out). danah boyd is an exception here, as she seems to have been working pretty tirelessly to draw broader attention to what’s going on (I’ve literally watched her give two plenaries that addressed this just in the last week).

Kenny et al.’s BISG results also make me think we have to be careful about implying that, through the new DAS, we are protecting people from attackers inferring sensitive attributes about them. There’s a difference between publishing statistics that make supposedly confidential information public (what a database reconstruction attack is concerned with) and an attacker inferring certain attributes from overall patterns. Yet it’s hard to expect someone to simultaneously believe that a) the fact that an attacker with names and addresses and access to Census 2010 could identify race and other attributes for 17% to 58% of the population is scary and requires a significant change in privacy protection, and b) the fact that an attacker with names and addresses and access to Census 2010 or the new DAS could predict race for an even larger portion of the population says nothing about how well the new DAS is protecting sensitive attributes. It may be true, but it’s not an argument I would hang my hat on.

Maybe readers have ideas on where the source of the disillusionment lies, how to make some of these distinctions less tedious to explain, or on the specific findings of Kenny et al.

PS: Thanks to Priyanka Nanayakkara and Abie Flaxman for reading a draft of this post.

I think this goes to the heart of the issue some skeptics have with DP, which is that it’s very hard to convey what, exactly, is being protected in exchange for all the very real disadvantages. What data about you are we worried about DB reconstruction attacks exposing? It’s

– Not your name, address, sex, or age (the attackers have this ex hypothesi)

– Not your race (BISG can do this with more accuracy than a database reconstruction attack)

– Not your household size or who lives with you (if they have your address they probably also have the address of the other people in your house)

– Not household ownership status (this is literally a matter of public record! yes, some people use shell companies but come on!)

I think that’s all the questions on the decennial census? What am I missing?

Like, imagine I live in a house with a roommate. I’m in charge of securing the front door and my roommate’s in charge of securing the back door. I take my job very seriously and my roommate doesn’t; he just leaves the back door wide open. In my Quarterly Front Door Security Audit, it is revealed that, despite all my precautions, attackers can make it through the front door. How? They have their friend stroll in through the back door, walk through the house, and unlock the front door from the inside.

What should my response to this be? Because the Census Bureau’s answer seems to be bricking up the front door.

I don’t disagree. For me the relevant point is that they are obligated to protect it whether or not it’s available elsewhere, so if you approach it from the purely technical standpoint, it makes sense. If you try to weigh the risk relative to risks involved with the wider data universe, then yeah, it’s puzzling. If you think about it in the context of the history of harmful uses of Census data, it can also be puzzling.

Yes, obligated to protect it but to what extent? Obviously bricking up the door (from the example above) also fulfills the obligation to protect against entrance, but at what cost?

I appreciate all that you’ve written on this–and maybe I’m just missing the thread–but at this point my perception of all of this is probably close to Jacob’s above.

> obligated to protect it whether or not it’s available elsewhere, so if you approach it from the purely technical standpoint, it makes sense

Disagree: if you assume there’s no technical benefit, there’s definitely a technical cost, so you don’t do it.

> is a belief in the value of scientific progress in privacy protection – differential privacy is superior on a technical level to the kinds of techniques that came before it.

> want to see uncertainty from disclosure avoidance techniques accounted for in analysis, rather than ignored because the methods are opaque.

Are the proposals to replace these existing disclosure avoidance techniques with differential privacy (instead of adding differential privacy on top)?

> makes the PR problem the Census faces in getting buy-in to the new DAS

Why is this a PR thing? Is this the sort of thing that filters through Congress?

On differential privacy making sense from a technical standpoint, I am speaking from a relatively narrow problem definition. *If* you accept the premises that 1) the Census is obligated to protect individual-level records, and 2) the modern definition of what it means to protect individual records is to limit what can be learned from database reconstruction attacks (which I think is how the average computer scientist would approach this, i.e., not necessarily questioning the premise but thinking about risk more as a theoretical/hypothetical thing), then differential privacy is the better technical solution compared with what came before (swapping), because it parameterizes the amount of random noise added to the data, so that you can (provably) limit the amount of information that a released statistic provides about any individual. This provides a well-defined way to represent the privacy-accuracy trade-off, and allows you to publish the details of the specific approach you use (noise distribution and privacy budget) so that someone analyzing the data can account for the added error when doing inference. With swapping, the old technique, the Census could not reveal details of how it chose whose attributes to swap, nor even what percentage of the data had been swapped. So as a data user you basically have to treat the data as-is.
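A minimal sketch of the “parameterized noise” idea using the Laplace mechanism, the textbook DP mechanism for counts. This is an assumption for illustration: it is not the noise distribution or code the Census uses. The point is that because the noise scale (sensitivity / ε) is public, an analyst can compute exactly how wide an interval must be to cover the true count 95% of the time; a smaller ε means a larger scale and a wider interval.

```python
import math
import random


def laplace_noise(scale, rng):
    """Draw Laplace(0, scale) noise as the difference of two exponential draws with mean `scale`."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def noisy_count(true_count, epsilon, rng, sensitivity=1.0):
    """Laplace mechanism: one person changes a count by at most `sensitivity`,
    so noise with scale sensitivity/epsilon gives epsilon-DP for this count."""
    return true_count + laplace_noise(sensitivity / epsilon, rng)


def interval_95(noisy_value, epsilon, sensitivity=1.0):
    """For Laplace(0, b), P(|noise| <= t) = 1 - exp(-t/b), so t = b * ln(20) covers 95%."""
    half_width = (sensitivity / epsilon) * math.log(20)
    return noisy_value - half_width, noisy_value + half_width


# Hypothetical check: how often does the interval contain the true count?
rng = random.Random(0)
true_count, epsilon = 100, 0.5
draws = [noisy_count(true_count, epsilon, rng) for _ in range(10_000)]
intervals = [interval_95(v, epsilon) for v in draws]
coverage = sum(lo <= true_count <= hi for lo, hi in intervals) / len(intervals)
print(coverage)  # close to 0.95
```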

>Are the proposals to replace these existing disclosure avoidance techniques with differential privacy (instead of adding differential privacy on top)?

Yes, no more swapping, just this new system (in its entirety called TopDown). But there are aspects of the new system that make it harder to account for the added noise cleanly in analysis than a standard differentially private approach would: namely, the post-processing the Census does after noising the data using DP (including adjusting the counts so that they sum up properly in various ways, making sure none are negative, etc.). How TopDown works is not a secret like the old system (the Census has released its code), but it’s much harder to separate out the expected contributions of the added noise in analysis than it would be if the system simply added a calibrated amount of noise and then released those counts without adjustment.
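To illustrate how post-processing can bias results, here is a sketch of just one step, clamping negative counts to zero, applied to Laplace-noised counts for a small block. Both the Laplace noise and the numbers are assumptions for illustration; TopDown’s actual post-processing (including making counts consistent across geographic levels) is more involved and is not modeled here.

```python
import random


def laplace_noise(scale, rng):
    """Draw Laplace(0, scale) noise as the difference of two exponential draws with mean `scale`."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


rng = random.Random(42)
true_count, epsilon, trials = 2, 0.5, 20_000  # hypothetical small block count

raw = [true_count + laplace_noise(1 / epsilon, rng) for _ in range(trials)]
clamped = [max(0.0, v) for v in raw]  # one post-processing step: no negative counts

raw_bias = sum(raw) / trials - true_count          # near 0: raw noise is unbiased
clamped_bias = sum(clamped) / trials - true_count  # positive: clamping only pushes up
print(round(raw_bias, 3), round(clamped_bias, 3))
```

For a true count t = 2 and noise scale b = 2, clamping raises the expected released count by 0.5 * b * exp(-t / b), about 0.37, and that bias is larger relative to the count the smaller the true count is. An analyst who only knows the noise distribution, and not the post-processed values’ distribution, would miss it.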

>Why is this a PR thing? Is this the sort of thing that filters through Congress?

At some point courts will have to decide whether standards like One Person, One Vote or the Voting Rights Act need to be modified now that the data contain intentionally added noise (some think it will go to the Supreme Court). Not sure about Congress, but many people will need some basic understanding of how the new approach is and isn’t different from what came before, so that they can decide whether it’s OK to continue using the data in the same ways (e.g., using the counts as if they were the true counts). More generally, even to get the information it needs from analysts about how they use the data (since everyone agrees that you should understand the analytical tasks when setting the privacy budget), the Census needs people to have some basic understanding of, and buy-in to, what it is doing. I think there have been failures even in that very first step of managing public and stakeholder perceptions, for example people having different ideas about what the premise should be (e.g., what sense of risk we are actually concerned about). So that’s what I meant by a PR issue rather than a technical issue.

Thanks, Jessica. This is a very helpful comment. I think I had missed that TopDown would replace swapping, and I do see how (in theory) this could be beneficial for the reasons you outlined.

Agreed, +1. Thank you for the very thorough answers!

Just possibly for surnames and more likely for first names, has anybody done a study on perceived discrimination as revealed by choice of name? Calling yourself (or naming your child) Ruairí Ó Brádaigh is a declaration of allegiance to a tradition that Peter Roger Casement Brady is not. An increase over time in the number of identifiably ethnic names among a community might suggest that they no longer fear discrimination.

Do you have a link to the statement that Dwork and others made prior to publication of this paper?

this might be it

https://differentialprivacy.org/inference-is-not-a-privacy-violation/

Yes, thanks, I had intended to link that in the post. Just added.

Yes, that’s the one. We revised parts of our working paper (https://arxiv.org/abs/2105.14197) after reading it.

Is it possible to define DAS the first time you use it in the article? I’m generally interested in the subject but don’t have the technical jargon.

Done. If you’re interested in the new DAS (TopDown approach), section 2.2 of this paper gives a good high level description: https://mggg.org/publications/DP.pdf