A few months ago I (Jessica) wrote about how the Census Bureau is applying differential privacy (DP) to 2020 results, and has been sued for it by Alabama. Prior to this, data swapping techniques were used. The (controversial) switch to using differential privacy was motivated by database reconstruction attacks that the Census simulated using published 2010 Census tables. I didn’t go into detail about the attacks in my last post, but since their adoption of DP remains controversial and a new paper critiques the value of the information that their database reconstruction experiment provides, it’s a good time to discuss them.

For their reconstruction experiment (see Appendix B here), the Census took nine tables from 2010 results, reporting 6.2 billion of a total 150 billion statistics in the 2010 Census. They used the tables to infer a system of equations for sex, age, race, Hispanic/Latino ethnicity, and Census block variables, then solved it using linear programming. Because the swapping method used in 2010 required the total and voting age populations to not vary at the block level, they were able to reconstruct over 300 million records for block location and voting age (18+).
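
To make the "system of equations" idea concrete, here is a toy sketch for a single three-person block. Everything in it is an assumption for illustration: the tables, the age groups, and the cell counts are invented, and it uses brute-force enumeration of integer solutions rather than the linear programming the Census used. The point is only that a handful of overlapping published tables can pin down the underlying records.

```python
from itertools import product

SEXES = ("F", "M")
AGE_GROUPS = ("0-17", "18-64", "65+")
CELLS = [(sex, age) for sex in SEXES for age in AGE_GROUPS]

# Hypothetical published tables for one three-person block (not real Census data).
PUBLISHED = {
    "total": 3,
    "by_sex": {"F": 2, "M": 1},
    "by_age": {"0-17": 1, "18-64": 1, "65+": 1},
    "male_18_plus": 1,
    "female_65_plus": 1,
}


def consistent(counts, published):
    """Check whether a candidate cell-count assignment reproduces every table."""
    if sum(counts.values()) != published["total"]:
        return False
    for sex, n in published["by_sex"].items():
        if sum(counts[(sex, age)] for age in AGE_GROUPS) != n:
            return False
    for age, n in published["by_age"].items():
        if sum(counts[(sex, age)] for sex in SEXES) != n:
            return False
    if counts[("M", "18-64")] + counts[("M", "65+")] != published["male_18_plus"]:
        return False
    return counts[("F", "65+")] == published["female_65_plus"]


def reconstruct(published):
    """Enumerate every non-negative integer solution to the table constraints."""
    solutions = []
    for combo in product(range(published["total"] + 1), repeat=len(CELLS)):
        counts = dict(zip(CELLS, combo))
        if consistent(counts, published):
            solutions.append(counts)
    return solutions


solutions = reconstruct(PUBLISHED)
# Expand the cell counts of the first solution back into individual records.
records = [cell for cell, n in solutions[0].items() for _ in range(n)]
print(len(solutions), records)
```

In this toy block the tables admit exactly one solution, so the three (sex, age group) records are fully determined even though no individual-level data was published. Real blocks have far more cells and constraints, which is why a solver is needed rather than enumeration.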

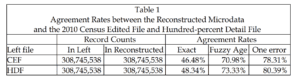

Next they used the data on race (63 categories), Hispanic/Latino origin, sex, and age (in years) from the 2010 tables to reconstruct individual-level records containing those variables. They calculated their accuracy using two internal (confidential) Census files: Census Edited File (CEF, the confidential data) and Hundred-percent Detail File (HDF, the confidential swapped individual-level data before tabulation). The table shows the results of the join, where Exact means exact match on all five variables (block, race, Hispanic/Latino origin, sex, age in years). Fuzzy age means age agreed within one year, and one error means one variable other than block (which they started with) was wrong (for age meaning off by more than 1 year).

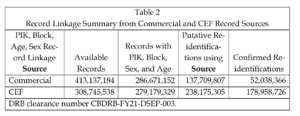

From here they simulated a re-identification attack using commercial data containing name, address, sex and birthdate that they purportedly acquired around the time of the 2010 Census. After converting the names and addresses in the commercial database to the corresponding Census key (PIK) and matching each address to a Census block, they did a form of greedy match consisting of two loops through the reconstructed data: on a first pass they took the first record in the commercial data that matched exactly on block, sex, and age, and on a second pass they tried to match the remaining unmatched records in the reconstructed data, taking the first exact match on block and sex with age matching +/-1 year. The successful matches from both passes are the putative re-identifications (not yet confirmed as correct), which link the reconstructed data on block, sex, age, race, and ethnicity to a name and address.
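
The two-pass greedy match described above can be sketched roughly as follows. This is my reading of the description, not the Census code: the record layout and field names are hypothetical, and the rule that each commercial record can be claimed only once is an assumption of the sketch.

```python
def greedy_match(reconstructed, commercial):
    """Return putative re-identifications as (reconstructed_idx, commercial_idx, pass_name)."""
    used = set()      # commercial records already claimed (assumption of this sketch)
    matched = set()   # reconstructed records that already have a match
    putative = []

    def first_match(rec, tolerance):
        # First unclaimed commercial record agreeing on block and sex,
        # with age within `tolerance` years.
        for j, com in enumerate(commercial):
            if j in used:
                continue
            if (com["block"] == rec["block"] and com["sex"] == rec["sex"]
                    and abs(com["age"] - rec["age"]) <= tolerance):
                return j
        return None

    # Pass 1: exact age. Pass 2: age within one year, unmatched records only.
    for pass_name, tolerance in (("exact", 0), ("fuzzy", 1)):
        for i, rec in enumerate(reconstructed):
            if i in matched:
                continue
            j = first_match(rec, tolerance)
            if j is not None:
                used.add(j)
                matched.add(i)
                putative.append((i, j, pass_name))
    return putative


# Made-up records for illustration only.
reconstructed = [
    {"block": "B1", "sex": "F", "age": 34, "race": "White", "hispanic": False},
    {"block": "B1", "sex": "M", "age": 51, "race": "Asian", "hispanic": False},
    {"block": "B2", "sex": "F", "age": 70, "race": "Black", "hispanic": True},
]
commercial = [
    {"name": "A. Example", "block": "B1", "sex": "F", "age": 34},
    {"name": "B. Example", "block": "B1", "sex": "M", "age": 52},
    {"name": "C. Example", "block": "B2", "sex": "M", "age": 70},
]
putative = greedy_match(reconstructed, commercial)
print(putative)
```

Each putative match attaches a name to a reconstructed race and ethnicity. Whether that attachment is correct is a separate question, which only someone holding the confidential files can check directly.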

This process identified 138 million (45% of the 2010 Census resident population of the U.S.) putative re-identifications, 52 million of which were confirmed to indeed have matches in the confidential Census data (17% of the 2010 Census resident population). These figures are suggested to be conservative because the commercial data used were obtainable at the time, and so if an attacker had access to some better quality data that the Census wasn’t able to purchase in 2010, they might do better. To get worst-case stats, they can pretend the attacker has the most accurate known information, namely the names and addresses from the confidential Census CEF file. Under this assumption, the reconstructed data would produce 238 million putative re-identifications and roughly 180 million confirmed re-identifications, which means 58% of the 2010 Census resident population.
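
One number the paragraph above leaves implicit is the confirmation rate, meaning the share of putative re-identifications that turned out to be correct. A quick calculation from the rounded figures quoted above (so the results are approximate):

```python
def confirmation_rate(confirmed_millions, putative_millions):
    """Share of putative re-identifications that were confirmed as correct."""
    return confirmed_millions / putative_millions


commercial_rate = confirmation_rate(52, 138)    # commercial data scenario
worst_case_rate = confirmation_rate(180, 238)   # attacker holds CEF names and addresses
print(f"commercial: {commercial_rate:.0%}, worst case: {worst_case_rate:.0%}")
```

So roughly 38% of the putative matches were correct in the commercial-data scenario, rising to roughly 76% in the worst case.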

Is this alarming? John Abowd, Chief Scientist at the Census who provided the official details on the reconstruction experiments in court, has called these results “conclusive, indisputable, and alarming.” A recent paper called “The Role of Chance in the Census Bureau Database Reconstruction Experiment,” published in Population Research and Policy Review by Ruggles and Van Riper, argues that they are not. The paper describes a simple simulation that does, in aggregate, approximately as well in matching rate (specifically, they are talking about the 46.5% exact matching rate calculated against CEF in the first table I shared above). The authors conclude that “The database reconstruction experiment therefore fails to demonstrate a credible threat to confidentiality”, and argue multiple times that what the Census has done is equivalent to a clinical trial without a control group. (In fact this is said five times; the writing style is a bit heavy-handed.)

Ruggles and Van Riper describe generating 10,000 simulated blocks and populating them with random draws from the 2010 single-year-of-age and sex distribution, accounting for block population size (at least I think that’s what’s meant by “the simulated blocks conformed to the population-weighted size distribution of blocks observed in the 2010 Census.”) They then randomly drew 10,000 new age-sex combinations and searched for each of them in each of the 10,000 simulated blocks. In 52.6% of cases, they found someone in the simulated block who exactly matched the random-age-sex combination.
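
Here is a minimal sketch of this kind of random-match simulation, to show why chance alone produces a substantial match rate and why that rate depends so heavily on block size. It is not Ruggles and Van Riper's code. As a loudly labeled simplification, it draws age and sex uniformly over 100 ages and 2 sexes rather than from the 2010 distribution, and it uses fixed block sizes of my choosing rather than the observed size distribution.

```python
import random

SEXES = ("F", "M")
N_AGES = 100  # ages 0..99, drawn uniformly (assumption; the paper used the 2010 distribution)


def draw_person(rng):
    """Draw one random (sex, age) combination."""
    return (rng.choice(SEXES), rng.randrange(N_AGES))


def simulate_match_rate(block_size, n_blocks=2000, n_probes=20, seed=0):
    """Fraction of random (sex, age) probes that hit someone in a random block."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_blocks):
        block = {draw_person(rng) for _ in range(block_size)}
        hits += sum(draw_person(rng) in block for _ in range(n_probes))
    return hits / (n_blocks * n_probes)


def analytic_match_rate(block_size, n_cells=len(SEXES) * N_AGES):
    """Closed form under the uniform assumption: 1 - (1 - 1/cells)^size."""
    return 1 - (1 - 1 / n_cells) ** block_size


for size in (5, 50, 200):
    print(size, round(simulate_match_rate(size), 3), round(analytic_match_rate(size), 3))
```

Under these assumptions a random guess almost never matches anyone in a five-person block but matches someone in most 200-person blocks, so an aggregate match rate mostly reflects how many people live in large blocks.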

At this point I have to stop and remark that despite how “simple” this procedure is, even writing the above description was annoying. The Ruggles and Van Riper paper is sloppily worded to the point where it’s hard to say what they did (though they do provide code, and anyone with the inclination to look at it should feel free to help fill in the details, like what 2010 tables exactly they were using). Even to write the high level description above, I had to search around online to disambiguate the matching and ultimately found a description on Ruggles’ website that clarifies they were searching for each of the new 10,000 random age sex combinations in each of the 10,000 simulated blocks, which is not what the paper says. Argh. The authors did at least confirm that in terms of “population uniques” (individuals who are unique in their combination of Census block, sex, and age in years) their simulated population was similar to the actual uniques in 2010 (reported to be 44%).

They then try assigning everyone on each simulated block the most frequent race and ethnicity in the block according to 2012 Census data, and find that race and ethnicity will be correct in 77.8% of cases (again, the language in the paper is a little loose but I assume that means match on both). They use “that method to adjust the random age-sex combinations” and find that 40.9% of cases would be expected to match on all four characteristics to a respondent on the same block, hence not so far off from that 46.5% reported by the Census.
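
The paper does not spell out the adjustment, but the reported figures are consistent with simply multiplying the two rates, that is, treating the age-sex match and the race-ethnicity match as independent. That is my inference from the numbers, not something the authors state:

```python
age_sex_match = 0.526         # random age-sex combination found in the block
race_ethnicity_match = 0.778  # modal race/ethnicity of the block is correct

# Product under an independence assumption (my inference, not the authors' stated method).
combined = age_sex_match * race_ethnicity_match
print(f"{combined:.1%}")
```

The product comes out at about 40.9%, the same as the figure reported in the paper.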

So, is this alarming? Before getting into that, perhaps I should mention that I personally welcome all of the “attacks” on database reconstruction attacks, because it’s the database reconstruction attack theorem and these experiments that are leading to big decisions like the Census deciding DP is the future for privacy protection. We do need to question all the assumptions made in motivating applications of DP, as it is certainly complex and unintuitive in various ways (my last post aired what I see as some of the major challenges). In other words, I see it as very important to understand the reasons we should be wary.

However, this paper does not in my opinion provide a good one, for several reasons. Sloppiness of explication aside, the biggest is that there is a difference in the results of the Bureau’s reconstruction attacks, and it’s directly related to the Census’ concerns about using the old data swapping technique (which required block totals to be invariant).

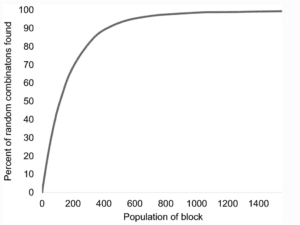

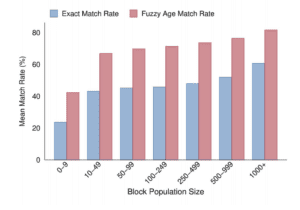

Here are two figures, one from Abowd’s Declaration and one from Ruggles and Van Riper. Abowd’s reaction to Ruggles and Van Riper’s analysis points out (as Ruggles and Van Riper do in their paper) that the Census reconstruction attack does considerably better on reconstructing records in low population census blocks. (Normally, I might ask why Ruggles and Van Riper’s figure omits any axis ticks, as this really doesn’t aid in comparison, but that’s a question for another day.) In text they do acknowledge that their random simulation guessed age and sex correctly in only 2.6% of cases in blocks with fewer than ten people, while the Census’ re-identification rate was just over 20%, a pretty big difference.

Abowd also points out that while 2010 swapping focused primarily on the small blocks, “for the entire 2010 Census a full 57% of the persons are population uniques on the basis of block, sex, age (in years), race (OMB 63 categories), and ethnicity. Furthermore, 44% are population uniques on block, age and sex.” So differential privacy is a way to attempt to simultaneously address both.

So is it all worth worrying about? On some level you could say that’s a religious question. I personally don’t worry about my data leaking from the Census, but some people do, and it’s worth noting that the Census is mandated by law to not “disclose or publish any private information that identifies an individual or business such, including names, addresses (including GPS coordinates), Social Security Numbers, and telephone numbers.” There’s some room for interpretation here, and the database reconstruction theorem provides a perspective. My own conclusion is that when you start thinking about protecting privacy and you want to do so with maximum guarantees, you end up somewhere close to differential privacy. There’s a lot of back and forth in the declarations and other proceeding descriptions I read from the trial that imply that there were some misunderstandings on Ruggles’ part about what aspects of the Bureau’s interpretation of compromised privacy have changed versus stayed consistent; there’s too much nuance to summarize concisely here.

My problems with the Ruggles and Van Riper paper aside, there are important questions one might ask of the actual privacy budget used by the Census, including how exactly accuracy and risk trade off across different subsets of the population with respect to common analytical applications of Census data. I don’t know all of what’s been discussed among privacy circles here, but I’ve heard that the Census 2020 epsilon is somewhere around 17 (looks like there are details here). Priyanka Nanayakkara, who provided comments as I was writing this post, tells me that it was initially much lower (around 4) but feedback from stakeholders about accuracy requirements led to the current relatively high level. I also don’t know enough about the post-processing that comprises part of their TopDown Algorithm, but it seems Cynthia Dwork, DP creator, has commented that she doesn’t really like some of those steps.
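
For a sense of what those epsilon values mean: under the standard definition of ε-differential privacy, adding or removing one person's record can change the probability of any output by at most a factor of e^ε. The sketch below just evaluates that bound. I'm treating the reported figures as a pure ε for illustration, which may not match the Bureau's actual privacy accounting.

```python
import math


def max_probability_ratio(epsilon):
    """Worst-case multiplicative bound e^epsilon from the epsilon-DP definition."""
    return math.exp(epsilon)


for eps in (1, 4, 17):
    print(f"epsilon = {eps:>2}: output probabilities can differ by up to "
          f"{max_probability_ratio(eps):,.1f}x")
```

Because the bound is exponential, moving from 4 to 17 does not weaken the worst-case guarantee by a factor of about four; it weakens it by a factor of e^13, which is in the hundreds of thousands.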

There is also a valid question of how the external attacker would verify that they got the re-identifications correct (on Twitter, Ruggles states that the Census Bureau “has reluctantly acknowledged” that “an outside intruder would have no means of determining if any particular inference was true”). In his declaration, Abowd acknowledges that an attacker would “have to do extra field work to estimate the confirmation rate—the percentage of putative re-identifications that are correct. An external attacker might estimate the confirmation rate by contacting a sample of the putative re-identifications to confirm the name and address. An external attacker might also perform more sophisticated verification using multiple source files to select the name and address most consistent with all source files and the reconstructed microdata.” Assuming the worst about the attacker’s capabilities is a perspective that aligns well with a mindset that seems common in privacy/security research. At any rate, Ruggles’ assertion that the Census admitted there is no way to verify doesn’t seem quite right.

Finally, I just don’t get the reasoning in Ruggles and Van Riper’s clinical trial analogy. From the abstract: “To extend the metaphor of the clinical trial, the treatment and the placebo produced similar outcomes. The database reconstruction experiment therefore fails to demonstrate a credible threat to confidentiality.” It’s as though Ruggles and Van Riper want to be comparing results of a reconstruction attack made on differentially private versions of the same 2010 Census tables to non-differentially private versions, and finding that there isn’t a big difference. But that’s not what their paper is about, and I haven’t seen anyone attempt that. Priyanka had the same reaction and informs me that the Census has released DP-noised 2010 Census “demonstration data” corresponding to different levels of privacy preservation however, so it seems within the realm of possibility. Bottom line is that showing that a more random database reconstruction technique matches fairly well in aggregate does not invalidate the fact that the Census reconstructed 17% of the population’s records.

P.S. I read through a lot of relevant parts of Abowd’s declarations in writing this (thankfully his descriptions of what the Census did were quite clear). Overall, some of it is pretty juicy! Ruggles apparently testified in court, so there are pages of Abowd directly addressing Ruggles’ criticisms, which appear to be based on the same simulated data reconstruction attack he and Van Riper report in the recent paper. At one point Abowd points out that Ruggles has confused the implementation of DP that the Census uses (their TopDown Algorithm) with DP itself. (Differential privacy is not an algorithm; it’s a mathematical definition of privacy that some algorithms satisfy.) I can sympathize: I recall making this mistake at least once when I first encountered DP. What I can’t quite imagine is getting to the point where I’m making this mistake while publicly taking on the Census Bureau.

P.P.S. In response to Ruggles’ response to my post I crossed out part of my P.S. above. I can’t easily verify whether what Abowd said about the misinterpretation is true, and it’s irrelevant to my main points anyway. So I guess now my title is ironic!

If we’re gonna talk about graphs, let me complain about the graph on the right above. It would be so much cleaner as a line plot: a blue line for the exact match rate, a red line for the fuzzy match rate, and just label the lines directly. Then they can have a directly readable x-axis as well as direct comparisons of the curves.

It makes me soooo annoyed when people do this bar plot, given that it’s dominated by the line plot in all respects.

Also, once you think about the line plot, you can think about improvements, such as having small multiples, one for each region of the country.

Fair enough. Though in this particular discussion, there is a lot of focus on the blocks on the small end, so the figure on the left was more annoying to me.

I think much of the discussion around differential privacy and the Census has avoided discussing the concrete harms that the Bureau is trying to avoid. Their reconstruction attack requires knowing a person’s age, sex, and address, in order to ‘leak’/re-identify their race/ethnicity. Their experiment shows that this is possible at most 58% of the time (though you don’t know when you are right or wrong, unless you are the Bureau and can check against the underlying data).

But as noted in the post, you can be right 78% of the time just by guessing the most common race/ethnicity. You can improve this rate to around 90% by doing some basic Bayes with people’s first and last names (which are distributed differently by race/ethnicity). Now, the types of people who are re-identified by this statistical technique are going to be different than the ones re-identified by the reconstruction attack. So there needs to be a discussion about the privacy risk borne by different groups. But ultimately all of this is about protecting people’s race/ethnicity from being identified. Of course, in many states this information is already public as part of voter files.

I agree that the reconstruction experiment needs to be done on the new DP Census data, especially given the very high epsilon (it’s on a logarithmic scale & is generally recommended to keep it below 1.0). But only the Bureau can do this, since you need the CEF to validate. And they have chosen not to share any information publicly about whether they have done such an experiment and what the results were.

So we don’t know if their new TopDown/DAS algorithm actually protects privacy in a meaningful way. This is especially concerning given the accuracy problems their algorithm (and especially its post-processing component) causes, as our team and others have noted (https://arxiv.org/abs/2105.14197).

Ultimately the use & implementation of DP in the Census is a public policy decision that requires much better information about the practical harms & benefits of every point along the privacy-accuracy spectrum. It was up to the Bureau to provide this information, and they did not. What we’re left doing is writing papers like these, which at best can provide only a limited examination of these tradeoffs and harms.

Yep, these are great points. I for one would love to know how Abowd honestly appraises the use of differential privacy now that all is said and done (and the epsilon for the persons file is at 17).

Cory basically nails what we know about this so far – database reconstruction works *worse* than other methods that are *still possible*.

As a working social scientist, I can’t publicly state my view on what Abowd is really going for here, so I will do it under the partial anonymity of this username: this is a power grab. DP will make census data releases useless for researchers, and thus concentrate publications and status among those with access to RDCs or connections to the census itself like Abowd. Some prominent researchers have already basically admitted as much, saying things like “it’s no big deal because all the top departments have RDCs.”

I do not actually work with census data at all, so I don’t have a dog in that fight – but I am furious that the census bureau is materially changing legislative apportionments as a result of this effort. Because of the zero lower bound on populations, DP will skew representation in the House of Representatives and state legislatures toward rural areas, exacerbating the already awful imbalance of power in favor of reactionary whites. This is unacceptable.

I respond to Hullman’s post here:

https://users.pop.umn.edu/~ruggles/Hullman_Response.html

Steven:

Thanks! It’s good to have this sort of dialogue.

Agreed with Andrew on the value of dialogue – thanks for responding.

Do you now acknowledge the need for a control group when conducting a scientific experiment?

Hi Steven,

I am an experimentalist, so yes, of course. What I’m not convinced of is that the comparison you did, between aggregate match rate from a random simulator plus heuristics to aggregate match rate of the Census db reconstruction attack, is the appropriate control for the claims you make in the paper. I do however think it’s broadly useful to know the match rate you can obtain with a process like the one you present, and I read your paper with interest for this reason. Combined with further heuristics like those suggested by McCartan’s comment above, it is surprising how well one could do, and it does pose questions about how we should evaluate database reconstruction attacks. But from what I’ve seen of the Census’ communication around their decision, their concerns about database reconstruction attacks seem only tangentially addressed by knowing the aggregate match rate from a random simulation. I would welcome further analysis along the lines of who is affected by each type of reconstruction approach, as Cory McCartan seems to be suggesting above.

I say all this from the standpoint of someone who was asked to look at your paper and comment on it because, of the bloggers here, I’ve written about the topic before and have a little research experience with it. However, I would say I have no real stake in the game: I don’t depend on Census data in my work, nor do I feel a strong need to see differential privacy succeed in the world. Maybe, as McCartan implies above, the lack of Census cooperation with researchers to help gauge whether differential privacy is helping against database reconstruction attacks puts researchers who depend on Census data between a rock and a hard place – obviously affected by the choice but unable to evaluate it directly. And so, to an outsider like myself, some of the arguments seem indirect and confusing. At any rate, I acknowledge that my lack of stakes in this debate may make it hard for me to see it the way researchers like yourself do, and appreciate the chance to learn more from those on both sides who are more directly involved in the discussion.