Paul, Jonah, and Aki write:

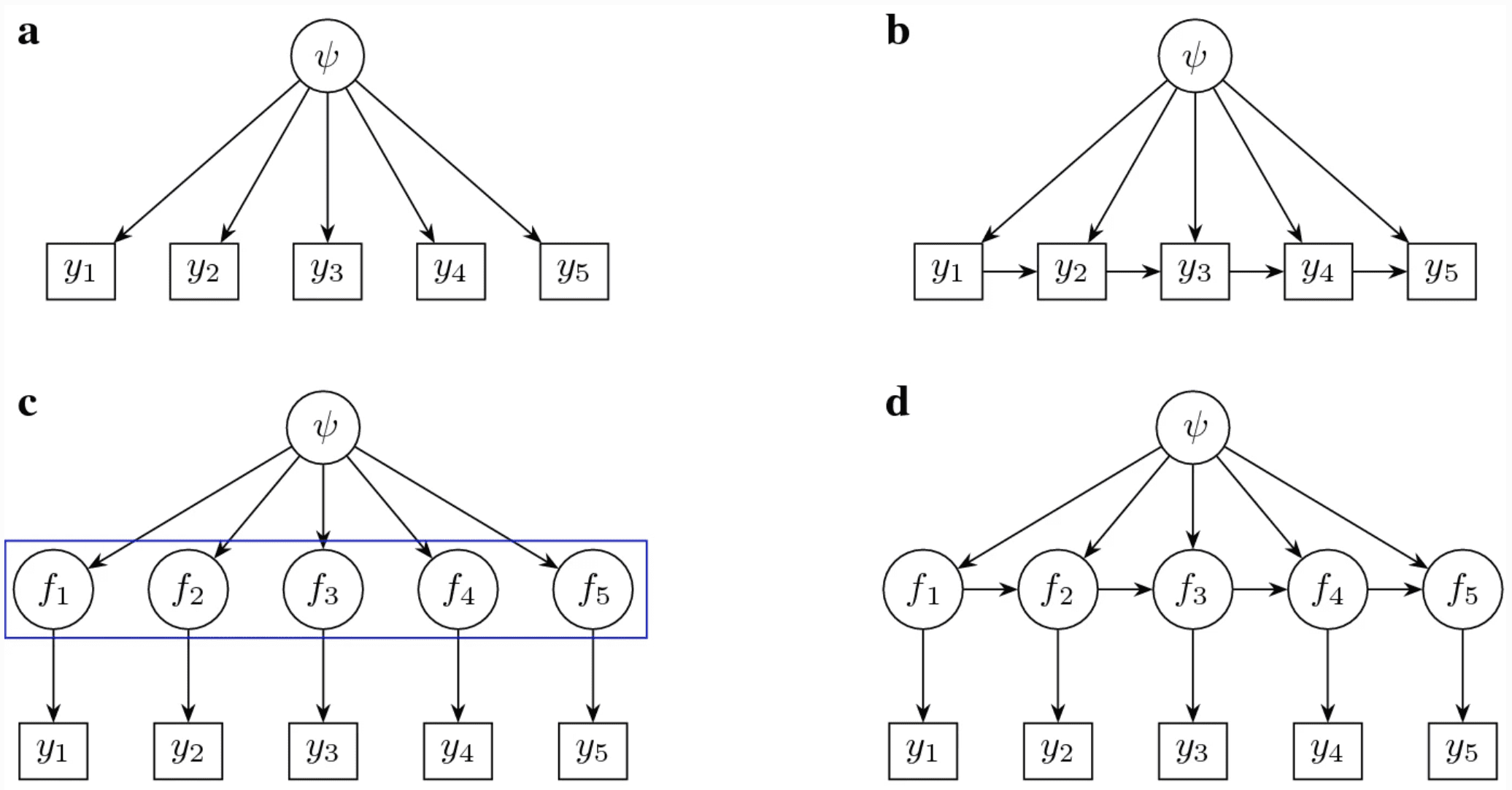

Cross-validation can be used to measure a model’s predictive accuracy for the purpose of model comparison, averaging, or selection. Standard leave-one-out cross-validation (LOO-CV) requires that the observation model can be factorized into simple terms, but a lot of important models in temporal and spatial statistics do not have this property or are inefficient or unstable when forced into a factorized form. We derive how to efficiently compute and validate both exact and approximate LOO-CV for any Bayesian non-factorized model with a multivariate normal or Student-t distribution on the outcome values. We demonstrate the method using lagged simultaneously autoregressive (SAR) models as a case study.
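To make the "non-factorized" case concrete, here is a minimal sketch of the standard Gaussian conditioning identity that gives every leave-one-out predictive density from a single matrix inverse. It assumes a multivariate normal outcome with the mean `mu` and covariance `C` held fixed at one set of parameter values; averaging over posterior draws, which a full Bayesian LOO-CV needs, is not shown. The function name and the toy AR(1)-style covariance are made up for illustration and are not taken from the paper.

```python
import numpy as np

def mvn_loo_log_pred(y, mu, C):
    """log p(y_i | y_{-i}) for every i under y ~ N(mu, C), parameters held fixed."""
    C_inv = np.linalg.inv(C)
    g = C_inv @ (y - mu)          # g_i = [C^{-1} (y - mu)]_i
    c_diag = np.diag(C_inv)       # diagonal of the precision matrix
    loo_mean = y - g / c_diag     # conditional mean of y_i given the rest
    loo_var = 1.0 / c_diag        # conditional variance of y_i given the rest
    return -0.5 * np.log(2 * np.pi * loo_var) - 0.5 * (y - loo_mean) ** 2 / loo_var

# Hypothetical toy data: AR(1)-style covariance, so the outcomes are not independent.
n = 6
idx = np.arange(n)
C = 0.7 ** np.abs(idx[:, None] - idx[None, :])
mu = np.zeros(n)
y = np.random.default_rng(1).multivariate_normal(mu, C)

log_pred = mvn_loo_log_pred(y, mu, C)
print(log_pred.sum())  # pointwise log predictive densities summed, for these fixed parameters
```

The point of the identity is that no observation is ever physically removed: all n conditional densities come from one inverse of `C`.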

Aki’s post from last year, “Moving cross-validation from a research idea to a routine step in Bayesian data analysis,” connects this to the bigger picture.

Tangential to the post: I never understood the benefit of LOOCV compared to 10-fold or bootstrap validation. Training on N-1 datapoints, predicting the 1 held-out datapoint, and repeating across all datapoints seems like a way for models to be overtrained: the original (full) dataset barely changed at all!!! Maybe LOOCV is more useful for small datasets?

LOO is a way to estimate the expected loss for a single new data point, having trained the model on all of the other available data points. I’m not sure what “overtrained” means here: if you have an overfit model, that’ll show up in this score like any other. And generally, when I have deployed models, I don’t want to leave any training data on the table. Why would I?

You don’t leave training data on the table by fitting your selected model to your full dataset. This is different than selecting a model that generalizes best, based on cross-validation results.

LOO typically has a lower variance. For estimating OOS performance, it can also reduce the bias that comes from training on fewer data points than you will actually use.

There aren’t a whole lot of general results for the behavior of CV estimators.

Somebody–from my understanding, that’s not quite correct. LOOCV has lower bias and greater variance. You can refer to Elements of Statistical Learning for trade-offs associated with LOOCV and K-Fold.

Elements is flatly wrong in that chapter. I’ve also never understood their argument about a correlated training set.

Here’s a good summary:

https://stats.stackexchange.com/questions/61783/bias-and-variance-in-leave-one-out-vs-k-fold-cross-validation

Simulations usually show that variance decreases with K, but there are no general results for cross-validation.

Somebody–thank you for your comment. The bias-variance tradeoff as described in Elements is just a little confusing to me, and I just appreciate the dialogue.

There are computational tricks for computing exact LOO-CV without having to refit the model (the classic version uses the hat matrix, I think; I can’t remember the Bayesian one). So it’s a lot more efficient.
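For what it’s worth, the classic hat-matrix version can be sketched in a few lines. This assumes ordinary least squares with squared-error loss, where the leave-one-out residual equals the ordinary residual divided by one minus the leverage. The function names and simulated data are hypothetical, and the brute-force refit is included only to check the shortcut.

```python
import numpy as np

def loo_residuals_hat(X, y):
    """Exact LOO residuals for OLS from a single fit, using the hat-matrix diagonal."""
    Q, _ = np.linalg.qr(X)               # thin QR, so the hat matrix is H = Q Q'
    leverage = np.sum(Q ** 2, axis=1)    # h_ii, the diagonal of H
    beta = np.linalg.lstsq(X, y, rcond=None)[0]
    resid = y - X @ beta
    return resid / (1.0 - leverage)      # e_i / (1 - h_ii)

def loo_residuals_refit(X, y):
    """Brute-force LOO: refit n times, each time dropping one observation."""
    n = len(y)
    out = np.empty(n)
    for i in range(n):
        keep = np.arange(n) != i
        beta_i = np.linalg.lstsq(X[keep], y[keep], rcond=None)[0]
        out[i] = y[i] - X[i] @ beta_i
    return out

# Hypothetical simulated data for the comparison.
rng = np.random.default_rng(0)
X = np.column_stack([np.ones(30), rng.normal(size=(30, 2))])
y = X @ np.array([1.0, 2.0, -0.5]) + rng.normal(size=30)

fast = loo_residuals_hat(X, y)
slow = loo_residuals_refit(X, y)
print(np.allclose(fast, slow))           # the two agree up to floating-point error
print(np.mean(fast ** 2))                # LOO estimate of mean squared prediction error
```

The shortcut costs one fit instead of n, which is the efficiency gain mentioned above; it is specific to linear smoothers like OLS and does not carry over to arbitrary models.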