Mike Zyphur sent along this paper by Corinna Kruse:

This article draws attention to communication across professions as an important aspect of forensic evidence. Based on ethnographic fieldwork in the Swedish legal system, it shows how forensic scientists use a particular quantitative approach to evaluating forensic laboratory results, the Bayesian approach, as a means of quantifying uncertainty and communicating it accurately to judges, prosecutors, and defense lawyers, as well as a means of distributing responsibility between the laboratory and the court. This article argues that using the Bayesian approach also brings about a particular type of intersubjectivity; in order to make different types of forensic evidence commensurable and combinable, quantifications must be consistent across forensic specializations, which brings about a transparency based on shared understandings and practices. Forensic scientists strive to keep the black box of forensic evidence – at least partly – open in order to achieve this transparency.

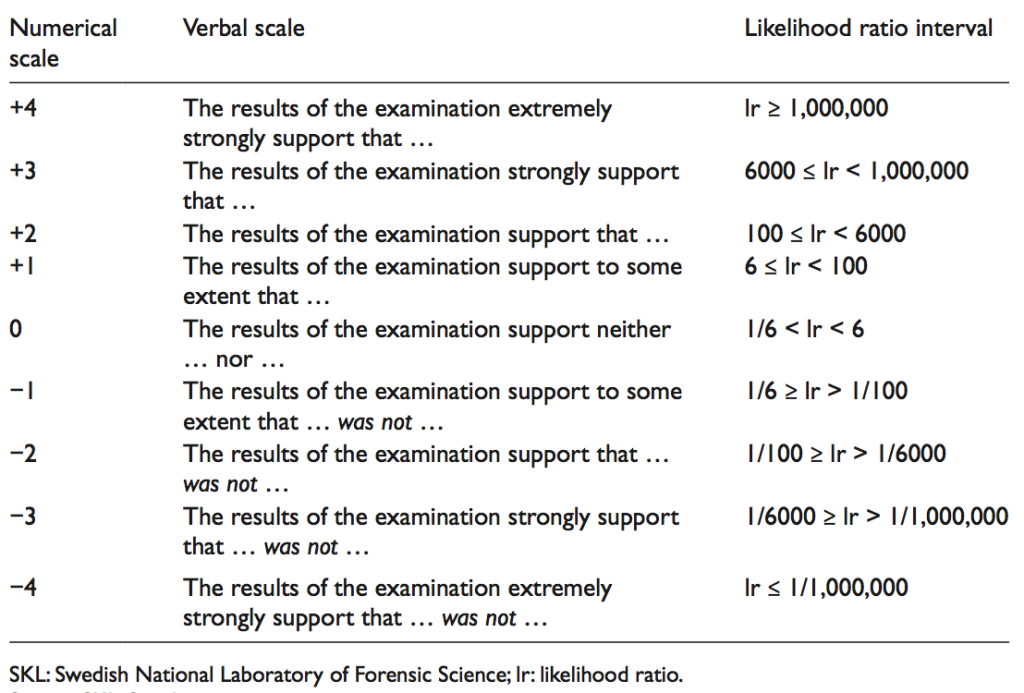

I like the linking of Bayes with transparency; this is related to my shtick about “institutional decision analysis” (this also appears in the decision analysis chapter of BDA). I don’t like all that likelihood-ratio stuff, though. In particular, I hate hate hate things like this:

To me, the whole point of probabilities is that they can be treated directly as numbers. If 5:1 odds doesn’t count as support for a hypothesis, this suggests to me that the odds are not really being taken at face value.

I agree that the “Numerical Scale” is disagreeable.

If, for some reason, one does not want to use a 1:50 or a 50:1 odds number, I would suggest using a straight log (exponent, Richter-ish) scale, e.g. 0 = 9:1 … 1:9, i.e. ~10**0; ±1 = 99:1 … 10:1 and 1:10 … 1:99, i.e. ~10**1; etc. That way the log-numeric scale would still compress the likelihoods while being mathematically derivable rather than ad hoc.
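One way to read the scale proposed above is as the base-10 log of the odds, truncated toward zero. The sketch below is only an illustration of that reading; the function name `log_scale` is made up here, and truncation (rather than rounding) is an assumption chosen so that everything between 1:9 and 9:1 lands on 0.

```python
import math


def log_scale(likelihood_ratio: float) -> int:
    """Map odds (as a ratio, e.g. 50.0 for 50:1) to a Richter-ish integer.

    Assumption: truncating log10 toward zero, so 1:9 .. 9:1 -> 0,
    10:1 .. 99:1 -> +1, 1:10 .. 1:99 -> -1, and so on.
    """
    if likelihood_ratio <= 0:
        raise ValueError("odds must be positive")
    return math.trunc(math.log10(likelihood_ratio))


if __name__ == "__main__":
    for odds in (5.0, 50.0, 1 / 50, 6000.0):
        print(f"odds {odds:g} -> scale {log_scale(odds)}")
```

On this reading, 5:1 sits at 0, 50:1 at 1, 1:50 at -1, and 6000:1 at 3.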

Is your objection to the very idea of a plain language summary, or are you more concerned that this particular table doesn’t honor the LR semantics well? (One could be happy with the concept in principle, while pointing out as you do that 5:1 odds shouldn’t fall into the no-support category unless you really don’t believe in LR’s in the first place)

Bxg:

Indeed, the problem seems to me that, if you really believed these numbers as numbers, you wouldn’t think it would take 6000-1 odds to get to “strongly support.”

Remember this is in the context of legal guilt. Presumably the enormous conservativeness is due to the high aversion to falsely imprisoning someone. Certainly you wouldn’t want to send a non-murderer to life in prison based on 5:1 evidence. Even though 5:1 evidence should probably be considered quite strong for a landlord-renter dispute… So really, they’ve combined the cost function and the evidence analysis into a single thing. I agree it’s wrong, but not totally unmotivated.

Indeed, and as your examples show, the consequences of the decision are as important as the probabilities that are computed. In particular, a jury decision or a judicial decision is just as much a decision as any, and as such ought to be looked at from the point of view of decision theory.
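To make the separation of evidence and cost concrete, here is a minimal sketch of the textbook two-action decision rule: act (say, convict) when the posterior odds exceed the ratio of the two error costs. The function names, the prior odds, and the cost numbers are all hypothetical; nothing here comes from the paper or from any actual court practice.

```python
def posterior_odds(prior_odds: float, likelihood_ratio: float) -> float:
    """Bayes' rule in odds form: posterior odds = prior odds * LR."""
    return prior_odds * likelihood_ratio


def should_act(
    prior_odds: float,
    likelihood_ratio: float,
    cost_false_positive: float,
    cost_false_negative: float,
) -> bool:
    """Act only if the expected loss of acting is below that of not acting.

    With p the posterior probability of the hypothesis, acting costs
    (1 - p) * cost_false_positive in expectation and not acting costs
    p * cost_false_negative, so acting wins when
    p / (1 - p) > cost_false_positive / cost_false_negative.
    """
    threshold = cost_false_positive / cost_false_negative
    return posterior_odds(prior_odds, likelihood_ratio) > threshold


if __name__ == "__main__":
    # Same 5:1 evidence, even prior odds, two made-up cost settings.
    print(should_act(1.0, 5.0, cost_false_positive=1.0, cost_false_negative=1.0))
    print(should_act(1.0, 5.0, cost_false_positive=100.0, cost_false_negative=1.0))
```

With equal error costs (something like the landlord-renter case) 5:1 evidence clears the bar; with a false positive counted as 100 times worse, the same evidence does not. The likelihood ratio itself is unchanged in both cases, which is the point: the conservativeness lives in the cost ratio, not in the evidence scale.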

+1

Thanks, I am in the midst of an institutional decision analysis. Surely, references will be helpful.