Michael Thaddeus writes:

Nearly forty years after their inception, the U.S. News rankings of colleges and universities continue to fascinate students, parents, and alumni. . . . A selling point of the U.S. News rankings is that they claim to be based largely on uniform, objective figures like graduation rates and test scores. Twenty percent of an institution’s ranking is based on a “peer assessment survey” in which college presidents, provosts, and admissions deans are asked to rate other institutions, but the remaining 80% is based entirely on numerical data collected by the institution itself. . . .

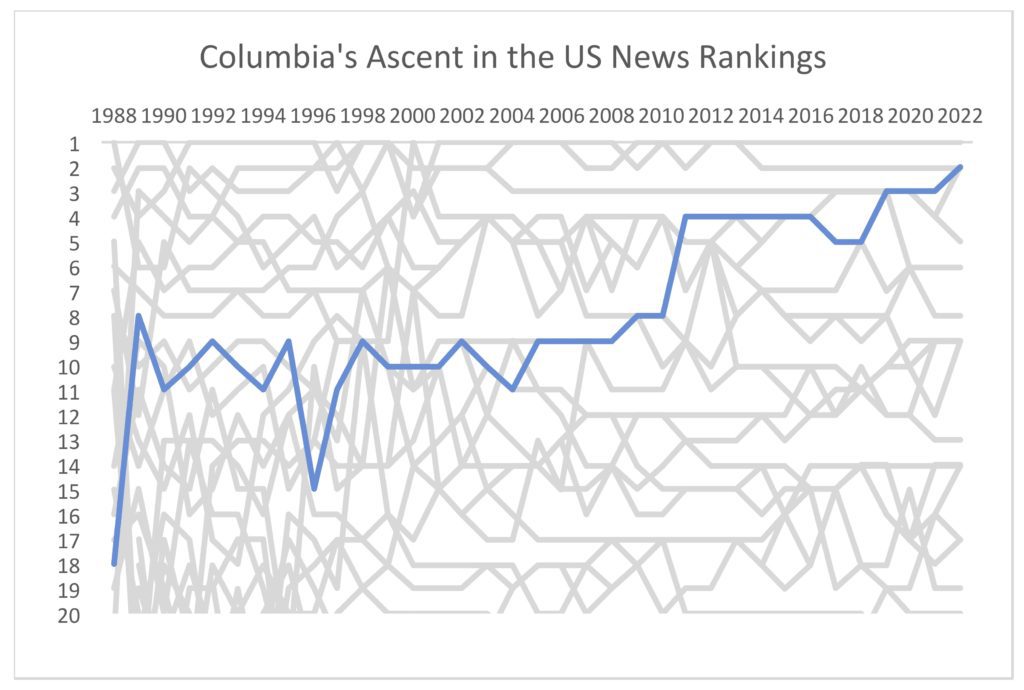

Like other faculty members at Columbia University, I have followed Columbia’s position in the U.S. News ranking of National Universities with considerable interest. It has been gratifying to witness Columbia’s steady rise from 18th place, on its debut in 1988, to the lofty position of 2nd place which it attained this year . . .

A few other top-tier universities have also improved their standings, but none has matched Columbia’s extraordinary rise. It is natural to wonder what the reason might be. Why have Columbia’s fortunes improved so dramatically? One possibility that springs to mind is the general improvement in the quality of life in New York City, and specifically the decline in crime; but this can have at best an indirect effect, since the U.S. News formula uses only figures directly related to academic merit, not quality-of-life indicators or crime rates. To see what is really happening, we need to delve into these figures in more detail.

Thaddeus continues:

Can we be sure that the data accurately reflect the reality of life within the university? Regrettably, the answer is no. As we will see, several of the key figures supporting Columbia’s high ranking are inaccurate, dubious, or highly misleading.

Dayum.

And some details:

According to the 2022 U.S. News ranking pages, Columbia reports (a) that 82.5% of its undergraduate classes have under 20 students, whereas (e) only 8.9% have 50 students or more.

These figures are remarkably strong, especially for an institution as big as Columbia. The 82.5% figure for classes in range (a) is particularly extraordinary. By this measure, Columbia far surpasses all of its competitors in the top 100 universities; the nearest runners-up are Chicago and Rochester, which claim 78.9% and 78.5%, respectively.

Although there is no compulsory reporting of information on class sizes to the government, the vast majority of leading universities voluntarily disclose their Fall class size figures as part of the Common Data Set initiative. . . .

Columbia, however, does not issue a Common Data Set. This is highly unusual for a university of its stature. Every other Ivy League school posts a Common Data Set on its website, as do all but eight of the universities among the top 100 in the U.S. News ranking. (It is perhaps noteworthy that the runners-up mentioned above, Chicago and Rochester, are also among the eight that do not issue a Common Data Set.)

According to Lucy Drotning, Associate Provost in the Office of Planning and Institutional Research, Columbia prepares two Common Data Sets for internal use . . .

She added, however, that “The University does not share these.” Consequently, we know no details regarding how Columbia’s 82.5% figure was obtained.

On the other hand, there is a source, open to the public, containing extensive information about Columbia’s class sizes. Columbia makes a great deal of raw course information available online through its Directory of Classes . . . Course listings are taken down at the end of each semester but remain available from the Internet Archive.

Using these data, the author was able to compile a spreadsheet listing Columbia course numbers and enrollments during the semesters used in the 2022 U.S. News ranking (Fall 2019 and Fall 2020), and also during the recently concluded semester, Fall 2021. The entries in this spreadsheet are not merely a sampling of courses; they are meant to be a complete census of all courses offered during those semesters in subjects covered by Arts & Sciences and Engineering (as well as certain other courses aimed at undergraduates). . . .

[lots of details]

Two extreme cases can be imagined: that undergraduates took no 5000-, 6000-, and 8000-level courses, or that they took all such courses (except those already excluded from consideration). . . . Since the reality lies somewhere between these unrealistic extremes, it is reasonable to conclude that the true . . . percentage, among Columbia courses enrolling undergraduates, of those with under 20 students — probably lies somewhere between 62.7% and 66.9%. We can be quite confident that it is nowhere near the figure of 82.5% claimed by Columbia.

Reasoning similarly, we find that the true . . . percentage, among Columbia courses enrolling undergraduates, of those with 50 students or more — probably lies somewhere between 10.6% and 12.4%. Again, this is significantly worse than the figure of 8.9% claimed by Columbia . . .

These estimated figures indicate that Columbia’s class sizes are not particularly small compared to those of its peer institutions. Furthermore, the year-over-year data from 2019–2021 indicate that class sizes at Columbia are steadily growing.
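The bounding argument in the passage above is simple arithmetic, and a small sketch may make it concrete. The code below is only an illustration: the `Course` type, the function names, and the enrollment numbers are all made up, and it skips the exclusions described in the elided details. It computes the share of small classes under the two extreme assumptions (undergraduates take none of the 5000-, 6000-, and 8000-level courses, or all of them) and reports the resulting interval.

```python
from dataclasses import dataclass


@dataclass
class Course:
    level: int        # course-number level, e.g. 1000, 4000, 6000 (illustrative)
    enrollment: int   # number of enrolled students


def share_under(courses, limit=20):
    """Fraction of the given courses whose enrollment is below `limit`."""
    return sum(c.enrollment < limit for c in courses) / len(courses)


def bounds_under(courses, ambiguous_levels=(5000, 6000, 8000), limit=20):
    """Interval for the small-class share under two extreme assumptions.

    Extreme 1: undergraduates take none of the ambiguous-level courses.
    Extreme 2: undergraduates take all of them.
    """
    without_ambiguous = [c for c in courses if c.level not in ambiguous_levels]
    with_ambiguous = list(courses)
    a = share_under(without_ambiguous, limit)
    b = share_under(with_ambiguous, limit)
    return min(a, b), max(a, b)


# Made-up enrollments, NOT taken from the spreadsheet described in the post.
courses = [
    Course(1000, 120), Course(1000, 18), Course(2000, 45), Course(3000, 15),
    Course(3000, 25), Course(4000, 12), Course(4000, 30), Course(4000, 8),
    Course(6000, 6), Course(8000, 9),
]

low, high = bounds_under(courses)
print(f"share of classes under 20 lies between {low:.1%} and {high:.1%}")
```

The same two-extremes calculation, with the comparison flipped to `enrollment >= 50`, would give an interval for the share of large classes.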

Ouch.

Thaddeus continues by shredding the administration’s figures on “percentage of faculty who are full time” (no, it seems that it’s not really “96.5%”), the “student-faculty ratio” (no, it seems that it’s not really “6 to 1”), “spending on instruction” (no, it seems that it’s not really higher than the corresponding figures for Harvard, Yale, and Princeton combined), and “graduation and retention rates” (the reported numbers appear not to include transfer students).

Regarding that last part, Thaddeus writes:

The picture coming into focus is that of a two-tier university, which educates, side by side in the same classrooms, two large and quite distinct groups of undergraduates: non-transfer students and transfer students. The former students lead privileged lives: they are very selectively chosen, boast top-notch test scores, tend to hail from the wealthier ranks of society, receive ample financial aid, and turn out very successfully as measured by graduation rates. The latter students are significantly worse off: they are less selectively chosen, typically have lower test scores (one surmises, although acceptance rates and average test scores for the Combined Plan and General Studies are well-kept secrets), tend to come from less prosperous backgrounds (as their higher rate of Pell grants shows), receive much stingier financial aid, and have considerably more difficulty graduating.

No one would design a university this way, but it has been the status quo at Columbia for years. The situation is tolerated only because it is not widely understood.

Thaddeus summarizes:

No one should try to reform or rehabilitate the ranking. It is irredeemable. . . . Students are poorly served by rankings. To be sure, they need information when applying to colleges, but rankings provide the wrong information. . . .

College applicants are much better advised to rely on government websites like College Navigator and College Scorecard, which compare specific aspects of specific schools. A broad categorization of institutions, like the Carnegie Classification, may also be helpful — for it is perfectly true that some colleges are simply in a different league from others — but this is a far cry from a linear ranking. Still, it is hard to deny, and sometimes hard to resist, the visceral appeal of the ranking. Its allure is due partly to a semblance of authority, and partly to its spurious simplicity.

Perhaps even worse than the influence of the ranking on students is its influence on universities themselves. Almost any numerical standard, no matter how closely related to academic merit, becomes a malignant force as soon as universities know that it is the standard. A proxy for merit, rather than merit itself, becomes the goal. . . .

Even on its own terms, the ranking is a failure because the supposed facts on which it is based cannot be trusted. Eighty percent of the U.S. News ranking of a university is based on information reported by the university itself.

He concludes:

The role played by Columbia itself in this drama is troubling and strange. In some ways its conduct seems typical of an elite institution with a strong interest in crafting a positive image from the data that it collects. Its choice to count undergraduates only, contrary to the guidelines, when computing student-faculty ratios is an example of this. Many other institutions appear to do the same. Yet in other ways Columbia seems atypical, and indeed extreme, either in its actual features or in those that it dubiously claims. Examples of the former include its extremely high proportion of undergraduate transfer students, and its enormous number of graduate students overall; examples of the latter include its claim that 82.5% of undergraduate classes have under 20 students, and its claim that it spends more on instruction than Harvard, Yale, and Princeton put together. . . .

In 2003, when Columbia was ranked in 10th place by U.S. News, its president, Lee Bollinger, told the New York Times, “Rankings give a false sense of the world and an inauthentic view of what a college education really is.” These words ring true today. Even as Columbia has soared to 2nd place in the ranking, there is reason for concern that its ascendancy may largely be founded, not on an authentic presentation of the university’s strengths, but on a web of illusions.

It does not have to be this way. Columbia is a great university and, based on its legitimate merits, should attract students comparable to the best anywhere. By obsessively pursuing a ranking, however, it demeans itself. . . .

Michael Thaddeus is a professor of mathematics at Columbia University.

P.S. A news article appeared on this story. It features this response by a Columbia spokesman:

[The university stands] by the data we provided to U.S. News and World Report. . . . We take seriously our responsibility to accurately report information to federal and state entities, as well as to private rankings organizations. Our survey responses follow the different definitions and instructions of each specific survey.

Wow. That’s not a job I’d want to have, to be responding to news reporters who ask whether it’s true that your employer is giving out numbers that are “inaccurate, dubious, or highly misleading.” Michael Thaddeus and I are teachers at the university and we have academic freedom. If you’re a spokesman, though, you can’t just say what you think, right? That’s gotta be a really tough part of the job, to have to come up with responses in this sort of situation. I guess that’s what they’re paying you for, but still, it’s gotta be pretty awkward.

I’ve always felt the ugrad US News rankings to be super susceptible to Goodhart’s law given how some of the criteria can be gamed, as mentioned. Though I think they’ve maybe changed things around a bit, e.g. acceptance rates are now weighted at 0? compared to how they were before, as e.g. highlighted in https://www.bostonmagazine.com/news/2014/08/26/how-northeastern-gamed-the-college-rankings/

Opening plot reminds me of a similar visualization I made a couple years back when trying to figure some of this out, if anyone’s interested: https://i.imgur.com/rXZOH8e.mp4

We’ve talked about dichotomania on here before but what about univariate-mania?

Fascinating! I hope Thaddeus’ analysis is widely circulated. I’m not sure what’s worse, the idiocy of the USNews rankings or the apparent sleaziness of Columbia, but the latter bothers me more, perhaps because higher education in general seems increasingly ethically challenged, or perhaps because I’m at a university and so in some sense complicit.

The Univ. of Oregon, by the way, has a muck-raking blog that is sometimes frustrating but overall useful for airing dirty laundry: https://uomatters.com/ . Every place should.

Are all rankings idiotic or specifically the US news rankings?

Rahul:

A ranking is three things: the numbers that go into the ranking, the ranking itself, and how it is received. I’d say it’s some sort of social problem that the U.S. News ranking is taken seriously and gets so much publicity. If it didn’t have the publicity, it would just be some crappy ranking and we could all just laugh at it.

In any case, I don’t think all rankings are problematic. If the ranking is transparent, not gamed, and just taken to be what it is and nothing more, then it can provide information. Even there, though, I’d prefer a numerical rating to a ranking. For example, if there’s a report giving 4-year or 6-year graduation rates among students at different colleges, then it can make sense to list them in order from highest to lowest, but in that case I’m more interested in a college’s graduation rate than its position on the list.

What kind of information do you think graduation rates provide? I think people want to use it to say something about the quality of the institution, but in practice it probably says much more about the academic preparation and the financial resources of the students who attend it.

Suppose I’m accepted at a university with a low graduation rate, and that I have no reason to believe I’m atypical of the other students. My estimate of my own graduation probability should be low. I’d probably rather go someplace I’m more likely to be able to graduate from.

@Phil,

Well, that might be the case, if the students who graduate from that other place are like you. If students from that other institution who are like you are amongst the percentage who don’t graduate, then you might not be better off, and you might be worse off (if those schools basically ignore, say, students like you).

I suspect that graduation rates are so highly correlated with how selective the school is that you wouldn’t get any additional information from them by themselves (and wealth of the students is probably correlated with how selective the school is). If you could adjust for selection and wealth, then maybe graduation rates would be more informative, but are such adjustments made in rankings?

I guess my point is that a school could have a relatively low graduation rate but, given the demographics of its students, be doing a better job than a school with a higher rate and different demographics.
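To illustrate that last point with numbers, here is a minimal sketch using invented counts. The school names, the two student groups, and every figure are hypothetical. It shows how a school can graduate a higher fraction of students within each group and still post a lower overall rate, purely because of who enrolls.

```python
# Hypothetical (graduates, enrolled) counts by student group. Not real data.
schools = {
    "Selective U": {"well_resourced": (855, 900), "under_resourced": (60, 100)},
    "Access U": {"well_resourced": (96, 100), "under_resourced": (630, 900)},
}


def overall_rate(groups):
    """Graduation rate pooled over all student groups at one school."""
    grads = sum(g for g, _ in groups.values())
    enrolled = sum(n for _, n in groups.values())
    return grads / enrolled


def group_rates(groups):
    """Graduation rate within each student group at one school."""
    return {name: g / n for name, (g, n) in groups.items()}


for school, groups in schools.items():
    per_group = ", ".join(f"{k}: {v:.0%}" for k, v in group_rates(groups).items())
    print(f"{school}: overall {overall_rate(groups):.1%} ({per_group})")
```

In this made-up example, "Access U" does better for both groups of students yet has the lower headline rate, which is why an unadjusted graduation rate says as much about the student body as about the institution.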

Students don’t graduate for many reasons. A school can provide an environment that helps their students succeed. When I got my Ph.D., they asked a few of us how they could help the students. We told them. They didn’t do it.

@Joe:

Graduation rates are a function of *both* the students enrolling and the institution. Here (U. of Oregon), the 4-year graduation rate went from 50% to 61% from 2014-19. This is a very large change. Little changed in terms of students, but coherence of advising and other related things got better. [https://around.uoregon.edu/content/uos-four-and-six-year-graduation-rates-reach-new-high]

If you want to know the most important determinant of graduation rates, it is tuition. Public universities have considerably lower 4-year graduation rates than private ones (with only a few exceptions). While there are some confounding variables (such as ACT scores), you will consistently find that tuition accounts for most of the variation. Unfortunately, this does not say very good things about higher education.

A very recent case involved Temple University:

https://abcnews.go.com/US/wireStory/temple-business-dean-convicted-rankings-scandal-81471751

“PHILADELPHIA — A jury convicted the former dean of Temple University’s business school on charges related to a scheme to falsely boost the school’s rankings.

The jury deliberated for less than an hour Monday before deciding Moshe Porat, 74, was guilty of federal conspiracy and wire fraud charges, the Philadelphia Inquirer reported.”

In order to put all the cheating in context, consider this famous quip:

Exasperated first person: “Does everybody cheat?”

Second person: “Don’t look at me, I don’t know everybody.”

“Porat boosted the university’s online MBA program to the top spot on the U.S. News & World Report rankings for four years in a row. With help from two subordinates, who are also charged, Porat submitted false information about student test scores, work experience and other data.”

Excellent. You have pretty smart people at the top to cheat the best, so in the end the rankings still work.

Anon:

Yeah, that’s like the story of the CS exam where the only way to pass the class is to figure out how to hack the computer and copy the answers. I guess that works well for the b-school too: the only way to win is to cheat. The journalism school could have a variant where the only way to pass is to dig up enough dirt on the instructor that he or she has no choice but to give you a good grade.

https://en.wikipedia.org/wiki/Kobayashi_Maru

You gotta know how to take the test, right? The rules and the instructions aren’t necessarily on the same page. Ahem.

According to https://en.wikipedia.org/wiki/Bill_Swiacki, back in the 1940s, Columbia

“gained national fame when his [Bill Swiacki’s] nine pass receptions led Columbia to a 21-20 victory over Army, breaking the Cadets’ 32-game winning streak.”

At least in football, it has been downhill since then.

I’ll say. I was at Macalester in the late 1980s when Columbia football failed to snatch our record away.

I have a friend who transferred in to Columbia and is now a male model, so the skepticism about transfer student ability/training checks out. (jk he is a pretty smart guy)

Why not just buy a controlling interest in US News?

On a related note, what’s the going donation size to get a kid on the, I don’t know, spelling bee team?

I am surprised that no one has commented on the well-known unreliability of

1. self reporting

and

2. the third decimal place as a deciding factor in ranking:

“The 82.5% figure for classes in range (a) is particularly extraordinary. By this measure, Columbia far surpasses all of its competitors in the top 100 universities; the nearest runners-up are Chicago and Rochester, which claim 78.9% and 78.5%, respectively.”

I worked at UPenn a while back, and there was a rankings-related incident that struck me as problematic, and has become kind of iconic for me.

The graduate education school wanted to increase its ranking. So what did it do? It raised the minimum score for incoming students.

They did nothing in terms of educational reform, to improve the ranking. Nothing to actually improve the quality of education.*

At an education school. Aaaarrrggghh.

* (at least IMO, although I suppose some people think that raising the minimum score for incoming students does improve students’ educational experience).

I wrote a book chapter about gaming US News rankings so I’m not in the least bit surprised. What a devastating report! I’m struggling to name the culprit… who’s more responsible? the parents and kids who pick school A over B because A is one rank higher on US News? the administrators who one-up each other to better their school’s image? US News staff who are providing a service that is in apparently high demand? Various organizations that do not fact-check submitted data?

No moral comparison here – the cheater and liar is the primary culprit (though there’s room for others who contribute to corruption). In an honorable world we could trust each other. Columbia’s administrators dishonor themselves and their school by their lies and then by doubling down on those lies. It’s not a victimless beauty contest, either, as the rankings may influence some students. Columbia also harms all of us, by validating the sense of corruption in the country. Columbia also harms itself, as it offsets the good it does do by inviting doubt across the entire university, and by not maintaining real metrics on performance they harm their own ability to recognize their own performance so they can best act to improve it. US News has created a data product that people like, one that, even if imperfect, helps students sift through their (too-many) alternatives. They describe their methods and provide supporting data. (Unlike Columbia.) It’s natural for students and parents to want comparative data to explore which school might be best for them, and US News provides comparative data, both at the sensational if least accurate summary level and (more usefully) at the detail level. This said, U.S. News does have some contribution to the lie, by not setting up a reasonable audit system. And we as a society contribute by being so data-averse and gullible as to not demand better data.

“By obsessively pursuing a ranking, however, it demeans itself. . . .” and I thought that you could only do that by regression!

Shameless self-promotion: For an (empirical) Bayesian take on ranking see: Invidious Comparisons: Ranking and Selection as Compound Decisions, https://arxiv.org/abs/2012.12550 forthcoming in Econometrica.

Today in the New York Times https://www.nytimes.com/2022/03/17/us/columbia-university-rank.html

“Columbia claimed that 100 percent of its faculty had “terminal degrees,” the highest in their field; Harvard, for instance, claimed 91 percent, [Dr. Thaddeus] said.”

“Columbia officials said that Dr. Thaddeus was fixated on the Ph.D., but that in many fields — like writing — that might not be the relevant degree. The 100 percent figure was rounded up, officials said…”

“The 100 percent figure was rounded up” . . . that’s hilarious!

Columbia has another relatively controversial way of admitting students with significantly lower academic scores into their undergraduate program. Admission is rolling: students can apply at any time, and they are informed of their acceptance within a few weeks. It’s called the School of General Studies. Students admitted to GS are part of the undergraduate program and attend the same classes as the students admitted to Columbia College. GS has roughly 2600 undergraduates, so the class size may even be more overstated than Professor Thaddeus has stated. Unfortunately, for the purpose of US News reporting, these undergraduates are excluded from the numbers provided to US News or Forbes etc. The average HS GPA is 3.7, SAT 1260 – 1450, ACT 29-32. Average acceptance rate is 33%. If rankings are to be calculated, then please do it accurately to include all undergraduate schools. No other reputable university separates the stats for students admitted to the School of Arts and Science and then ignores the School of Engineering. This is just straight-up dishonest of Columbia University.

In comparing my own experience at Columbia, I find that class sizes averaged 12 students. My instructors were across the board excellent, compared to a previous top 50 school I attended as an undergrad. Finally, I think Obama was a transfer to Columbia, so maybe not all transfers are so bad. This article by a Columbia math prof. manipulates and singles out its own criteria and is highly speculative in areas as well. Always question your source!

Nicholas:

I think Columbia is a wonderful university and I don’t think Michael Thaddeus would disagree. He never claimed otherwise. Nor did he ever say that “all transfers are so bad” or even that any transfers are bad. What he demonstrated is that some of the numbers used by Columbia in their U.S. News ranking are incorrect.

I attended Columbia GS and confirm that a two-tier system does exist. We received far fewer funding resources and are kind of looked down upon (e.g., “GS is the backdoor of Columbia”). It really makes little sense to segregate the undergraduate schools, other than to game the rankings and to profiteer (a trait of professional master’s programs, I might add).

Also, let’s not forget that most classes contain significant numbers of Barnard students, yet they are conspicuously absent from these statistics/analyses.