Adam Connor-Sax sent this question to Philip Greengard:

Do you or anyone you know work with or know about (human) population forecasting in small geographies (election districts) and short timescales (2-20 years)? I can imagine just looking at the past couple years and trying to extrapolate but presumably there are models of population dynamics which one could fit and that would work better.

The thing that comes up in the literature, cohort component models, seems more geared to larger populations and larger timescales, countries over decades, say. But maybe I’m just finding the wrong sources so far.

Philip forwarded this to me, and I forwarded it to Leontine Alkema, a former Columbia colleague now at the University of Massachusetts who’s an expert in Bayesian demographic forecasting, and Leontine in turn pointed us to Monica Alexander at the University of Toronto and Emily Peterson at Emory University.

Monica and Emily had some answers!

Here’s Emily:

I can just speak to what we work on at Emory biostatistics. Lance Waller and I have worked with other collaborators on population estimation and forecasting for small areas (e.g., counties and census tracts), accounting for population uncertainty, mainly for US small areas, but we have also done some work in the UK. We have looked at the use of multiple data sources, including official statistics and WorldPop, across small administrative units. This work commonly combines small area estimation and measurement error models.

And Monica:

Here’s a few different approaches I’m aware of:

– A common approach is the Hamilton-Perry Method, which is sort of a cut down version of the cohort component projection method. David Swanson and others have published a lot about this, see eg https://www.ncbi.nlm.nih.gov/pmc/articles/PMC2822904/ This is mostly deterministic projection; I’ve started some work with a student incorporating this approach into Bayesian models.

– Emily and others’ work, as she mentioned; my understanding of this is that they incorporate various data/estimates on population counts, and project those, accounting for different error sources (see eg here: https://arxiv.org/abs/2112.09813)

– A recent paper by Crystal Yu (Adrian Raftery’s student) describes a model that builds off the UN population projections approach: https://read.dukeupress.edu/demography/article/60/3/915/364580/Probabilistic-County-Level-Population-Projections

– Classical cohort component models at small geographic areas are tricky, because there’s so many parameters to estimate and the data often aren’t very good (even in the US, particularly for migration), which is why @Adam you aren’t seeing much for small areas — migration makes things hard. Leontine and I did a subnational cohort component model (see paper here: https://read.dukeupress.edu/demography/article/59/5/1713/318087/A-Bayesian-Cohort-Component-Projection-Model-to) but the focus was low- and middle-income countries, and women of reproductive age.
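For readers who haven't seen the Hamilton-Perry method that Monica mentions, here is a minimal sketch of one projection step, not taken from any of the papers above. It assumes two population counts for the same area, taken one age-group width apart (e.g., 10-year age groups and counts 10 years apart), with the last age group open-ended. The function name `hamilton_perry_step`, the `parent_groups` argument, and the numbers are all made up for illustration. One simplification to flag: the usual method projects the youngest group with a child-woman ratio, but since this sketch has no breakdown by sex it uses a ratio of children to all adults in the chosen age groups.

```python
import numpy as np


def hamilton_perry_step(pop_prev, pop_curr, parent_groups=slice(2, 5)):
    """Project one step ahead using cohort change ratios (CCRs).

    pop_prev, pop_curr: counts by age group at the two most recent time
    points, one age-group width apart; the last group is open-ended.
    parent_groups: hypothetical choice of age groups treated as "parents"
    (here groups 2..4, i.e. ages 20-49 with 10-year groups).
    """
    pop_prev = np.asarray(pop_prev, dtype=float)
    pop_curr = np.asarray(pop_curr, dtype=float)
    proj = np.zeros(len(pop_curr))

    # Closed age groups: CCR_i = P_i(t) / P_{i-1}(t-k), then
    # P_i(t+k) = CCR_i * P_{i-1}(t). Deaths and net migration are
    # absorbed into the ratio rather than modeled separately.
    ccr = pop_curr[1:-1] / pop_prev[:-2]
    proj[1:-1] = ccr * pop_curr[:-2]

    # Open-ended oldest group: it is fed by the last two groups.
    ccr_open = pop_curr[-1] / pop_prev[-2:].sum()
    proj[-1] = ccr_open * pop_curr[-2:].sum()

    # Youngest group: apply the current child-to-adult ratio to the
    # projected adults (a simplification of the child-woman ratio).
    child_ratio = pop_curr[0] / pop_curr[parent_groups].sum()
    proj[0] = child_ratio * proj[parent_groups].sum()
    return proj


# Made-up counts for age groups 0-9, 10-19, ..., 60-69, 70+.
pop_2010 = [5200, 5600, 6100, 5900, 6300, 5400, 3900, 3100]
pop_2020 = [4800, 5500, 6000, 6400, 6000, 6200, 4900, 3600]
pop_2030 = hamilton_perry_step(pop_2010, pop_2020)
print(np.round(pop_2030))
```

This is a deterministic point projection with no uncertainty attached, which is the limitation Monica is pointing to when she talks about bringing the approach into Bayesian models.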

Adam followed up:

I’m working with an organization that directs political donations to Democratic candidates for US state legislatures. They are interested in improving their longer-term strategic thinking about which state-legislative districts (typically 40,000 – 120,000 people) may become competitive (or cease being competitive) over time and also, perhaps, in the ways that changing demographics might lead to changing campaign strategies.

From the small bit of literature search that I’ve done—which included most helpfully “Methods For Small Area Population Forecasts: State-of-the-Art and Research Needs” on which Dr. Alexander is a co-author!—I see various things I might try, likely starting with a simplified cohort-component model of some sort. I will look at all the references below.

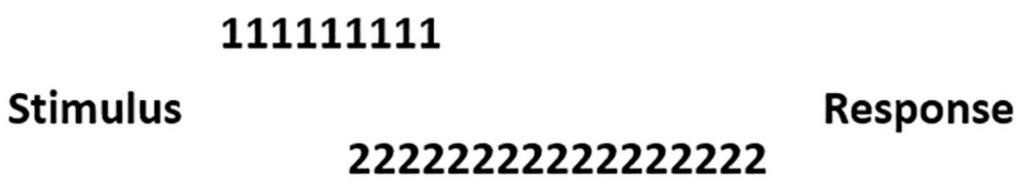

One wrinkle I am struggling with is that for political modeling (in my case, voter turnout and party preference), there are mutable characteristics, e.g., educational-attainment, which are important. But in my brief look so far, none of these models are trying to project mutable characteristics. Which makes sense, since they are not simply a product of births, deaths and migration. So, because this is all new to me, I’m not quite sure if I should be trying to find a model which is amenable to the addition of education in some way, or choose an existing model for the population growth and then somehow try to model educational-attainment shifts separately and then combine them.

BTW, Dr. Peterson, I just looked a bit at your paper “A Bayesian hierarchical small-area population model accounting for data source specific methodologies from American Community Survey, Population Estimates Program, and Decennial Census data” and that is helpful/interesting in a different way! The work Philip and I do together is about producing small area estimates of population by combining various marginals at the scale of the small area (state-legislative district) and then modeling the correlation-structure using data at larger geographic scales (state & national). This is driven by the limits of what the ACS provides at scales below Public Use Microdata Areas. I’ve also thought some about trying to incorporate the more accurate decennial census but hadn’t gotten very far. Your paper will be a good motivation to return to that part of the puzzle!

I’m sharing this conversation with all of you for three reasons:

1. Demographic forecasting is important in social science.

2. The problem is difficult and relates to other statistical challenges.

3. It’s hard to find things in the literature, and personal connections can help a lot.

I guess that the vast majority of you don’t have the personal connections that would allow you to easily find the above references. (You have your own social networks, just not so focused on quantitative social science.) The above post is a service to all of you, also a demonstration of how these connections can work.