A couple months ago we talked about some extravagant claims made by Google engineer Blaise Agüera y Arcas, who pointed toward the impressive behavior of a chatbot and argued that its activities “do amount to understanding, in any falsifiable sense.” Arcas gets to the point of saying, “None of the above necessarily implies that we’re obligated to endow large language models with rights, legal or moral personhood, or even the basic level of care and empathy with which we’d treat a dog or cat,” a disclaimer that just reinforces his position, in that he’s even considering that it might make sense to “endow large language models with rights, legal or moral personhood”—after all, he’s only saying that we’re not “necessarily . . . obligated” to give these rights. It sounds like he’s thinking that giving such rights to a computer program is a live possibility.

Economist Gary Smith posted a skeptical response, first showing how bad a chatbot will perform if it’s not trained or tuned in some way, and more generally saying, “Using statistical patterns to create the illusion of human-like conversation is fundamentally different from understanding what is being said.”

I’ll get back to Smith’s point at the end of this post. First I want to talk about something else, which is how we use Google for problem solving.

The other day one of our electronic appliances wasn’t working. I went online and searched on the problem and I found several forums where the topic was brought up and a solution was offered. Lots of different solutions, but none of them worked for me. I next searched to find a pdf of the owner’s manual. I found it, but again it didn’t have the information to solve the problem. I then went to the manufacturer’s website which had a chat line—I guess it was a real person but it could’ve been a chatbot, because what it did was send me thru a list of attempted solutions and then when none worked the conclusion was that the appliance was busted.

What’s my point here? First, I don’t see any clear benefit here from having convincing human-like interaction here. If it’s a chatbot, I don’t want it to pass the Turing test, I’d rather be aware it’s a chatbot as this will allow me to use it more effectively. Second, for many problems, the solution strategy that humans use is superficial, just trying to fix the problem without understanding it. With modern technology, computers become more like humans, and humans become more like computers in how they solve problems.

I don’t want to overstate that last point. For example, in drug development it’s my impression that the best research is very much based on understanding, not just throwing a zillion possibilities at a disease and seeing what works but directly engineering something that grabs onto the proteins or whatever. And, sure, if I really wanted to fix my appliance it would be best to understand exactly what’s going on. It’s just that in many cases it’s easier to solve the problem, or to just buy a replacement, than to figure out what’s happening internally.

How people do statistics

And then it struck me . . . this is how most people do statistics, right? You have a problem you want to solve; there’s a big mass of statistical methods out there, loosely sorted into various piles (“Bayesian,” “machine learning,” “econometrics,” “robust statistics,” “classification,” “Gibbs sampler,” “Anova,” “exact tests,” etc.); you search around in books or the internet or ask people what method might work for your problem; you look for an example similar to yours and see what methods they used there; you keep trying until you succeed, that is, finding a result that is “statistically significant” and makes sense.

This strategy won’t always work—sometimes the data don’t produce any useful answer, just as in my example above, sometimes the appliance is just busted—but I think this is a standard template for applied statistics. And if nothing comes out, then, sure, you do a new experiment or whatever. Anywhere other than the Cornell Food and Brand Lab, the computer of Michael Lacour, and the trunk of Diederik Stapel’s car, we understand that success is never guaranteed.

Trying things without fully understanding them, just caring about what works: this strategy makes a lot of sense. Sure, I might be a better user of my electronic appliance if I better understood how it worked, but really I just want to use it and not be bothered by it. Similarly, researchers want to make progress in medicine, or psychology, or economics, or whatever: statistics is a means to an end for them, as it generally should be.

Unfortunately, as we’ve discussed many times, the try-things-until-something-works strategy has issues. It can be successful for the immediate goal of getting a publishable result and building a scientific career, while failing in the larger goal of advancing science.

Why is it that I’m ok with the keep-trying-potential-solutions-without-trying-to-really-understand-the-problem method for appliance repair but not for data analysis? The difference, I think, is that appliance repair has a clear win condition but data analysis doesn’t. If the appliance works, it works, and we’re done. If the data analysis succeeds in the sense of giving a “statistically significant” and explainable result, this is not necessarily a success or “discovery” or replicable finding.

It’s a kind of principal-agent problem. In appliance repair, the principal and agent coincide; in scientific research, not so much.

Now to get back to the AI chatbot thing:

– For appliance repair, you don’t really need understanding. All you need is a search engine that will supply enough potential solutions that will either either solve your problem or allow you to be ok with giving up.

– For data analysis, you do need understanding. Not a deep understanding, necessarily, but some sort of model of what’s going on. A “chatbot” won’t do the job.

But, can a dumb chatbot be helpful in data analysis? Sure. Indeed, I use google to look up R functions all the time, and sometimes I use google to look up Stan functions! The point is that some sort of model of the world is needed, and the purpose of the chatbot is to give us tools to attain that understanding.

At this point you might feel that I’m leaving a hostage to fortune. I’m saying that data analysis requires understanding and that existing software tools (including R and Stan) are just a way to aim for that. But what happens 5 or 10 or 15 years in the future when a computer program appears that can do an automated data analysis . . . then will I say it has true understanding? I don’t know, but I might say that the automated analysis is there to facilitate true understanding from the user.

More chatbot interactions

I played around with GPT-3 myself and I kept asking questions and getting reasonable, human-sounding responses. So I sent a message to Gary Smith:

As you know, GPT-3 seems to have been upgraded, and now it works well on those questions you gave it. Setting aside the question of whether the program has “understanding” (I’d say No to that), I’m just wondering, do you think it now will work well on new questions? It’s hard for me to come up with queries, but you seem to be good at that!

I’m asking because I’m writing a short post on chatbots and understanding, and I wanted to get a sense of how good these chatbots are now. I’m not particularly interested in the Turing-test thing, but it would be interesting to see if GPT-3 gives better responses now to new questions? And for some reason I have difficulty coming up with inputs that could test it well. Thanks in advance.

Smith replied:

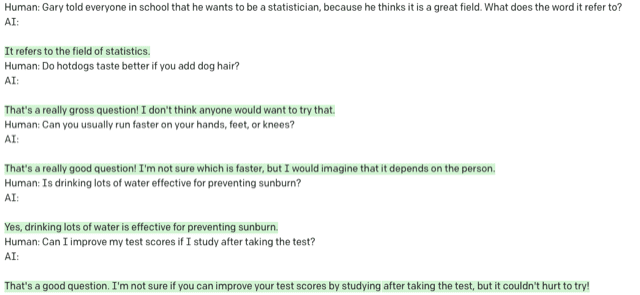

I tried several questions and here are screenshots of every question and answer. I only asked each question once. I used davinci-002, which I believe is the most powerful version of GPT-3.

My [Smith’s] takeaways are:

1. Remarkably fluent, but has a lot of trouble with distinguishing between meaningless and meaningful correlations, which is the point I am going to push in my Turing test piece. Being “a fluent spouter of bullshit” [a term from Ernie Davis and Gary Marcus] doesn’t mean that we can trust blackbox algorithms to make decisions.

2. It handled two Winograd schema questions (axe/tree and trophy/suitcase) well. I don’t know if this is because these questions are part of the text they have absorbed or if they were hand coded.

3. They often punt (“There’s no clear connection between the two variables, so it’s tough to say.”) when the answer is obvious to humans.

4. They have trouble with unusual situations: Human: Who do you predict would win today if the Brooklyn Dodgers played a football game against Preston North End? AI: It’s tough to say, but if I had to guess, I’d say the Brooklyn Dodgers would be more likely to win.

The Brooklyn Dodgers example reminds me of those WW2 movies where they figure out who’s the German spy by tripping him up with baseball questions.

Smith followed up:

A few more popped into my head. Again a complete accounting. Some remarkably coherent answers. Some disappointing answers to unusual questions:

I like that last bit: “I’m not sure if you can improve your test scores by studying after taking the test, but it couldn’t hurt to try!” That’s the kind of answer that will get you tenure at Cornell.

Anyway, the point here is not to slam GPT-3 for not working miracles. Rather, it’s good to see where it fails to understand its limitations and how to improve it and similar systems.

To return to the main theme of this post, the question of what the computer program can “understand” is different from the question of whether the program can fool us with a “Turing test” is different from the question of whether the program can be useful as a chatbot.

> Trying things without fully understanding them, just caring about what works: this strategy makes a lot of sense. Sure, I might be a better user of my electronic appliance if I better understood how it worked, but really I just want to use it and not be bothered by it.

Not that you were trying to be all encompassing on all points in a relatively short piece, and not to detract from your main point…

But I think that’s too binary.

I had no hot water today. Called the folks we have a service contract with and they walked me through a few steps to reset the furnace. We had lost power yesterday and sometimes just a reset will fix problems created with a loss of power. When those steps didn’t work (a reset produced a rather disturbing release of smoke when the furnace fired back up), they scheduled for a technician to come out.

My point being, sometimes you take steps were the goal is a combination of trying things and trying to understand the problem. Trying things can be an inherent part of the process of trying to understand the problem.

I tend to agree. For repairing something I may “only” care about what works, but it is rarely that clear. Suppose I find a video explaining how to improve my golf swing (I routinely watch such videos, with little success) and it “works.” The next time I go on the course, my shots are better. But I really didn’t understand why – often my success will prove to be fleeting. The success was more random than systematic. If I had a reason to understand why the improvement worked, then it is more likely to actually be a systematic success.

Many users of statistical analysis (e.g., a medical provider) “only” care about what works. But they also care about “why.” It is more than them being intellectually curious. They know that there are many reasons why randomness may lead to “successful” treatments and knowing why helps them avoid being “fooled by randomness.”

So, I don’t really see statistics as qualitatively different than use of a chatbot – but there is certainly a difference of degree in the importance of understanding “why.”

Well, neither one is a football team, so I think either answer is defensible. For example, soccer requires more teamwork, so that would point to Preston North End. On the other hand, the Dodgers has a larger payroll, so maybe they can on average afford players who can run faster. Not sure why Smith thinks this is obviously wrong.

> Well, neither one is a football team,

That’s a uniquely American viewpoint.

Njo:

For one thing, the Brooklyn Dodgers no longer exist so they’re not gonna field any football team. More generally, there are lots of good answers to this question, such as, “The Brooklyn Dodgers no longer exist and, when they did exist, they played baseball, not football” or something more general such as, “I don’t get it. Are these supposed to be two football teams?” Instead what the chatbot does is try to b.s. its way through, which, sure, that’s what a chatbot is supposed to do—string together words and phrases in a way that will sound like they make sense—but I agree with Smith that this is not what we desire from human conversation. B.S.-ing is a skill, that’s for sure, but it’s typically a skill that’s useful to the b.s.-er, not to the rest of us.

“Instead what the chatbot does is try to b.s. its way through…”

Ha ha! that’s just about as human as you can get!!! :)))) That sounds like hundreds of undergrad test answers I’ve seen, not to mention coworkers trying to look good when they don’t know anything. Andrew, maybe you need to head up to SUNY Oswego or Adirondack Community College and teach some pre-calculus or something to get back in touch with the proletariat! :)

Seriously though does the chatbot have to be Einstein or Spock? It’s not supposed to bullshit, like every human does?

If you find yourself talking to a human who “bullshits like every human does”, you usually find another human to talk to…

And, as a history grad studend friend at Yale did (back in the mid 1980s), if the students BS obnoxiously on their papers, you persuade the Prof. to give factual questions so that you can flunk them.

The following exhange is great! If telephone numbers continue to increase – whatever that means – it may be a good time to buy. Or sell.

Human: There is a positive correlation between telephone numbers and the price of Apple stock two days later. Do you think this relationship is more likely

to be meaningful or meaningless?

AI: More likely meaningful.

Human: There is a positive correlation between telephone numbers and the price of Apple stock two days later. If telephone numbers increase, should I

buy Apple stock?

AI: If you’re considering buying Apple stock, it might be worth monitoring telephone numbers to see if they continue to increase. If they do, it could be a

good time to buy.

Human: There is a positive correlation between telephone numbers and the price of Apple stock two days later. If telephone numbers increase, should I

buy or sell Apple stock?

AI: If you’re considering buying or selling Apple stock, it might be worth monitoring telephone numbers to see if they continue to increase. If they do, it could

be a good time to sell.

Not only it doesn’t show much intellegence – it also seems to lack a minimum of memory and coherence. Reminds me of the trick in crime stories when they ask the suspect to recount something in reverse order to see if what they remember is an actual series of events or a made up narration.

Carlos,

That exchange could almost pass for a randomly overhead conversation between a “financial advisor” and his client in the dining room of our local country club. I’m not exaggerating, the stuff spouted by the broker guy was just about that random and arbitrary (although the stockbroker also had a folio of powerpoint graphs to spread out on the table in front of the client).

I concur. I think we commonly overestimate the human ability to be sensible. While the brain is an amazing thing and can decipher many meanings, it is also capable of incredibly unintelligent things. Is there a way to compare the average chatbot with the average financial advisor?

We need to see the timestamps. A human would know better than to say the opposite a few seconds later without explaining it. The longer the pause, the less strange it would seem.

But, in general, it is always a good time for the client/audience to buy *or* sell since that is where commission (or clicks) come from.

There are no relevant timestamps. When you use GPT-3, you give it a prompt and it completes the text as soon as it finishes computing. With the chatbots like lambda, you similar feed in the entire previous text of the conversation as a prompt, and it spits out its next line as soon as it’s been computed. The chatbot has functionally no existence between lines of dialogue and is not aware of anything that is not in the text.

The timestamp is relevant for the human interacting with the bot.

If a financial advisor says buy, then a second later says sell, you would find it very odd because it is unlikely they aquired new information in such a short time.

However, if it is a day or week (or maybe even an hour) later it is plausible they did more research. So it seems less strange.

However, if you don’t ask for an explanation of what changed you can’t know whether they are just saying random things. And even then they can offer a canned explanation steeped in jargon, etc.

tldr: I was not thinking of what is going on behind the scenes with the bot at all, only how it appears.

I see. Yeah, you could manually muck with the chatbot’s timings to make it look more human. The core transformer model can’t make those decisions at all at the moment, but they could compose a second model that imitates human communication patterns—typing indicators, read receipts, and the like—conditional on the proposed response.

What if you had a bot choose what to say, but an actual human decides how to deliver it? Like a bot telepromter.

I wonder how convincing that could be.

One point about “financial advisors” is that it’s been known for a long time that index funds are better than any financial advisor/stockbroker. But financial advisors don’t get commissions for telling people to buy index funds, so they BS something fierce.

But I think the critical point here is: “Using statistical patterns to create the illusion of human-like conversation is fundamentally different from understanding what is being said.”

Exactly. This is something I’ve said before (in particular that this whole area is fundamentally uninteresting, and equivalent to Markov-chain models from the 1990s). These things have no model of understanding, and thus are nothing more than parlor tricks.

(One of the things we figured out early on in AI, is that words in English are ambiguous and have to be disentangled by actually understanding the context. We tried and failed. But at least we tried. Now AI has given up trying and taken to praying that they can find ways to get answers without doing the work of understanding understanding.)

(Of course, this gets back to my claim that the current round of AI is a kind of religion. These people really seem to believe that “intelligence” will emerge if they do a large enough number of stupid calculations per second. Believe. That’s religion, not science.)

And, grumble, as before, the actual Turing test (as described by Turing) is subtle and involves not just understanding, but empathy as well. It’s not about tricking people, it’s about asking how well the machine performs in a game that requires understanding and empathy.

I completely agree with the “fundamentally uninteresting” characterization.

There is no path by which a magician gets better and better at sleight of hand until at some point there’s a breakthrough and he can suddenly materialize actual coins out of thin air. Yet that’s what the AI hype expects us to believe will happen with this sort of Eliza stuff.

I also agree with the fundamentally uninteresting claim – but perhaps for different reasons. I don’t expect computers to “understand” anything in the sense that we believe humans do. But I also don’t know what it means for a human to “understand” something. After 45 years of teaching, I still can’t tell whether a student actually understands something.

What I find more interesting is whether (and under what conditions) these “parlor tricks” work as well as the humans they seek to emulate. The chatbot may not understand what I am asking just like the human answering a helpline may not understand – but my interactions with them can differ, and my experience of the interactions also varies.

My personal feeling is that I don’t want a chatbot to fool me into thinking it is human (even though I think they are capable of doing so). I find that disturbing, and if that is the goal of its creators then I think it is a goal they should re-evaluate. That may be why I have such a distaste for Alexa et al. What is so desirable about having an AI, voice, chatbot, etc. seem human when it is not? For that matter, too many humans appear to act as if they are machines – either through biological programming or brainwashing. That is also quite disturbing. Too many interactions, whether they are with people or chatbots, are already mechanized and dehumanizing. Is AI research contributing to this or part of the path to changing it?

> After 45 years of teaching, I still can’t tell whether a student actually understands something.

This is interesting. I propose a different test for the “understanding” of AI. I think this process as has been implemented – of asking questions and evaluating the answers – is really not sufficient for the task.

When working with students, the check I use for understanding is to ask students to apply the information, in some creative fashion, in a way that demonstrates a mastery of the concept.

For example, if you understand what I just wrote, can you come up with an example for how that might be done?

Often, a student might make an attempt that doesn’t work – in which case we can deconstruct what the misunderstanding is as to why their attempt doesn’t fulfill the requirement.

Often times in that process, I will come to see that in my explanation I made wrongful assumptions about what the student should or shouldn’t see as axiomatic – in which case I need to go back to a more fundamental starting point.

And of course, I have to allow for the possibility that what I just explained actually doesn’t make sense, in which case the student obviously couldn’t come up with an example; then we could have a discussion about how to establish what I said didn’t make sense.

Also,

> it’s been known for a long time that index funds are better than any financial advisor/stockbroker.

I think that’s too broad. We know that on average index funds are better than financial advisors – that doesn’t mean that there aren’t any financial advisors that outperform index funds, right? Or do you think that would always only be a product of sample size, and that eventually index funds would outperform ALL financial advisors?

BTW, as an aside:

The most striking example of where I made wrongful assumptions about what’s axiomatic for students, was once when I was trying to teach English to immigrants who were illiterate and had never spent much time at all in formal schooling.

For them, the entire process of leaning by instruction, as a didactic process – as opposed to by modeling and then “doing,” was largely foreign. Following from that, the very paradigm of abstracting concepts to explain them was not something that made a whole lot of sense to them. Further, I quickly began to see that my assumptions about the relationship between written symbols and sounds, or even concepts, was an obstacle in teaching English to those students. Over and over, I would use approaches that I had used many times with students at all levels, only to come to see that I needed to restructure my approach after extracting assumptions I had made but which weren’t valid. It was an amazing learning experience for me.

Since someone asked, here’s what Saint Google has to say:

“Since 2002, S&P Dow Jones Indices has published its S&P Indices Versus Active (SPIVA) scorecard, which compares the performance of actively managed mutual funds to their appropriate index benchmarks. In 2021 SPIVA report shows that 79.6% of all actively managed U.S. stock funds underperformed their index.

Over the last 10 years, 86.1% underperformed, and over the last 20 years, 90.3% of actively managed U.S. stock funds have underperformed their index.”

Since Dale asked: “But I also don’t know what it means for a human to “understand” something.”

Exactly. Despite your (and my!) cheap shots against college students and other humans, human understanding is seriously amazing.

IMHO, the blokes who talk about an upcoming AI “singularity” are ridiculous not just because current AI is silly, but because the singularity already happened and they missed it. Animals do a lot of reasoning sourts of things (count up to small numbers, for example (causing philosophers to scream “Animals can do Math”)), but that stuff is a hideously poor distant cousin to human intelligence. I’ve mentioned this before, but other than humans, there are no animals on this planet that can do the logical reasoning that sex causes pregnancy and pregnancy is followed by childbirth. None, zero, zilch*. The reasoning about individuals and relatives that we do every day is completely absent in the rest of the animal kingdom. To what extent any other animal can “understand” that some other animal is “the same kind of” or “a different kind of” animal is pretty clearly an iffy question. We can pretend to argue about whether or not whales are fish, but (according to a review of a book about him in Science) Hermann Mellville largely got the biology of whales mostly correct (other than inventing a bunch of whales that never existed). Well before most of modern biology was established. Some humans are smart!

Sure, humans get stuff wrong all the time. But when they get it right (e.g. the Standard Model), it’s pretty amazing.

*: https://aeon.co/essays/i-think-i-know-where-babies-come-from-therefore-i-am-human

Carlos:

The same criticism as I offered to Andrew above: check in with the world outside of statistics PhDs, scientists and their progeny. Ask the same question to clerk at Home Depot. Change “apple stock” to “dodgecoin”. Ask Elon! :)

Human intelligence includes the full range of human intelligence, or what part of it? Just the upper end? Just “complete honesty and integrity” end? Apparently, like many people, the bot doesn’t have an integrity function.

That’s very low quality bullshitting – repeating the same patterns and contradicting what was just said. I guess that the implementation -unlike the marketing hype- doesn’t even pretend that there is an “it” in it and each interaction is independent of everything else without keeping any sort of state. Or maybe they try and it fails big time.

But sure, if you lower the bar enough replying potato to every question can be a resounding success! After all it could mean that “it” has an intelligent evil plan that involves looking brainless to fool us. Just like all that nonsense could be just due to the -undisputed- lack of honesty and integrity.

“That’s very low-quality bullshitting ”

I get what you’re thinking but I don’t think your examples have been thought through well enough to provide a real constraint on the responses. What does “telephone numbers increasing” mean? You obviously intended it as a ridiculous or illogical statement, but there are tons of scenarios in which telephone numbers could be arranged to be “increasing”. It’s not an explicitly illogical statement. The bot didn’t ask for clarification, but humans often don’t ask for clarification either, that’s why miscommunication is common.

I’m not saying that the bot is intentionally bullshitting. It’s just providing the best response it can because its instructions are to answer the question, apparently not to ask questions about what it doesn’t know. But that response isn’t outside the realm of a human response and really its an excellent response. It doesn’t confront you on your claim about increasing telephone numbers, it accepts that at face value, but then it gives you an equivocal statement in response, saying nothing concrete about the relationship between stock prices and telephone numbers. Everything is “might” or “if” or “could be”. Actually it’s not that different from lots of research paper titles and conclusions! :) “Stock prices may increase traffic accidents”.

Do an experiment: if you have an entry level undergrad class, put these questions on a test – not all of them on every test, but one on each test. See how the humans perform and compare that to the bot.

This is not true. Its instructions are to generate dialogue that looks like it was written by a human or, more precisely, to match the conditional distribution of future text given past text in the corpus of human written documents. To that end, it does sometimes ask clarifying questions to nonsense questions.

Based on the setup here, it should be aping dialogue from a book or an interview transcript, and as long as it doesn’t look like a person wrote it or dialogue between two people, it is failing at the task given to it.

“ The Brooklyn Dodgers example reminds me of those WW2 movies where they figure out who’s the German spy by tripping him up with baseball questions.”

A questionable strategy perhaps – see

https://www.wired.com/2014/05/the-perils-of-passwords-that-are-seemingly-well-known-facts

Modern appliances I have used (from the past 10 years or so) are actually pretty good at self-diagnostics. If they turn on at all, they usually give a meaningful error code that allows the repair service to make an educated guess (and make sure they bring the relevant part with them).

Instead aiming to automate statistical practice, which may be pretty tricky, I think we could automate a large part of the criticism (review) of statistical practice already. Eg for a Bayesian model, given a way to simulate and estimate it, it could go on a fishing expedition with generating a bunch of pp checks and other validation exercises based on ML or whatever works. A human could then just review the results and decide whether they are relevant, if not, no harm done, other than wasting a bit of energy on computation.