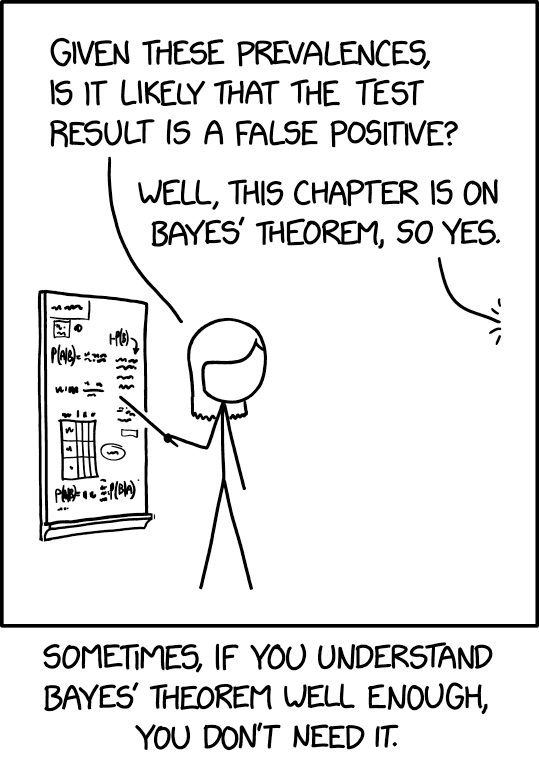

Bill Harris points to this cartoon from Randall Munroe. I think he (Munroe) is really on to something here. Not about Bayes’s theorem but about the sorts of examples that are in textbooks.

Consider the following sorts of examples that I’d say are overrepresented in textbooks (including my own; sorry!):

– Comparisons and estimates where the result is “statistically significant” and the 95% interval for the effect of interest excludes zero.

– Simpson’s paradox situations where adjusting for a factor changes the sign of an effect.

– Regression discontinuity analyses where there’s a pleasantly smooth and monotonic relationship between the predicted outcome and the running variable.

– Instrumental variables analyses where the instrument is really strong.

– Probability examples that are counterintuitive.

In short: in a textbook, most of the examples work. And when they don’t work, the failure illustrates some important point.
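To make the Simpson’s paradox item concrete, here is a minimal sketch in Python. The strata names and all counts are made up for illustration and are not taken from any study. It shows the kind of tidy example a textbook would pick: the treated group does better within each stratum, yet worse when the strata are pooled.

```python
# Hypothetical (successes, trials) counts, invented purely for illustration.
data = {
    "mild":   {"treated": (90, 100),   "control": (800, 1000)},
    "severe": {"treated": (300, 1000), "control": (20, 100)},
}


def rate(successes, trials):
    """Success rate as a fraction."""
    return successes / trials


def difference(counts):
    """Treated success rate minus control success rate."""
    return rate(*counts["treated"]) - rate(*counts["control"])


def pooled(strata):
    """Add up successes and trials across strata, separately for each arm."""
    totals = {}
    for arm in ("treated", "control"):
        successes = sum(stratum[arm][0] for stratum in strata.values())
        trials = sum(stratum[arm][1] for stratum in strata.values())
        totals[arm] = (successes, trials)
    return totals


within = {name: difference(counts) for name, counts in data.items()}
overall = difference(pooled(data))

for name, diff in within.items():
    print(f"{name}: treated - control = {diff:+.2f}")
print(f"pooled: treated - control = {overall:+.2f}")
```

The sign flip happens because the treated cases are concentrated in the severe stratum, where success is rare for everyone. Real data rarely line up this cleanly, which is the point of the list above.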

What’s going on here? Textbook examples are there to illustrate a method. The recommended approach is to first give two examples where the method works, then one where it fails. But we, the textbook writers, are busy, so we’ll often just give the one that works and stop. As I wrote once, “the book has a chapter on regression discontinuity designs. That’s fine. But all we see in the chapter are successes.”

Then again, on this blog, sometimes all you see are the failures. That’s not representative either.

And then there are some examples such as aggregation and conditional probability where it’s just standard to demonstrate the principle with a surprising example.

As you can see, I have no overarching theory here; it just seems like a topic worth thinking about. Maybe in my next textbook I’ll try to address the issue in some way, maybe just to bring it up to remind the readers about this inevitable selection problem. I’m also reminded of the famous book, “What They Don’t Teach You at Harvard Business School.” Selection creates opportunities!

This is one of the things I really like about Light, Singer, and Willett’s book “By Design: Planning Research on Higher Education” – they often chose examples that were clear illustrations of a methodology but which had some illustrative difficulty or flaw. The overarching picture they presented of research was one of smart people trying their best under imperfect situations to study something close to what they wanted to investigate, using the best methods their circumstances would allow. That was so helpful to me as a graduate student.

This is worth pointing out. Many of my new hires have a lot of holes in their math/stats education. For instance, they know Bayesian inference but they’re unfamiliar with any type of sampling. So, my company, through necessity, teaches courses on the basics.

The courses are run through counterexamples in textbooks. That is, they learn the theorems/methodology and then implement it. Then, they have to disprove it. This is typically what they have to do on their own. Demonstrate when it fails and explain why.

What company do you work at?

At least share industry! This is interesting

My company makes software for telecoms and may start manufacturing some telecom equipment.

My stats professor in business school used to say that if he were writing a textbook, the problems at the end of each chapter would be a random mix of these:

1. Problems that were directly relevant to the material presented in the preceding chapter.

2. Problems that could be solved using just the material in earlier chapters.

3. Problems that could only be solved using material presented in subsequent chapters (presumably, students would be expected to answer, “This problem can’t be solved using the techniques we’ve learned thus far”, or some such thing).

I’m surprised that nobody has mentioned “Counterexamples in Probability” by Stoyanov yet…

I have always used messy examples in teaching that don’t neatly illustrate a method’s success. And I’ve written several textbooks – virtually none of the examples (assignment questions rather than illustrative examples) are “clean” successes. I think this is increasingly important – there is no such thing as simple data, or rather, the only simple data students will ever see is in their textbooks. The more you use real data, the less likely you are to fall into that trap. I also like the Tufte quote (paraphrased as) “data is almost always multivariate.” So many texts build things up from single-variable analyses to bivariate analyses that the true multidimensional picture ends up looking like a special case or a more advanced topic, rather than the reality it actually is. It’s about time that we start using multidimensional data from the very beginning – if we do that, we are not likely to end up with overly simplified examples that always “work.”

Thanks for bringing up one of my favorite pedagogy issues. I understand why it’s important to have schematic, just-so examples to illustrate abstract points; in fact, “reform” methods in quant teaching begin with such examples and work backward to the general principles. So far so good.

The problem is that the real world is messy. It’s not just about not knowing in advance whether an analytical move is correct or incorrect, although that’s one aspect. The biggest problem is the ambiguous situation, where some conditions are met and others sort of met, or when proxy measurements are sort of good but not perfect, or when different modeling strategies each have something to recommend them but also some possible flaws, etc. In my experience (as an economist with sidelines in health and environmental analysis), that’s a lot of what students will have to deal with when they leave the classroom. The highest purpose of education is to prepare them to navigate their way thoughtfully through the wicked and semi-wicked problems ahead.

And so there should always be ambiguous examples, the kind where different judgments of their credibility and limitations can be debated. In a flipped classroom that should be a primary activity. It can be set up by giving students quick (e.g. internet researchable) problems and data sources and then asking thorny questions.

Incidentally, one advantage of posing problems that don’t have clear cut analytical solutions is that it encourages confronting the analytics with contextual information. My approach was to push case studies (single digit N) with thick description as aides to grappling with analytical ambiguity.

I’ll admit that this pushing of ambiguity and how to deal with it was not so great for my teaching evaluations: there were always some students who objected to it because they wanted the shortest distance to high test scores and the ability to say they “learned” the topic, and I don’t remember a single evaluation that singled out my use of these kinds of problems as a reason for an endorsement. I don’t think there are any incentives for taking this route except the intrinsic ones.

Echoing your comment: I used to teach cost-benefit analysis and used a great British textbook (no longer in print) by Sugden and Williams. One thing that made it great was that the exercises were all messy, complex, illuminating, challenging, and realistic. The worst teaching evaluations I ever got. The most I ever learned teaching a course. Something is wrong with that picture. Perhaps more than one thing.

One year I tried teaching introductory freshman microeconomics with a running theme that involved buying a watch from a guy out of the back of a truck. Instead of discussing each concept with a different example, I used it to try to show how a lot of the theorems have transaction-cost issues that make application to the real world not quite as straightforward as it looks. I thought it was great… the students, not so much.

The bottom line is that one should teach a life cycle view of statistics: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=2315556

While this is surely a fun example in the context of teaching statistics, I think significant problems with pedagogy permeate all disciplines. Teaching is a hard enterprise, especially since “teaching well” is only ever loosely defined and has an ever-shifting definition depending on the circumstance.

I think a not insignificant share of the blame for our pedagogical woes lies with the nature of the academy itself. In my estimation it seems much more geared towards preparing students for higher-level study, where the cat is finally let out of the bag that things are not as clean as they were first presented. Trouble is, most people don’t get to that point, and to the extent that they do, the pressures of publish/perish force students to learn what they need to do to succeed as an academic, which is not necessarily the same as producing useful contributions. Though this problem may be acute in the social sciences, it is by no means limited to them. Feynman discussed this issue in some detail when he visited schools around the world, only to find that many students were masters of textbook physics but couldn’t apply it to simple problems / examples in the real world. And in my own experience / discipline, structural engineers tend to be very maleducated even when it comes to their own field of expertise.

This is a real problem and I suspect a major barrier to change is student expectation. As Dale and others shared above, heroic attempts to get students to think / learn the practicalities of their subject often lead to poor reviews. Students expect high grades or at least a pathway where hard work + some intellect = high grades, but those expectations are hard to meet when one does not have an exact roadmap to provide them. Daniel Lakeland has discussed his experience as a TA on this blog several times and shared similar experiences.

If student expectations were recalibrated towards expecting to learn “useful” things instead of simply achieving high grades, I would think the situation would largely be reversed: teaching students things that are not really practical would be met with harsh criticism, and practical teaching would be met with praise. This comes with its own set of problems, but I think it would be much better than the current situation.

We teach our students that school is separate from the real world. And by doing this we are doing a service to most of our students who do not become scholars but rather moms/dads/taxpayers just trying to go about life.

> learn “useful” things instead of simply achieving high grades

That is what I tried to convince my daughter to do when she was in university, instead she focused on high marks and got the highest mark in her program and second highest in her college.

Then about 4 years after leaving university and running her own small businesses, she mentioned to me that she wished she had focused on learning “useful” things.

Not sure the same advice would be the best for those who stay in academia. When I was in university I could not have cared less about getting marks higher than I needed to continue. That really surprised many faculty, at least at first.

Indeed, I had some sort of B+ average as an undergrad. I focused on understanding the topics I cared about, and passing the things that were pure requirements, like a year of foreign language or a class in English Literature.

My impression is that grades have gotten even more inflated, and that you can pass an undergrad degree these days without actually understanding even basic stuff, provided for a few weeks you can parrot it back.

“We teach our students that school is separate from the real world. ”

That wasn’t my experience at all in 8+ yrs in academia. It *is* true that many students *believe* that what they are taught in school is separate from the real world, but I’m not sure why. I didn’t get that at all. I’m a geologist and for all the methods we learned the assumptions that are required to make a method valid were drilled into our heads. That’s why you don’t see claims that the earth is 100ga- or 5ka-old in the geologic literature every month, while fantastic claims circulate frequently in the social sciences.

There’s nothing wrong with teaching the successful examples, but you have to include and stress the assumptions that must be met for the method to be valid. There’s a significant list of assumptions that aren’t even mentioned in most social science studies, let alone justified. A common factor that’s overlooked in many social science studies is whether the study population is representative of the real world or not. Every study should have to justify the choice of the sample – which would rule out a lot of “my 9am class” studies! Tons more, I won’t bother…

Yes. During the lectures in my long-ago statistics class, I often raised objections using what I learned later in computer science were “edge” conditions. Usually, the statistics failed in these contexts. To his credit, the instructor usually acknowledged my observations.

Later I came to understand that statisticians usually know how to use their tools and avoid these situations, and I went on to test algorithms with edge conditions – a standard practice in computer science.

I unfortunately have to teach NHST. A lot of students get really confused when they’re doing a one-tailed t-test and the sample is in the wrong direction. (So I always try to include a few of these in class and assignments!) I’ve also noticed the vast majority of textbook problems result in p-values that are really close to 0.05. I assume this is so that they’re more interesting to look up in a t-table? But again, students get really confused when they get p=0.73 or t=17… (So I try to include some of these, too.)
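A small sketch of the wrong-direction case may help. The data are made up, `one_sided_p_greater` is a hypothetical helper name, and a normal approximation stands in for the t distribution only to keep the example inside the Python standard library, so the exact p-value would differ somewhat from a t-table for a sample this small. When the sample mean falls on the opposite side of the null from the alternative, the one-sided p-value comes out above 0.5:

```python
from math import sqrt
from statistics import NormalDist, mean, stdev


def one_sided_p_greater(sample, mu0=0.0):
    """Test H1: population mean > mu0.

    Returns the usual t statistic and an approximate one-sided p-value.
    The p-value uses the standard normal CDF as a stand-in for the
    t distribution (an assumption made for this sketch).
    """
    n = len(sample)
    standard_error = stdev(sample) / sqrt(n)
    t_stat = (mean(sample) - mu0) / standard_error
    p_value = 1.0 - NormalDist().cdf(t_stat)
    return t_stat, p_value


# Made-up sample whose mean is below mu0, i.e. in the "wrong" direction
# for the alternative hypothesis mean > 0.
sample = [-1.2, -0.4, -0.9, 0.3, -1.5, -0.7, -0.2, -1.1]
t_stat, p_value = one_sided_p_greater(sample)
print(f"t = {t_stat:.2f}, approximate one-sided p = {p_value:.3f}")
```

Because the t distribution is also symmetric about zero, the qualitative result (a negative statistic gives p above 0.5 for a "greater than" alternative) carries over to the exact test.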

As for the too-clean examples, I wonder if that’s a habit from how things are taught in other fields? That’s exactly what we do in Physics, but it *works* because we’re (usually) explicit about what our simplifications are. Plus it’s often a lot easier in physics to understand the simplified model and then figure out how to modify it than it is to dive straight into the messy version. That’s probably true in Stats, too, but I’m guessing part of the problem is most people only get one semester of it, so there’s not much time to build up models to handle messier situations. Do more advanced Stats courses do this sort of thing?

” it *works* because we’re (usually) explicit about what our simplifications are. ”

Exactly – people have to know what assumptions / simplifications are required to make a test valid, and they have to address those assumptions and simplifications. No one in physics would publish some fantastic story that was based on an experiment that obviously violated all the required assumptions, because it would be shot down in a heartbeat. But people publish studies that do that all the time in the social sciences! It’s ridiculous. There’s no security at the door. The cranks just walk in and do what they want.

When I was in academia, I would often give a new grad student an analysis to do. And they would promptly disappear. When I finally found them, I would find out that they were struggling because they could not find a significant relationship in the data. This would lead to a long talk about (1) how, by the time a problem gets to me, the easy answers are all gone, (2) how textbook problems always lead to statistically significant relationships, but textbooks are not real life, and (3) why you shouldn’t go too long without making forward progress before asking for help or at least checking in.
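A rough simulation sketch of point (2), under assumptions chosen only for illustration: a small true effect of 0.1, a standard error of 0.5, normally distributed estimates, and a two-sided 5% threshold. The function name `simulate` and every number here are hypothetical. In this setup most analyses do not reach significance, and the ones that do overstate the effect considerably:

```python
import random
from statistics import mean

TRUE_EFFECT = 0.1     # assumed small true effect (illustrative)
STANDARD_ERROR = 0.5  # assumed standard error of each estimate (illustrative)


def simulate(n_studies=10_000, seed=1):
    """Draw one noisy estimate per study and keep those with |estimate| > 1.96 SE."""
    rng = random.Random(seed)
    estimates = [rng.gauss(TRUE_EFFECT, STANDARD_ERROR) for _ in range(n_studies)]
    threshold = 1.96 * STANDARD_ERROR
    significant = [e for e in estimates if abs(e) > threshold]
    return estimates, significant


estimates, significant = simulate()
share_significant = len(significant) / len(estimates)
mean_abs_significant = mean(abs(e) for e in significant)

print(f"share of studies reaching significance: {share_significant:.3f}")
print(f"mean |estimate| among significant studies: {mean_abs_significant:.2f}")
print(f"true effect: {TRUE_EFFECT}")
```

A textbook that shows only the significant cases is, in effect, sampling from the second list rather than the first.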

“That’s why you don’t see claims that the earth is 100ga- or 5ka-old in the geologic literature every month”

Would a geologist as late as the 1960s claim that continental drift was pseudoscience because it violated the geological assumptions of the day?

“No one in physics would publish some fantastic story that was based on an experiment that obviously violated all the required assumptions, because it would be shot down in a heartbeat.”

Is it too discomfiting to suggest that this says more about physicists than physical reality?

Two little words.

Cold. Fusion.

The interesting thing about cold fusion, as I understand it, is that for thirty years people have been replicating the anomalous behavior but no one can explain what is actually going on.

“Would a geologist as late as the 1960s claim that continental drift was pseudoscience because it violated the geological assumptions of the day?”

No.

“Is it too discomfiting to suggest that this says more about physicists than physical reality?”

I don’t get it — what do you think this says about physicists?