Under the subject line, “A potentially dubious study making the rounds, re police shootings,” Gordon Danning links to this article, which begins:

Police use of force is a controversial issue, but the broader consequences and spillover effects are not well understood. This study examines the impact of in utero exposure to police killings of unarmed blacks in the residential environment on black infants’ health. Using a preregistered, quasi-experimental design and data from 3.9 million birth records in California from 2007 to 2016, the findings show that police killings of unarmed blacks substantially decrease the birth weight and gestational age of black infants residing nearby. There is no discernible effect on white and Hispanic infants or for police killings of armed blacks and other race victims, suggesting that the effect reflects stress and anxiety related to perceived injustice and discrimination. Police violence thus has spillover effects on the health of newborn infants that contribute to enduring black-white disparities in infant health and the intergenerational transmission of disadvantage at the earliest stages of life.

My first thought is to be concerned about the use of causal language (“substantially decrease . . . no discernible effect . . . the effect . . . spillover effects . . . contribute to . . .”) from observational data.

On the other hand, I’ve estimated causal effects from observational data, and Jennifer and I have a couple of chapters in our book on estimating causal effects from observational data, so it’s not like I think this can’t be done.

So let’s look more carefully at the research article in question.

Their analysis “compares changes in birth outcomes for black infants in exposed areas born in different time periods before and after police killings of unarmed blacks to changes in birth outcomes for control cases in unaffected areas.” They consider this a natural experiment in the sense that dates of the killings can be considered as random.

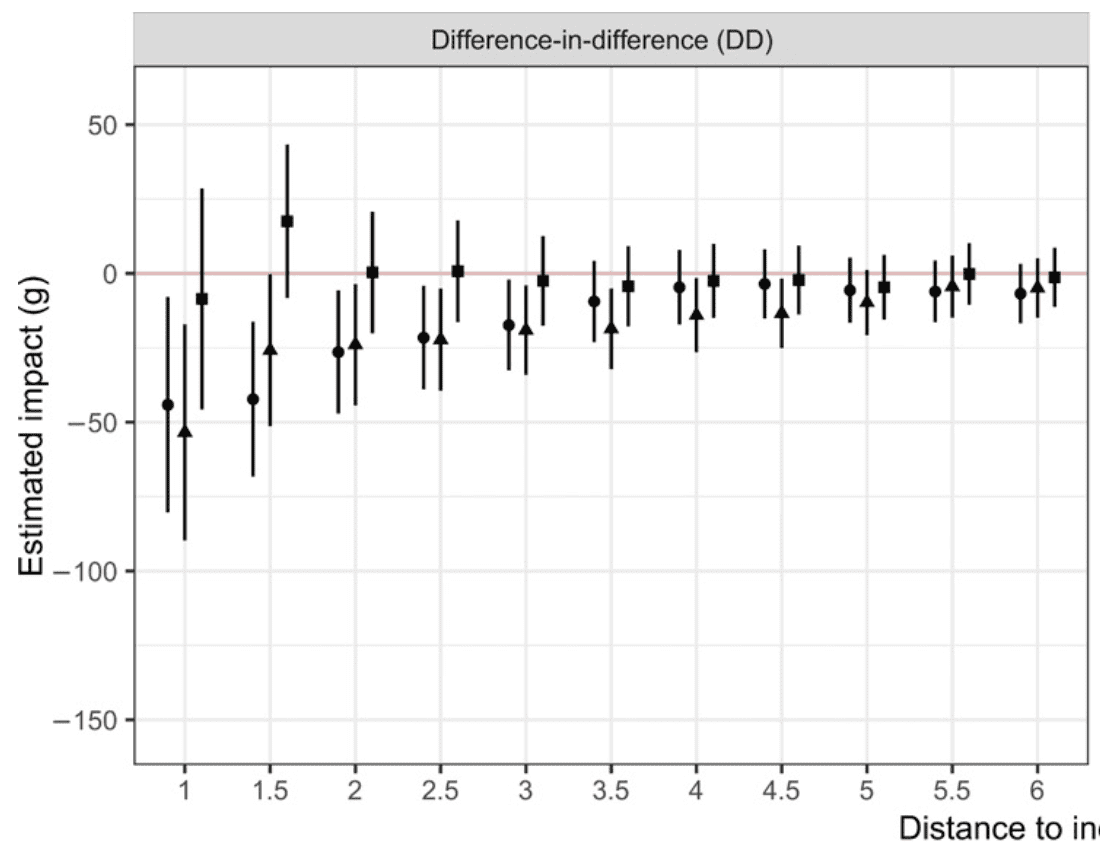

Here’s a key result, plotting estimated effect on birth weight of black infants. The x-axis here is distance to the police killing, and the lines represent 95% confidence intervals:

There’s something about this that looks wrong to me. The point estimates seem too smooth and monotonic. How could this be? There’s no way that each point here represents an independent data point.

I read the paper more carefully, and I think what’s happening is that the x-axis actually represents maximum distance to the killing; thus, for example, the points at x=3 represent all births that are up to 3 km from a killing.
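To see why cumulative thresholds produce such a smooth curve, here is a minimal simulation sketch. It is not the paper's model: the ring sizes, the noise level, and the function name `simulate` are all made up for illustration, and the true effect is set to zero at every distance. The point is only that each "up to x km" estimate reuses all the data from the smaller thresholds, so neighboring points cannot move independently.

```python
import random
import statistics


def simulate(seed=0, n_per_ring=200, n_rings=5, noise_sd=400.0):
    """Simulate birth-weight deviations (grams) with NO true effect anywhere.

    Ring k holds births between k and k+1 km from an event (hypothetical
    setup). Returns the per-ring means and the cumulative "up to x km" means.
    """
    rng = random.Random(seed)
    rings = [
        [rng.gauss(0.0, noise_sd) for _ in range(n_per_ring)]
        for _ in range(n_rings)
    ]
    ring_means = [statistics.fmean(r) for r in rings]

    cumulative_means = []
    pooled = []
    for r in rings:
        pooled.extend(r)  # each threshold reuses every closer birth
        cumulative_means.append(statistics.fmean(pooled))
    return ring_means, cumulative_means


if __name__ == "__main__":
    ring_means, cumulative_means = simulate()
    for km, (ring, cum) in enumerate(zip(ring_means, cumulative_means), start=1):
        print(f"<= {km} km: ring mean {ring:8.2f}   cumulative mean {cum:8.2f}")
```

With equal-sized rings, the cumulative mean at threshold k moves toward the newest ring's mean by only a 1/k fraction of the gap, so the plotted sequence drifts slowly even when the underlying rings are pure noise.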

Also, the difference between “significant” and “not significant” is not itself statistically significant. Thus, the following statement is misleading: “The size of this effect is substantial for exposure during the first and second trimesters. . . . The effect of exposure during the third trimester, however, is small and statistically insignificant, which is in line with previous research showing reduced effects of stressors at later stages of fetal development.” This would be ok if they were to also point out that their results are consistent with a constant effect over all trimesters.
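A quick arithmetic sketch of that point, using made-up numbers rather than anything from the paper: suppose one trimester's estimate is 25 with standard error 10, and another's is 10 with standard error 10. The first is "significant" and the second is not, but the comparison between them is nowhere near significant. The helper names below are hypothetical, and the calculation assumes the two estimates are independent.

```python
import math


def z_score(estimate, se):
    """Estimate divided by its standard error."""
    return estimate / se


def difference_z(est_a, se_a, est_b, se_b):
    """z-score for the difference of two estimates, assuming independence."""
    diff = est_a - est_b
    se_diff = math.sqrt(se_a**2 + se_b**2)
    return diff / se_diff


if __name__ == "__main__":
    # Hypothetical estimates, not taken from the paper.
    early = (25.0, 10.0)
    late = (10.0, 10.0)
    print("early z:", z_score(*early))
    print("late z:", z_score(*late))
    print("difference z:", round(difference_z(*early, *late), 2))
```

The difference is 15 with a standard error of about 14, so data like these are entirely consistent with a constant effect across trimesters.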

I have a similar problem with this statement: “The size of the effect is spatially limited and decreases with distance from the event. It is small and statistically insignificant in both model specifications at around 3 km.” Again, if you want to understand how effects vary by distance, you should study that directly, not make conclusions based on statistical significance of various aggregates.

The big question, though, is do we trust the causal attribution: as stated in the article, “the assumption that in the absence of police killings, birth outcomes would have been the same for exposed and unexposed infants.” I don’t really buy this, because it seems that other bad things happen around the same time as police killings. The model includes indicators for census tracts and months, but I’m still concerned.

I recognize that my concerns are kind of open-ended. I don’t see a clear flaw in the main analysis, but I remain skeptical, both of the causal identification and of forking paths. (Yes, the above graphs show statistically significant results for the first two trimesters for some of the distance thresholds, but had the results gone differently, I suspect it would’ve been possible to find an explanation for why it would’ve been ok to average all three trimesters. Similarly, the distance threshold allows lots of places to find statistically significant results.)

So I could see someone reading this post and reacting with frustration: the paper has no glaring flaws and I still am not convinced by its conclusion! All I can say is, I have no duty to be convinced. The paper makes a strong claim and provides some evidence—I respect that. But a statistical analysis with some statistical significance is just not as strong evidence as people have been trained to believe. We’ve just been burned too many times, and not just by the Diederik Stapels, Brian Wansinks, etc., but also by serious researchers, trying their best.

I have no problem with these findings being published. Let’s just recognize that they are speculative. It’s a report of some associations, which we can interpret in light of whatever theoretical understanding we have of causes of low birth weight. It’s not implausible that mothers behave differently in an environment of stress, whether or not we buy this particular story.

P.S. A while after writing this post, I received an update from Danning:

There were apparently data errors in the paper, and the author has requested a retraction. I followed the link, where the author of the original paper wrote:

After publication, a reader discovered classification errors in the openly shared data. After learning about errors, I conducted a thorough investigation focusing on a larger sample of cases that revealed more errors that are the result of 2 problems 1) Carried over from orig FE data, which I should have fixed. 2) Incorrect coding of armed cases as unarmed through Mturk because of a bug in my html code used for the initial coding scheme (later coding relied on different forms). A reanalysis of the data leads to revised findings that do not replicate the results in the original paper.

I can sympathize. It’s happened to me.

Data issues with this particular paper aside, I don’t think it’s at all frustrating for you to both a) confirm that a paper uses solid methodology and reasonable theory, and b) criticize its description/interpretation of results for being overly causal, relying too much on statistical significance, and failing to sufficiently emphasize their tentativeness without replication. A paper isn’t just a bunch of statistics and graphs. It’s also the set of theoretical inferences made from the statistical conclusions. Researchers have to do both well, although yeah, start with the solid methodology and theory–the most careful interpretation of bad data and faulty analysis is useless.

So the bad data was so strongly skewed towards the effect that it made the effect statistically significant across the entire dataset? I want to see how that sausage was made.

Very interesting. As I understand it, a large effect is about 20-25 grams on average so 50 would have been enormous! Glad to hear the investigator did the right thing when finding an error. Thanks for taking your valuable time: this is my favorite blog!

Once again, the importance of data sharing. If this data wasn’t open or shared, no one would have found these mistakes. The more I see things like this caught when publications are based on open/shared data, the less I’m inclined to trust publications based on data that isn’t available for others to review.

+1000.0253

The Mturk thing is pretty interesting. My first reaction was, wow, this person is really having to do a lot of work! Finding documents, setting up the Mturk, getting people to go through and read them, pulling this in and doing some stats on top of that and having a social science thing to say… It’s a lot of stuff.

But it sounds like (I didn’t go back and double check exactly what the error was or how it was discovered) if this person hadn’t been in control of the whole pipeline they’d never have been able to diagnose the problems (to realize there was a mistake in the Mturk stage).

> later coding relied on different forms

This is interesting too — sounds like a review process changed and some data was updated to a new format? Along with all the HTML and whatnot, that’s a lot of moving pieces, but I guess at least the pieces were trackable enough that the check was possible.

Study idea: It would be interesting to incorporate open-science predictors like “shared data” and “shared code” in meta-analyses. You’d expect them to be predictive of more conservative effects, of course, but the precise way that effects cluster with other factors could be very informative, in comparison with (say) the file drawer problem. We’re used to talking about bias from missing papers locked in drawers, but here we’re talking about bias from published papers based on data locked in file drawers. The open science movement is likely to make the latter problem (and possibly others) more important (because people who strongly resist the movement may be more likely to use flawed data) and more detectable (because we now have a comparison group).

Maybe something like this has been/is being done already–I’d be surprised if preregistration hasn’t been used as a predictor, for example, either to argue for or against its value.

A moot point now, but I would guess there might be a confound where children living closer to the locations where someone got shot might be exposed to more Adverse Childhood Experiences (ACEs) generally – and this could explain the association between low birth weight and proximity to shootings. IOW, the shootings are a proxy for the effect of geographical location.

Well, not really the effect of geographical location, but the effect of more ACEs (in association with living in a more dangerous neighborhood).

I think you mean: when life is tough all-around, there are both shootings and difficult births. Sounds plausible to me. But I’ve only got a Masters Degree …. in “science”.

Thanks for translating into English.

Location is controlled for with a fixed effect:

“The model includes indicators for census tracts and months, but I’m still concerned.”

The concern is that there is something at the tract-month level that explains both shootings and birth outcomes, like local economic/employment shocks.

The graph is more difficult to interpret than it need be, because any causal effect is entirely overshadowed by a completely predictable change in the variance of the estimates as distances increase – the number of people within a ring of X km increases linearly with X so the precision of the estimates also increases. Surely better would be to divide the observations into rings of equal population density.
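A rough sketch of that precision argument, under the simplifying (and surely unrealistic) assumption of uniform population density: a ring of fixed width holds a number of people that grows roughly linearly with radius, while a cumulative disc of radius X grows with X squared, so its standard error shrinks like 1/X. The density value and function names are hypothetical.

```python
import math


def ring_area(inner_km, outer_km):
    """Area in km^2 of the annulus between two radii."""
    return math.pi * (outer_km**2 - inner_km**2)


def relative_se(n):
    """Standard error of a mean relative to sd = 1, for n observations."""
    return 1.0 / math.sqrt(n)


def precision_by_radius(max_km=5, density_per_km2=100.0):
    """Rows of (radius, n in 1-km ring, n in disc, ring SE, disc SE)."""
    rows = []
    for r in range(1, max_km + 1):
        n_ring = density_per_km2 * ring_area(r - 1, r)
        n_disc = density_per_km2 * ring_area(0, r)
        rows.append((r, n_ring, n_disc, relative_se(n_ring), relative_se(n_disc)))
    return rows


if __name__ == "__main__":
    for r, n_ring, n_disc, se_ring, se_disc in precision_by_radius():
        print(f"{r} km: ring n={n_ring:8.0f} se={se_ring:.4f}   "
              f"disc n={n_disc:8.0f} se={se_disc:.4f}")
```

Either way the intervals narrow with distance for purely mechanical reasons, which is separate from any change in the effect itself.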

Comparison with studies on other events, even completely disconnected events, such as lottery wins, car accidents, non-police involved shootings… would also make it easier to assess the graph shown.

All other issues aside, the big problem with this entire approach is that it assumes that nothing else affects birthweight!! Or rather that the effect of police shootings is so large that it outweighs every other birthweight effect. The chances that this analysis would actually find such an effect even if it were real are laughably small, because the effect would have to be massive to outweigh the dozens of other – almost certainly much larger – factors that affect birthweight.

Anon:

Yup, it’s the piranha problem.

Anon *

> it assumes that nothing else affects birthweight

Well, seems to me that it assumes proximity to shootings would outweigh all other birth weight factors associated with location, no?