This is Jessica. A couple weeks ago Priyanka Nanayakkara pointed me to the fact that Alabama is suing the Census Bureau on the grounds that by using differential privacy it is “intentionally skew[ing] the population tabulations provided to States to use for redistricting” and “forc[ing] Alabama to redistrict using results that purposefully count people in the wrong place,” and other states are backing the case. Maybe it will even escalate to the Supreme Court. A lot of this has been public news for months now but continues to attract attention, e.g., this week in the Washington Post. I haven’t followed all of the details of the Alabama case but have a few general thoughts from conversations with Priyanka about the recent headlines and my experiences with differential privacy for some research we’ve been doing. It’s an interesting but challenging approach.

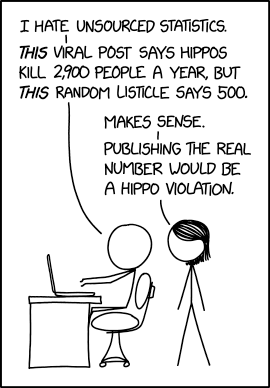

Today’s xkcd.

First, I’m not really surprised to see implications of differential privacy being fiercely debated, since my impression has been that the concept raises as many questions in practice as it answers in theory. Differential privacy is not any particular algorithm; rather it’s a mathematical definition of privacy that provides a stability guarantee on the output of a function (i.e., a query calculated on data) based on changes to the input. ε (epsilon) differential privacy is the simplest version, where ε, the privacy budget (typically some small value, e.g., 0.001), bounds how much the probability of obtaining any given query result can differ (by a factor of at most e^ε) when the database contains versus doesn’t contain an individual’s information. A common randomized mechanism for achieving differential privacy is the addition of random Laplace noise to the query result. For more background on the general concept, NIST has a pretty good blog series.
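To make the Laplace mechanism concrete, here is a minimal sketch for a single count query. It assumes that adding or removing one person changes the count by at most 1, so the noise scale is 1/ε. The function name `laplace_count` and the example numbers are made up for illustration; this is not the Census Bureau's implementation.

```python
import numpy as np

def laplace_count(true_count, epsilon, rng):
    """Release a count with Laplace noise calibrated for epsilon-DP.

    Assumes one person changes the count by at most 1, so the
    noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    sensitivity = 1.0
    return true_count + rng.laplace(loc=0.0, scale=sensitivity / epsilon)

# Hypothetical true count of 1000, released at a few privacy budgets.
rng = np.random.default_rng(0)
for eps in (0.01, 0.1, 1.0):
    draws = [laplace_count(1000, eps, rng) for _ in range(5)]
    print(eps, np.round(draws, 1))
```

Smaller ε means a larger noise scale, so the released counts scatter more widely around the true value.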

One of the first questions I had when I learned about it was, How do you choose ε? This is where the elegance breaks down and things start to feel a little wild west. To use differential privacy you have to somehow determine what is an appropriate amount of information to leak/accuracy to lose. This depends first on the type of query, or more specifically its sensitivity – what’s the maximum contribution that can be made to the query result by one single individual. But to determine sensitivity you have to know about the “universe” of possible databases. For instance, to noise a mean, you need to have a sense of the range of values in the population of people whose data might be in the database. For count queries like those the Census deals with most heavily, this is less of a concern since sensitivity is 1 for a count.
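A rough sketch of why the mean is harder than the count: you have to commit to bounds on the values before you can compute a sensitivity. The version below assumes the number of records n is public and that neighboring databases differ by replacing one record, so one person can move the clipped mean by at most (upper - lower) / n. The name `private_mean` and the bounds are illustrative assumptions.

```python
import numpy as np

def private_mean(values, lower, upper, epsilon, rng):
    """epsilon-DP mean via clipping plus Laplace noise.

    Assumes n is public and one record is replaced between neighboring
    databases, giving sensitivity (upper - lower) / n.
    """
    values = np.asarray(values, dtype=float)
    if values.size == 0:
        raise ValueError("need at least one value")
    if epsilon <= 0 or upper <= lower:
        raise ValueError("need epsilon > 0 and upper > lower")
    clipped = np.clip(values, lower, upper)  # enforce the assumed range
    sensitivity = (upper - lower) / clipped.size
    return clipped.mean() + rng.laplace(scale=sensitivity / epsilon)

rng = np.random.default_rng(0)
ages = rng.integers(0, 90, size=500)  # made-up data
# A looser assumed range means more noise for the same epsilon.
print(private_mean(ages, 0, 100, epsilon=0.1, rng=rng))
print(private_mean(ages, 0, 1000, epsilon=0.1, rng=rng))
```

Choosing the bounds too tight biases the answer through clipping; choosing them too loose inflates the noise. That tradeoff is part of the "universe" problem described above.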

To use differential privacy you also have to deal with the fact that ε is a relative measure, bounding the information gain of the user in the worst case, which is the case where the supposed attacker has complete knowledge about all individuals in the universe under consideration but doesn’t know whether a given individual is in the database. This makes the guarantee robust to arbitrary amounts of information on the part of the attacker, and is motivated by results on database reconstruction attacks, which have shown, e.g., that answering O(n log^2 n) aggregate queries (with sufficient accuracy) on a database with n rows is enough to accurately reconstruct the entire database.

But this worst-case guarantee can be hard to translate to real-world settings. For instance, what you might really want is a measure of risk of reidentification of an individual who contributes data. If this risk is too high, privacy is compromised. But going from constraints on this risk to a particular epsilon is complicated again because it depends on this universe of possible values. And maybe the worst case is not the easiest to reason about, and you want to relax your assumptions of the adversary’s information. How to do that is not necessarily straightforward.

If the user has some guidelines from regulation, as in some health settings, that might help by allowing them to back calculate what ε they need to achieve constraints on risk of reidentification. But as Apple aptly demonstrated several years ago by setting what privacy researchers considered an enormous privacy budget, without clear guidelines it’s easy to embarrass yourself. Cynthia Dwork, primary author of differential privacy, has suggested creating a registry of epsilons, which might help people new to using it by providing some sense of convention.

While all this might be hard at the individual query level, you also have to consider the lifespan of the data, since every query leaks a little information. Differential privacy is proposed as part of the reusable holdout approach to the multiple comparisons problem, previously discussed on this blog, which similarly requires assumptions about the extent of querying expected and pushes the hard calls to user-determined parameters.
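One way to picture the lifespan issue is a simple budget tracker. Under basic sequential composition, the ε values of successive queries on the same data add up, so a fixed total budget runs out after enough queries. The class below is a hypothetical sketch (the name `PrivacyBudget` and the numbers are made up), not a description of any production accounting system.

```python
class PrivacyBudget:
    """Track cumulative epsilon under basic sequential composition."""

    def __init__(self, total_epsilon):
        if total_epsilon <= 0:
            raise ValueError("total_epsilon must be positive")
        self.total = total_epsilon
        self.spent = 0.0

    def spend(self, epsilon):
        """Charge one query; refuse it if the budget would be exceeded."""
        if epsilon <= 0:
            raise ValueError("epsilon must be positive")
        if self.spent + epsilon > self.total + 1e-12:
            raise RuntimeError("privacy budget exhausted")
        self.spent += epsilon
        return self.total - self.spent  # remaining budget

budget = PrivacyBudget(total_epsilon=1.0)
for i in range(5):
    try:
        remaining = budget.spend(0.25)
        print(f"query {i}: allowed, remaining budget {remaining}")
    except RuntimeError as err:
        print(f"query {i}: refused ({err})")
```

The hard call is the one the sketch takes as given: how large the total budget should be and how many queries you expect to answer over the life of the data.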

I could go on; for instance I was also surprised when I started reading about differential privacy at the relative lack of treatment of noised uncertainty estimates, given that often you might want to infer parameters using data. At one point when I was puzzled by this, I came across this slide deck by Wasserman which provided some context on how thinking around privacy can differ among those approaching it from a computer science versus statistics perspective, where statisticians would prefer to assume that there will be statistical modeling and you can’t necessarily prespecify it all. Though it’s old (2011) so maybe there’s a more modern view now about the choice between sanitized databases and noised query results.

Despite all my comments about how bewitching differential privacy seems in practice, I don’t mean to say that it’s a worse approach than the alternatives for preserving privacy in Census data. On some level differential privacy seems like the endpoint you arrive at when you want strong privacy guarantees with a minimum of knowledge about the attacker. And I’m too much of a novice on this topic to judge how much better or worse it is than the sort of swapping of counts that the Census reportedly used to rely on. Among the reasons they switched is that an internal analysis by the Bureau found that it was not hard to re-identify a non-trivial portion of the population (17%) from the 2010 Census given the old techniques and available data at the time. Other analyses have estimated larger proportions.

A question that I’ve seen come up in the media coverage is, How bad can it be that personal characteristics encoded in Census questionnaire responses are leaked, at least at an individual level? It doesn’t seem hard to me to imagine how releasing certain characteristics could negatively affect people by being used to target them in various ways, e.g., combinations of gender and sex, depending on how it’s asked, or age. For the recent Census debate, the main concern in the news is related to inaccurate counts leading to missed opportunities for government assistance, and as the quotes at the start of this post imply, about redistricting decisions. I haven’t closely followed the discussion of implications here, but it seems some have argued that certain groups or regional populations could be negatively affected. I’ve seen others argue that for some redistricting concerns there is no real worry because we’re talking about aggregating Census blocks to which random noise has been added. The fact that there is a huge number of ways to aggregate, filter, etc. Census data is part of why differential privacy is hard here, and the Census uses a number of postprocessing steps to try to address inconsistencies that can arise.
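The aggregation argument can be illustrated with a toy simulation. It assumes each block count gets independent Laplace noise with scale 1/ε and ignores the Bureau's postprocessing entirely, which is a big simplification. Under that assumption the noise in a sum of k blocks has standard deviation sqrt(2k)/ε, so it grows much more slowly than the population being summed. The block population of 50 and ε of 0.5 are made-up values.

```python
import numpy as np

def aggregate_noise_std(n_blocks, epsilon):
    """Std. dev. of summed noise for independent Laplace(1/epsilon) draws."""
    return np.sqrt(2 * n_blocks) / epsilon

rng = np.random.default_rng(0)
epsilon = 0.5     # hypothetical per-block budget
block_pop = 50    # hypothetical people per block
for k in (1, 100, 1000):
    # 2000 simulated releases of a district built from k noisy blocks
    noise = rng.laplace(scale=1 / epsilon, size=(2000, k)).sum(axis=1)
    relative = noise.std() / (k * block_pop)
    print(f"{k} blocks: simulated std {noise.std():.1f}, "
          f"theory {aggregate_noise_std(k, epsilon):.1f}, "
          f"relative error {relative:.4f}")
```

Relative error shrinks roughly like 1/sqrt(k) here, which is the intuition behind the "no real worry" view. Whether it survives postprocessing, which can introduce bias, is a separate question this sketch does not address.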

Apparently the Census Bureau plans to sidestep some of this by replacing the ACS with fully synthetic data, drawing some critique related to needing to anticipate all relationships of interest to ensure they are preserved. It’s almost as though “solving” the question of the appropriate relationship between EDA and CDA could also result in solutions to all the hard privacy problems.

Also, while above I talked about epsilon differential privacy since it’s the more common definition, the Census Bureau is using a slightly more complex version of differential privacy called zero-concentrated differential privacy (zCDP). I believe this is because it composes better over multiple queries. The Census implementation also seems oriented toward providing more by way of interpretation, as they pair ε with another parameter δ representing the likelihood that privacy loss might exceed the upper bound represented by a particular ε. But my knowledge here is limited (and honestly, writing this post makes me feel grateful that I don’t do privacy research!). There’s some discussion of why the switch to Gaussian noise and zCDP and (ε, δ) pairs starting around 14 minutes here: https://www.youtube.com/watch?v=bRIoE0rqwAw
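For a rough sense of how zCDP relates to (ε, δ) pairs, the sketch below uses two standard results from Bun and Steinke (2016): Gaussian noise with standard deviation σ on a query of sensitivity Δ satisfies ρ-zCDP with ρ = Δ²/(2σ²), and ρ-zCDP implies (ρ + 2·sqrt(ρ·ln(1/δ)), δ)-differential privacy. The ρ values simply add across queries. The function names and numbers are illustrative, and this is not necessarily the conversion the Bureau uses for its published figures.

```python
import math

def gaussian_rho(sensitivity, sigma):
    """zCDP parameter rho for Gaussian noise with std. dev. sigma."""
    return sensitivity ** 2 / (2 * sigma ** 2)

def zcdp_to_epsilon(rho, delta):
    """Epsilon such that rho-zCDP implies (epsilon, delta)-DP."""
    if rho <= 0 or not 0 < delta < 1:
        raise ValueError("need rho > 0 and 0 < delta < 1")
    return rho + 2 * math.sqrt(rho * math.log(1 / delta))

# Hypothetical release: 100 count queries, each with Gaussian noise sigma = 20.
per_query_rho = gaussian_rho(sensitivity=1.0, sigma=20.0)
total_rho = 100 * per_query_rho  # rho adds up under composition
print("total rho:", total_rho)
print("epsilon at delta = 1e-10:", zcdp_to_epsilon(total_rho, 1e-10))
```

Accounting in ρ and converting once at the end is what makes composition over many queries comparatively clean.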

> Apparently the Census Bureau plans to sidestep some of this by replacing the ACS with fully synthetic data

Wow, that’s…an idea. I’m guessing that they would still allow researchers to apply for access to the real data after going through all the IRB or DUA stuff? Similar to how you would need to apply now for access to the microdata. Which I guess would not be as bad then and research would still be possible. Idk I don’t really get the Census’s reasoning here but maybe I’m underestimating the ease with which people can be identified.

Interestingly, this thing (https://www.census.gov/library/fact-sheets/2021/what-are-synthetic-data.html) says

It’s always hard to interpret things like that – whether it’s genuine or if there are actually really strong tailwinds to the idea like the tweet Jessica linked to seemed to imply.

Interesting, I wasn’t aware that there was a way for researchers to sidestep whatever privatized version is publicly released. I’d be very curious if that’s allowed.

Multiple people sent me that tweet about ACS, so I assumed it’s for real. But yeah, I guess it’s not official until they print it somewhere!

On the last webinar I attended it sounded like they were still going to make the real figures available in the RDCs, but the above somewhat understates what a massive pain in the ass working in the RDCs is. You have to get a proposal approved to access one at all; no access for exploratory work is permitted. If you aren’t affiliated with a university that has one you have to burn grant money on paying to use it. You have to do a security investigation not unlike what you would need for a lower-level security clearance, including an interview with an OPM investigator. There is no access to the internet in the RDCs, so you can’t look up any obscure functions your code might need without leaving, memorizing it, and coming back. Then when you have results, you have to negotiate with the Bureau about what you’re allowed to take out, and depending on what you’re trying to take out, this process can be very painful.

The entire process is essentially unworkable unless you are TT faculty at an R1 or maybe a postdoc or graduate student of same.

+1. Though this is a good point in the sense that with all the data currently available to anyone on the web maybe there needs to be an intermediate level of access between what I can do as a casual user who wants to know what is happening in my census tract versus what I can do as a researcher who doesn’t need RDC level access but does need to do a serious analysis for research.

>Cynthia Dwork, primary author of differential privacy, has suggested creating a registry of epsilons

The registry of epsilons link goes to the wrong document. (I think?)

Oh wow, it sure does! That was not intended. Fixed.

Given that the US has a problem with gerrymandering, would the decrease in accuracy potentially be a price worth paying for less “overfitting” in redistricting? See also https://twitter.com/rubenarslan/status/1396505354339602432

If I were to guess, that’s why they’re suing

Sadly, the differential privacy noise will not make partisan gerrymandering any harder. Partisan gerrymanders rely on election data and/or voter files, both of which are not part of the Census and so will not contain any DP error. However, for analysts who must use noisy Census data to identify and analyze a potential gerrymander, the DP noise (and the biases induced by the Census’ postprocessing system) may make it more difficult to do so.

In theory yes, but actually the people who draw election districts are well aware of the association of race and other factors with voting behavior.

Oh fun, this topic is near and dear to my heart. I have only ever been blocked on Twitter by one person, to my knowledge, and that was Sam Wang, for challenging him (fairly politely!) about this.

The big problem is, as Stephen Ruggles and others have pointed out, the Census Bureau didn’t actually reidentify people in the way I think most people would understand the concept. They could only know which people they correctly reidentified by using information that no one outside the Bureau has. If you match n% of a population but you don’t know which records comprise the n%, which is effectively what they would have had without using information no one else has access to, then what did you really accomplish? There are lots of other problems too, but this is the most fundamental one.

And don’t even get me started on the synthetic ACS, which is going to take a product that was already borderline-useless from a data quality perspective for lots of applications the product it replaced was used for and make it actually-useless.

On or about May 15, Julia Angwin, _The Markup_, interviewed Dwork about the Census and differential privacy: https://www.getrevue.co/profile/themarkup/issues/can-differential-privacy-save-the-census-602525

Hi Jessica:

Very interesting topic. A similar controversy has emerged on the ASA mailing list…

https://community.amstat.org/communities/community-home/digestviewer/viewthread?MessageKey=27ad828e-d27b-4005-95d5-4a7bf514c56b&CommunityKey=6b2d607a-e31f-4f19-8357-020a8631b999&tab=digestviewer#bm27ad828e-d27b-4005-95d5-4a7bf514c56b

Rodney