Some twitter action

Elliott Morris, my collaborator (with Merlin Heidemanns) on the Economist election forecast, pointed me to some thoughtful criticisms of our model from Nate Silver. There’s some discussion on twitter, but in general I don’t find twitter to be a good place for careful discussion, so I’m continuing the conversation here.

Nate writes:

Offered as an illustration of the limits of “fundamentals”-based election models:

The “Time for Change model”, as specified here, predicts that Trump will lose the popular vote by ~36 points (not a typo) based on that 2Q GDP print.

On the other hand, if you ground your model in Real Disposable Income like some others do (e.g. the “Bread and Peace” model) you may have Trump winning in an epic landslide. Possibly the same if you use 3Q GDP (forecasted to be +15% annualized) instead of 2Q.

It certainly helps to use a wider array of indicators over a longer time frame (that’s what we do) but the notion that you can make a highly *precise* forecast from fundamentals alone in *this* economy just doesn’t pass the smell test.

Looking at these “fundamentals” models (something I spent a ton of time in 2012) was really a seminal moment for me in learning about p-hacking and the replication crisis. It was amazing to me how poorly they performed on actual, not-known-in-advance data.

Of course, if you’re constructing such a model *this year*, you’ll choose variables in way that just so happens to come up with a reasonable-looking prediction (i.e. it has Trump losing, but not by 36 points). But that’s no longer an economic model; it’s just your personal prior.

Literally that’s what people (e.g. The Economist’s model) is doing. They’re just “adjust[ing]” their “economic index” in arbitrary ways so that it doesn’t produce a crazy-looking number for 2020.

To support this last statement, Nate quotes Elliott, who wrote this as part of the documentation for our model:

The 2020 election presents an unusual difficulty, because the recession caused by the coronavirus is both far graver than any other post-war downturn, and also more likely to turn into a rapid recovery once lockdowns lift. History provides no guide as to how voters will respond to these extreme and potentially fast-reversing economic conditions. As a result, we have adjusted this economic index to pull values that are unprecedentedly high or low partway towards the limits of the data on which our model was trained. As of June 2020, this means that we are treating the recession caused by the coronavirus pandemic as roughly 40% worse than the Great Recession of 2008-09, rather than two to three times worse.

This is actually the part of our model that I didn’t work on, but that’s fine. The quote seems reasonable to me.

Nate continues:

Maybe these adjustments are reasonable. But what you *can’t* do is say none of the data is really salient to 2020, so much so that you have to make some ad-hoc, one-off adjustments, and *then* claim Biden is 99% to win the popular vote because the historical data proves it.

Elliott replies:

We take annual growth from many economic indicators same as you, and then we scale it with a sigmoid function so that readings outside huge recessions don’t cause massive implausible projections. That’s empirically better than using the linear growth term.

We also find that there’s a strong relationship between uncertainty and the economic index, so we’re putting much less weight on it right now than we normally would. That all sounds imminently sensible to me!

Honestly is sounds like the main difference between what you’ve done in the past and what we’re doing now is that we’re shrinking the predictions in really shitty econ times toward the prior for a really bad recession — a choice which I will happily defend.

And they get into some hypothetical bets. here’s Nate:

On the off-chance our respective employers would allow it, which they almost certainly wouldn’t in my case, could I get some Trump wins the popular vote action from you at 100:1? Or even say 40:1?

Elliott:

25:1 sure

Nate:

So that’s your personal belief? That starts to get a lot different from 100:1.

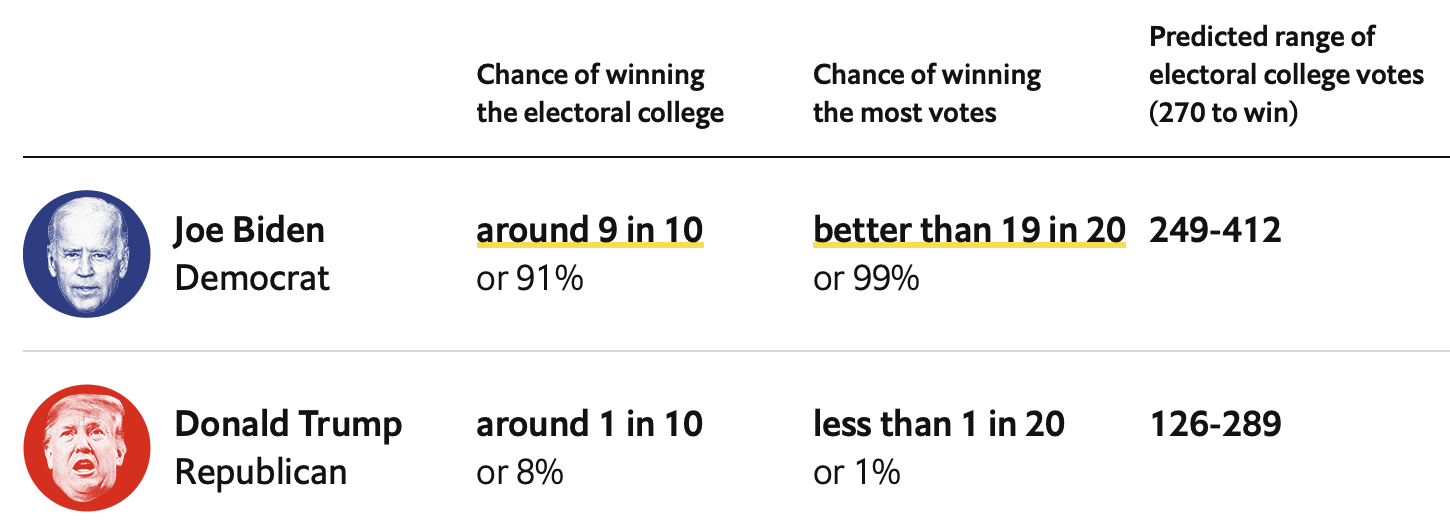

As of today, our model gives Biden a 91% chance of winning the electoral vote and a 99% chance of winning the popular vote. That 99% is rounded to the nearest percentage point, so it doesn’t exactly represent 99-to-1 odds, but Nate has a point that, in any case, this is far from 25-to-1. Indeed, we’ve been a bit uncomfortable about this 99-to-1 thing for awhile. Our model as written (and programmed) is missing some sources of uncertainty, and we’ve been reprogramming it to do better. It takes awhile to work all these things out, and we hope to have a fixed version soon. The results won’t change much, but they’ll change some.

Why won’t the results change much? As we’ve discussed before, our fundamentals model is predicting Biden to get about 54% of the two-party vote, and Biden’s at about 54% in the polls, so that gives us a forecast for his ultimate two-party vote share of . . . about 54%. Better accounting for uncertainty in the model won’t do much to the point forecast of the national vote, nor will it do much to the individual state estimates (which are based on a combination of the relative positions of the states in the 2016 election, some adjustments, and the 2016 state polls), but it can change the uncertainty in our forecast.

Unpacking the criticisms

As noted above, I think Nate makes some good points, so let me go through and elaborate on them.

1. Any method for forecasting the national election will be fragile and strongly dependent on untestable assumptions.

Yup. There have only been 58 U.S. presidential elections so far, and nobody would think that we could learn much from the performance of Martin Van Buren etc. When I started looking at election forecasting, back in the the late 80s, we were only counting elections since 1948. We’ve had a few more elections since then, but on the other hand the elections of the 1950s are seeming pretty more and more irrelevant when trying to understand modern polarized politics.

Nate gives some examples of election forecasting models that have seemed reasonable in the past but which would yield implausible predictions if applied unthinkingly to the current election, and he says he uses a wider array of indicators over a longer time frame, which is what we do too. From a statistical perspective, we have lots of reasonable potential predictors, and the right thing to do when making a forecast is not to choose one or two predictors, but to include them all, using regularization to get a stable forecast.

There is value in looking at models with just one predictor (that’s what we do in the election example in Regression and Other Stories) because it helps us understand the problem better, but when it’s time to lay your money down and make a forecast, you want to use as much information as you can.

Another twist, which we include in our model and discuss in our writeup, is partisan polarization has increased in recent decades, hence we’d expect the impact of the economy on the election to be less now than it was 40 years ago.

But no matter how you slice it, our model, like any others, has lots of researcher degrees of freedom, and I agree with Nate that you can’t make a highly precise forecast from fundamentals alone.

To flip it around, though, you have to do something. Our fundamentals-based model forecasts Biden with 54% of the two-party vote. We gave this forecast some uncertainty, but maybe not enough, which is why we’ve been revising the model. I think this is a matter of details, not a fundamental disagreement with Nate or anyone else. It would be a mistake for us or anyone to claim that their fundamentals-based model can make a highly precise vote forecast right now.

There’s one place where I think Nate was confused in his criticism, and that’s where he wrote that we “say none of the data is really salient to 2020, so much so that you have to make some ad-hoc, one-off adjustments, and then claim Biden is 99% to win the popular vote because the historical data proves it.” First, we never said the data aren’t salient to 2020! We do think that the economy should be less predictive than in earlier decades, but that’s not the same as setting a coefficient to zero. Second, our 99% does not come from the forecasting model alone! That 99% is coming from the forecast plus the polls.

Again, we do think that our forecast interval was too narrow and that our analysis did not fully account for forecasting uncertainty, and that should end up lowering the 99% somewhat. That’s one reason I think Nate’s basically in agreement with us. He appropriately reacted to this 99% number and then I think slightly misunderstood what we were doing and attributed it all to the fundamentals-based forecast. And, to be fair, the fundamentals-based forecast is the one part of our model that’s a black box without shared code.

I just want to say, again, that we need some kind of forecast. We could just say we know nothing and we’ll center it at 50%, but that doesn’t seem right either, given that Trump only received 49% last time and now he’s so unpopular and the economy is falling apart. But then again he’s an incumbent. . . . But then again the Democrats have outpolled the Republicans in most of the recent national elections. . . . Basically, what we’re doing when we have such conversations is that we’re reconstructing a forecasting model. If you want, you can say you know nothing and you’ll start with a forecast of 50% +/- 10%. But I think that’s a mistake. I wouldn’t bet on that either!

2. How to think about that 99%?

I wouldn’t be inclined to bet 99-1 on Biden winning the national vote, and apparently Elliott wouldn’t either? So what are we doing with this forecast:

There are a few answers here.

The first answer is that this is what our model produces, and we can’t very well tinker with our model every time it produces a number that seems wrong to us. It could be that the model’s doing the right thing and our intuition is wrong.

The second answer is that maybe the model does have a problem, and this implausible-seeming probability is a signal that we should try to figure out what’s wrong. That’s the strategy we recommend in Chapter 6 of our book, Bayesian Data Analysis: use your model to make lots of predictions, then look hard at those predictions and reconsider your modeling choices when the predictions don’t make sense. If a prediction “doesn’t make sense,” that implies you have prior or external information not already included (or not appropriately included) in the model, and you can do better.

This indeed is what we have done. We noticed these extreme predictions awhile ago and got worried, and we’re now in the middle of improving our model to better account for uncertainty. We’ve kept our old model up in the meantime, because we don’t think our probabilities are going to change much—again, with Biden at 54% in the forecast and 54% in the polls, there’s not so much room for movement—but we expect the new model will have a bit more posterior uncertainty.

At this point you can laugh at us for being Bayesian and having arbitrary choices, but, again, all forecasting methods will have arbitrary choices. There’s no way around it. This is life.

But there’s one more thing I haven’t gotten to, and that’s the difficulty of evaluating 99-to-1 odds. How to even think about this? Even if I fully believed the 99-to-1 odds, it’s not like I’m planning to lay down $1000 for the chance of winning $10. That wouldn’t be much of a fun bet.

I think we can move the discussion forward by using a trick from the judgment and decision making literature in psychology and moving the probabilities to 50%.

Here’s how it goes. Our forecast right now for Biden’s share of the two-party vote is 54.2% with a standard error of about 1.5%. The 50% interval is roughly +/- 2/3 of a standard error, hence [53.2%, 55.2%].

Do we think there’s a 50% chance that Biden will get between 53.2% and 55.2% of the two-party vote?

The range seems reasonable—it does seem that, given how things are going, that a share of less than 53.2% for Biden would be surprisingly low, and a share of more than 55.2% would be surprisingly high. Polarization and all that. Still and all, [53.2%, 55.2%] seems a bit narrow for a 50% interval. If I were given the opportunity to bet even money on the inside or the outside of that interval, I’d choose the outside.

So, yeah, I think the uncertainty bounds at our site are too narrow. I find it easier to have this conversation about the 50% interval than about the 99% interval.

That said, there are the fundamentals and the polls. So I don’t think the interval is so bad as all that. It just needs to be a bit wider.

At this point, we’re going in circles, interrogating our intuitions about what we would bet, etc., and it’s time to go back to our model and see if there are some sources of uncertainty that we’ve understated. We did that, and we’re going through and fixing it now. In retrospect we should’ve figured this out earlier, but, yeah, in real life we learn as we go.

Criticism is good

Let me conclude by agreeing with another of Nate’s points:

These are sharp, substantive critiques from someone who has spent more than 12 years now thinking deeply about this stuff. These models are in the public domain and my followers learn something when I make these critiques.

One of the reasons we post our data and code is so that we can get this sort of outside criticism. It’s good to get feedback from Nate and others. I wasn’t thrilled when Nate dissed MRP while not seeming to understand what MRP actually does, but his comments on our forecasting model are on point.

I still like what we’re doing. Our method is not perfect, it has arbitrary elements, but ultimately I don’t see any way around that. I guess you can call me a Bayesian. But there’s nothing wrong with Nate or anyone else reminding the world that our model has forking paths and researcher degrees of freedom. I wouldn’t want people to think our method is better than it really is.

Again, I like that Nate is publicly saying what he doesn’t like about our method. I’d prefer even more if he’d do this on a blog with a good comments section, as I feel that it’s hard to have a sustained discussion on twitter. But posting on twitter is better than nothing. Nate and others are free to comment here—but I don’t think Nate would have anything to disagree with in this particular post! He might think it’s kinda funny that we’re altering our model midstream, but that’s just the way things go in this world when our attention is divided among so many projects and we’re still learning things every day.

Another way to put it is that, given our understanding of politics, I’d be surprised if we could realistically predict the national vote to within +/- 1 percentage point, more than three months before the election. Forget about the details of our model, the crashing economy, forking paths, overfitting, polling problems, the president’s latest statements on twitter, etc. Just speaking generally, there’s uncertainty about the future, and a 50% interval that’s +/- 1 percentage point seems too narrow. If we’re making such an apparently overly precise prediction, it makes sense for outsiders such as Nate to point this out, and it makes sense for us to go into our model, figure out where this precision is coming from, and fix it. Which we’re doing.

One other thing. On the twitter thread, some people were ragging on Nate for criticizing us without having a model of his own. That’s just silly. It’s completely fine for Nate or anyone else to point out flaws, and potential flaws, in our work, without having their own model. For one thing, Nate’s done election prediction in the past and he has some real-world experience of making public uncertainty statements under deadline. But, even without that, it’s perfectly fine for outsiders to criticize, whether or not they have models of their own to share. We appreciate such criticism, wherever it’s coming from.

> So, yeah, I think the uncertainty bounds at our site are too narrow. I find it easier to have this conversation about the 50% interval than about the 99% interval.

Hmmmm. I don’t know. It seems most users of these election prediction intervals are more interested in the 99% interval though. Trump has a <1% change of winning the election (or not) is a much more actionable prediction than 50% between 52 and 53%. Further, just sitting here and thinking intuitively about what I'd expect a posterior for 2020 to look like, and I'd probably imagine something with much thicker tails than a gaussian (corresponding to unexpected late events…)… I'm not sure if your current model allows that.

Zhou:

It’s fine to say that some people care about the 99% interval. My point is that if you care about the 99% interval, one way to get to that is to look at the 50% interval, because it’s easier to build up intuition that way. This is relevant given that so much or our conversation about this model is about our intuition.

I guess I’m arguing that maybe the 50% interval should in some way be decoupled from the 99% interval.

I don’t understand at all why the 50% interval helps the model seem more reasonable. The general point that the model predicts Biden will win the popular vote with 99% certainty is still true. The fact that model predictions can be seen as less bad from one perspective doesn’t seem to suggest it’s working well.

Anon:

No, the 50% interval doesn’t helps the model seem more reasonable. The 50% interval helps me understand why the model’s prediction seems unreasonable!

Thank you to Andrew for this excellent write up.

Have y’all considered publishing the code to generate the prior (i.e. the fundamentals model)? While it is true that any model is the product of some arbitrary choices, it is difficult to evaluate that arbitrary choice when the external observer has to infer it from the forecasts being made.

The code published online, unfortunately, doesn’t explain where the fundamentals model used comes from or how it works, nor does it include the data one would need to construct the fundamentals model you’re using for 2020.

My understanding is the 538 model (and Upshot models of past) were tweaked midstream all the time. So I wouldn’t expect much critique there..

My understanding of 538’s model in the past is that they do basically the same thing you’re doing: take some fundamentals, take some polls, and put them together. You should only be disagreeing in the details. The 538 predictions for this year should be up somewhat soon so it will be interesting to see what they say when they do.

Alex:

One distinctive feature of our model is how we include information from both state and national polls, and how we allow for state and national opinion to drift during the campaign. That said, different models that use the same information should give similar forecasts. As Hal Stern says, the most important aspect of a statistical method is not what it does with the data, but what data it uses.

To this point, I recall something on this blog about why polsters were so off in 2016 was due to non-response from Trump supporters. Is there anything in your model that tries to ensure that a similar non-response bias doesn’t plague 2020 predictions?

Where did you get this idea?

I can’t find the exact post(s), but I’m pretty certain it was said that differential non-response to pollsters was an issue, and that pollsters should post-stratify on party id (to answer Jared’s question). (This is different from Trump supporters lying to pollsters, for which, if I remember correctly, there isn’t much evidence),

My understanding from their prior discussion is that the 538 model did include national and state polls and used a random slope (elasticity) + random intercept (state lean) from national mean to state mean + prediction noise term with variance based on time, obtained posterior distribution of state means and simulated the state winners directly. I don’t recall if they tried to enforce a constraint for a coherent relationship between the state means and the national mean.

Thanks for this post, Andrew. I agree that the blog is a much better place for substantial discussion than twitter.

One way I’ve thought about this issue is to disentangle the “poll averages” (prior + data) from the “forecast” (what might happen conditional on poll averages). In the context of stan, that’s combining prior information with polls for “poll averages” in the model block, then simulating potential election results, including non-sampling error and time-to-election uncertainty, in the generated quantities block.

The necessary parameters for the forecast can be estimated from prior elections.

I’m surprised that there isn’t more discussion about uncertainty in turnout and likely voter models. I expect that we will see a huge shift in turnout due to changing election circumstances (due to both the shift to mail in voting and the current state of fear around COVID-19).

There is already some pretty good evidence there is a quite a big correlation between fear of COVID-19 and political party. It wouldn’t surprise me if we see a 10% drop in turnout among people afraid of COVID-19 (which would in turn result in a ~3% drop in Democratic turnout relative to Republican turnout).

Ah, forgot to post my source for per-party differences in fear. https://www.pewresearch.org/politics/wp-content/uploads/sites/4/2020/06/PP_covid-concerns-by-party_0-02.png was a relatively recent poll which showed that 64% of democrats are afraid of COVID-19 compared to only 35% of republicans.

I think two points this and the original discussion dance around explain why we’re intuitively uncomfortable seeing any presidential election model output such high confidence:

1. How reliably can we fit the shape of the error distribution? If a thicker tailed distribution is consistent with the data, that can both reconcile the how we can be comfortable intuitively with the 50% interval and uncomfortable with 99th percentile point estimate. Corollary: Without a physical reason for the distribution shape, why should we believe we can make inferences from N datapoints about points on the inverse distribution outside of [1/N,1-1/N]?

2. How do we account for the probability of errors not contemplated by the model? As the probability of error within the universe the model knows goes to zero, the percentage of “true error” due to factors outside of it whether it be a change in underlying structure not captured by the model, another model specification error, or even coding or data errors becomes large.

Thanks to you and your collaborators for this work and your transparency!

My understanding is that you model the voteshare over time as a Brownian motion (or discrete Gaussian random walk) with a bias toward your prior. Did you ever consider using other models for the random walk, e.g., a more general Levy flight? Maybe it would be computationally intractable, but a diffusion process that allows for rare dramatic shifts could help account for news-driven swings. It seems like one possible underrated source of uncertainty in the current model is the chance of a well-timed transient shift in the polls that is too large and/or too fast to realistically occur under Brownian motion. A Levy flight could allow this sort of “macro” volatility without making the day-to-day variation unrealistically large.

Dpr:

In Stan, it’s easy enough to use any innovation distribution (our model is discrete with a change every day). We’ve played around with a long-tailed distribution with occasional jumps, but we decided to stick with Gaussian jumps because political changes don’t seem to happen all in one day. That said, sure, there are dynamics in the process that we’re not capturing with our simple model. In any case, right not the time-series model doesn’t matter much, given that the polls are already at the spot where the fundamentals are predicting.

If Ghislaine Maxwell decided to save her skin by coming up with say photographs of Trump molesting children, or Biden gets COVID and dies you can bet there will be a big jump… I think there are quite a number of risks like this that are plausible this time around which weren’t so in the past. Open street warfare with semiautomatic rifles between black and white racial organizations in Portland could be upcoming… Kim Jong un could be killed by his sister and she could launch rockets directly at South Korea, which plunges N Korea into a coup / power vacuum… China could have a major outbreak… It might be revealed that Zoom is recording UK government meetings and funneling them To the Chinese communist party… To me this kind of long tail risk is the missing piece.

+1. I’ll add unprecedented levels of vote suppression, and election sabotage as plausible long tail risks. I mean POTUS is openly wondering about postponing an election due to his own sabotage of USPS and disbelief in mail in voting, meanwhile he claims its safe enough to reopen schools. All of these election models condition on some reasonable semblance of norms being upheld. Well…we’ll see.

Daniel:

I agree with the general point. Again, these are in the error term in the same way that “Henry Kissinger negotiates with the South Vietnamese to prolong the war” or “the Kennedy organization does some vote fraud” or “Ross Perot runs for president” or “the Russians release hacked documents” or “the Supreme Court stops the vote counting in Florida” were in the error term in earlier elections.

I think Talebs point a month ago or so was that uncertainty grows pretty rapidly in time. I think his model was excessive but I do think the Dynamics are such that intervals should visibly widen in your plots, and revert to a kind of 50-50 baseline over some timeframe. that timeframe may be say 15 months so that you don’t revert very fully by Nov, but that dynamic is still there.

Think of a paper bag blowing around on sand dunes… if it’s near the bottom of a valley it will later be found at higher elevation on average. if it’s near the top of a peak it will later be found at lower elevation.

I get where you’re coming from for “large changes in 1 day unlikely” suggesting e.g. no Cauchy innovations. However, large changes do happen in a week or month. With a stable distribution between 1 and 2, could you calibrate the daily change to match empirical monthly shifts? Another way to think about it is that while daily jumps aren’t huge, they also aren’t iid. Once a big event breaks, it filters into people’s opinions over time and filters into observed polling slowly creating correlation. Big events don’t always reveal themselves at once, consider drip drip drip of Clinton email followed by Comey letter.

Even if polls and fundamentals are in the same spot now (for the point estimate) a larger variance in the change in polls will increase uncertainty in the weighted combination, which is what I thought we’re reacting to here (too tight seeming uncertainty).

Ryan:

I agree with you. Our model for time trends in opinion is too simplistic. But I agree with Nate that the fundamentals-based forecast is the largest concern in our model, or in anybody else’s.

The difference between 25:1 and 99:1 is big in terms of payoff, but (a) not as big as it seems, in a way: it’s less than a factor of 4, after all; and (b) the uncertainty distribution doesn’t have to widen all that much to move a lot of probability in the Biden vote to the other side of the 50% boundary. Also (c) there has to be something like a 1% chance either Biden or Trump will die before Election Day, let’s assume that would be a push.

I think the biggest conceptual problem I have with the approach is the effect of the pandemic. In terms of magnitude of its effect on the electorate (and the economy), the only comparable things I can think of are very rare events like the start of the Civil War, the onset of the Great Depression, and the start of U.S. involvement in WWII. I realize these are on a continuum in most important ways — there were other big depressions, other wars, even other pandemics — but this does seem like the mother of all economic shocks. In addition to the huge and somewhat unpredictable effects on people’s voting preferences, there are also large unpredictable effects on voting itself: to what extent will people be willing to go to the polls, and how much will this differ by voter preference; and to what extent will voter suppression efforts be successful (either legal ones or illegal ones). Things like hacking by foreign powers or other interested parties are also possible now, and although vote tampering has occurred in the past I think it is now possible at a scale that was never possible before, although this does not mean it will actually occur at scale.

Putting it all together: if the question was about voter _preferences_, I think a vote somewhere in the range of 99:1 in favor of Biden would be about right. There’s no way Trump is more popular now than he was four years ago, and no way Biden is less popular than Hillary was. When I say “no way”, I mean that if we had some way of actually measuring this sentiment accurately I would be willing to put 99:1 on it, up to say $9900. Eh, there’s the chance of a change between now and election day — a video of Biden in blackface, Trump rescues a baby from a burning building — so let’s make it 95:1. But as far as what will actually happen when the votes are counted, there’s gotta be more uncertainty than that. A lot more.

+1

Andrew,

Thank you very much for your willingness to address Nate Silver’s concerns, and for your openness in this blog post. Willingness to admit errors is the best of the scientific tradition, but seen far too little.

Please think about what will happen if your revised model AGAIN predicts a 99 percent or greater probability of a Trump popular vote loss, or another intuitively overconfident prediction.

If that occurs, you will have to choose between two bad options: Re-revising your model and losing credibility, or doubling down on the exceptionally confident prediction. The later option is especially worrying because it is psychological tempting, and risks prominent voices switching the focus of their criticism from Trump to Biden out of the mistaken impression that Trump is guaranteed to lose — recapitulating one of the worst Democratic strategic blunders of 2016.*

To prevent move overconfident forecasts from your model, I hope you perform a major reevaluation and revision of your work, and do not limit yourself to minor changes. Otherwise, you risk partaking in one of the worst scientific traditions, an evil sibling of the best one mentioned above: When presented with new evidence contradicting one’s theory, repeatedly revising one’s theory only to the least extent necessary to match the new expectations.

*This is a problem for the model regardless of presidential preference — predictions should reflect who is likely to win or lose, and not become part of the reason that a candidate wins or loses.

More:

It’s not clear. At some point the probability really can exceed 99%. For example, suppose you don’t want to assign 99% probability to the event that Biden receives more than half of the two-party vote. Maybe you only want to say that probability is 90%. Fine. But, then, what’s your probability that Biden receives more than 49% of the two-party vote? 48% of the vote? 45% of the vote? Etc. At some point you’ll have to bite the bullet and assign a probability of more than 99%. Probabilities of more than 99% do exist!

Thanks Andrew. I think you are incorrect when you state, “At some point you’ll have to bite the bullet and assign a probability of more than 99%.” For example, consider the modelled electoral college margin instead of the win probability itself. If you plot the margin, you have a point estimate over time, and you can include 50%, 67%, and 90% uncertainty bands, without there being any requirement that you also show 99% uncertainty bands.

From my perspective, I think it is perfectly consistent to say, “I trust the 50%, 67%, and 90% uncertainty bands of my model, but I don’t trust the 99% bands because I don’t think I am able to model tail probabilities successfully.” Presenting 99% bands or including a 99% point estimate is a choice, not a requirement.

As you point out, there are many, many researcher degrees of freedom in your model, comparatively few data points to fit with, and the available data have only partial relevance to the present. In that context, I don’t think you can reasonably model the tails. If one can’t model the tails, then one shouldn’t show them.

What do you think: If a paper in another field (for example, epidemiology) presented 99% confidence bands for a complicated trend line, would you trust those bands or would you think their exact demarcation is probably highly sensitive to modelling choices?

More:

I agree that we don’t need to officially release any 99% probability statements. But if someone pushes me to ask what probability I would bet at, then at some point I will reach the 99% threshold. For example, I’d feel very comfortable offering 99-1 odds that, in a Biden vs. Trump race, that Biden will get more than 40% of the vote! So now the question is just where you draw the line.

To put it another way, sure, reporting 99% bands is a choice. But, internally, our model does have 99% bands, and it’s completely reasonable for Nate or anyone else to criticize our model based on its implicit 99% statements.

Andrew, I’m glad we agree there’s not a requirement to release 99% probability statements. I also agree it’s worth interrogating what events the model assigns 99% probability, even if 99% probabilities are not explicitly reported because they are viewed as insufficiently robust to (semi)arbitrary modelling choices.

Additionally, I agree that probabilities over 99% do exist, as in your good example of Biden getting at least 40% of Biden-or-Trump votes. Both personal understanding and a fitted model should provide very high odds for these kind of situations, which might be called the “domain of self-evident predictions.”

But after all that agreement, here’s where I think we importantly disagree: With so few presidential elections, I think there is a gap between the domain of the self-evident and the domain where there is enough US presidential election data for models to help. Within this gap, outcomes are neither self-evident, nor is there enough data to actually check whether model predictions are reliable/calibrated. Consequently, in this gap, model predictions are caused by uncheckable modelling choices (like tail assumptions), and not caused by the data. So they are almost purely personal judgement, not data-backed.

For example, the difference between 1% and 5% Trump win probabilities seems important. Does the presidential election data itself include enough information to check the calibration of a rare event prediction like this, and show that presidential outcomes a model assigns 1% probabilities do not actually occur with 5% probabilities?

>risks prominent voices switching the focus of their criticism from Trump to Biden out of the mistaken impression that Trump is guaranteed to lose

>*This is a problem for the model regardless of presidential preference — predictions should reflect who is likely to win or lose, and not become part of the reason that a candidate wins or loses.

Wow, didn’t realize AG’s model was so influential to the outcome of a presidential election.

I should have said “the collection of presidential forecast models”, agreed.

“The later option…risks prominent voices switching the focus of their criticism from Trump to Biden out of the mistaken impression that Trump is guaranteed to lose — recapitulating one of the worst Democratic strategic blunders of 2016. To prevent move overconfident forecasts from your model…. ”

No one knows that the forecast is overconfident. That’s just a guess.

I think you’re asking a forecaster to create a model that will influence the election the way you want it to go. Is that right???

No, I’m not. Please see this text in my first comment, “This is a problem for the model regardless of presidential preference — predictions should reflect who is likely to win or lose, and not become part of the reason that a candidate wins or loses.”

iirc, besides the 2016 general, forecaster overconfidence also affected the 2020 Democratic primary (Biden undervalued by 538), the 2016 Republican primary (Trump undervalued by 538), and the 2012 general (Romney overvalued by internal forecasts, producing bad Republican blunders).

To my understanding, Andrew Gelman, Elliott Morris, and Nate Silver view the forecast as likely overconfident.

I’m trying to add this point: I’m not sure it’s possible for a model to output a 99.0% US presidential election probability in a way that is robust to semi-arbitrary modelling choices, because of limited sample sizes of prior elections, diminishing relevance of older data, and unavoidable researcher degrees of freedom in election forecasting.

As OccasionalReader says, why should we believe inferences outside [1/N,1-1/N] based on N data points? Those bounds are not exactly where the problem arises, but I think they illustrate the issue nicely.

It’s possible for presidential election models to output less extreme probabilities in an acceptably robust way, and it’s possible to output a probability of 99.999% and think that at least 99% must be reasonable. But to output 99.0%? or 98.0%? or etc?

Part of the issue is that the difference between a 1% forecasted winner and, say, a 6% forecasted winner is consequential, so we don’t want it to be highly sensitive to arbitrary modelling choices, like uncheckable tail structure assumptions. Differences between say 30% and 35% or 0.001% and 0.002% are less important to how elections are viewed.

https://www.bloomberg.com/opinion/articles/2020-07-24/u-s-election-models-have-the-same-flaws-as-in-2016

Here’s another good critique I liked

James:

I followed the link and I’m not at all convinced. He writes, “Betting markets are still around 40%, as is the estimate from Professor Philip Tetlock’s Good Judgment Project, which I consider the most reliable available.”

I see no reason why I should trust those numbers more than a model that we’ve estimated with historical data.

To put it another way, where does that 40% come from? What’s this guy’s estimate and uncertainty of Biden’s share of the two-party vote? Given the electoral college bias, maybe he’s estimating Biden gets 51% of the two-party vote. That doesn’t seem right to me, given the poor economy, Trump’s consistent unpopularity in approval polls, and Biden’s performance in head-to-head polls. This guy’s going against all this data . . . looks like slow-to-update to me.

What I like is the argument that the moves are consistent with incorporating all of the information today – you seem to have a narrowing towards Biden/Trump over time which isn’t consistent with being a probability

The market doesn’t have this property – which makes it a better forecast to Brown. Im not sure about the better, but I definitely agree – the moves are a troubling part about your forecast

James:

What do you mean, “a narrowing towards Biden/Trump over time”?

In the sense, you may have an implicit bias in your model (either in the point estimate or in the uncertainty) that allows your model to have “81 out of 137 days, and when it did go up it went up an average of 1.09 percentage points. When it went down it went down only an average of 0.73 percentage point, similar to the payouts on the craps bet”

there’s a predictable component to your evolution – either for Biden or the candidate in the lead, which doesnt behave like a probability (no predictable component)

Very good point about taking 99-to-1 odds bet.

Most people would not be willing to take the bet for a true 1% outcome at 99-to-1.

However, as mentioned by others, getting the 50% interval right doesn’t mean the 99% interval (or even 80, 90%) is right.

There is also a dynamic element in the form of “If Trump only has 1% chance of winning the popular vote, how would he react?”

The answer, of course, is that he will choose strategies/policies that have high variance, even if they are low return.

For instance, 72 hours ago, I believed that there was 99.9%+ chance that the election will be held on Nov 3rd, but now my belief is only 99%+.

Not to suggest that you should incorporate this into your model (hard to imagine how without being too ad hoc), but I think this might be part of where the discrepancy between public perception and what the model tells us comes from.

Fred:

I agree that the 99% interval is much less robust than the 50% interval. My point is that I don’t even trust our 50% interval! The questionable nature of our 99% claim is what pushed me to look more carefully at the 50% claim. In retrospect, we should’ve been looking more carefully at the 50% interval all along.

Regarding the idea of high-risk, high-return strategies: Sure, but I don’t think strategy has such an impact on the election. I think strategy is overrated.

> My point is that I don’t even trust our 50% interval!

That answers, at least in part, the questions I asked at https://statmodeling.stat.columbia.edu/2020/07/17/dispelling-confusion-about-mrp-multilevel-regression-and-poststratification-for-survey-analysis/#comment-1386479

I find interesting that the output of the model, 91% probability of a Democrat victory in the election, has actually not changed much in the last six weeks, from the time when you believed in the 88% chance of winning.

Carlos:

Neither the fundamentals-based forecast nor the polls have shifted much during the past month, so it’s no surprise that the forecast hasn’t changed much.

What I meant is that your “loss of trust” in the model is not due to the model giving a different output that is not as good/reasonable/acceptable as the previous one. It’s still (more or less) the same output but your view has changed. Which is of course not a bad thing, it’s just that it’s more difficult when you can’t blame it on the model behaving worse now.

Yes, indeed, that’s why I said we should’ve thought about this issue earlier. Nobody to blame but ourselves here.

Do any of these forecasting models include Chauvin’s knee/Floyd’s neck? Pandemic miracle cure? Pence philandering? Those unanticipated events would move the needle but no one could possibly have a model rich/imaginative enough to capture such happenings.

As the old saying goes, “There are two kinds of forecasts–lucky or lousy.”

Paul:

Some of this information percolates through to the presidential approval and trial-heat polls.

I think one of the largest sources of uncertainty is the pandemic/vaccine situation.

At least two vaccines just went into Phase III trials, so data might be available shortly before the election – so there could maybe be a “warp speed” approval just before…

I don’t think a vaccine becoming available by itself would be enough to change the outcome of the election. But not every nation has our FDA. If several other major nations are vaccinating people *already*, before the US does, and Trump gets credit for “cutting through the red tape” to get a vaccine approved (against the ingrained caution of “the bureaucracy”), THAT could change things.

Still probably not enough. But it might be. (Especially if some less affected European countries like Germany get a fall 2nd wave, so the US looks better by comparison, & the lockdown strategy proves worse than it looks right now.)

My understanding is that all such forecasts, whether explicitly or not, are conditional. *If* none of the inputs changes materially between now and November, and *if* nothing important but unanticipated (and priced into the polling or other data) occurs, then here is my forecast. We don’t have a probability model for the ensemble of events that can occur over the next three months, so all we can do is model the current moment and extend it forward conditional on an essentially unaltered environment.

Peter:

The forecast is not supposed to be conditional on nothing unusual happening. The error term in the model is supposed to account for unusual events (within the context of unusual events in the past, but, as we’ve discussed, many past elections have had unusual aspects.

But Andrew, isn’t there a difference between an exceptional factor that is known at the time of the forecast (e.g. the race or religion of a candidate) and one that is unknown but may eventuate? I think one can rationally construct a model that incorporates the impact of the first, but I don’t see how one deals with the second. How can we place a probability on an October Surprise with the potential to substantially alter the outcome?

Peter:

The point here is that those past unusual factors (races and religions of candidates, etc., are not formally included in the model. They’re implicitly all in the error term. So what our model is saying is that any possible October surprise can be thought of as a random draw from a hypothetical distribution of October effects from which previous October effects were drawn. I don’t think that’s so unreasonable; after all, political commentators have been talking about October surprises in every election since at least 1980. I agree that our model does not not include all our knowledge about what might happen in the next few months, but I don’t really see any feasible way of including that. So rather than saying our model is conditional on nothing unusual happening, I’d rather say that our model’s error term allows for unusual events, without making any special adjustment for 2020.

Nicely explained. Textbooks need to include this type of example.

Not convinced yet. I think it’s a huge leap to propose there’s a common distribution for October surprises (to pick just one example) across all the election cycles; surely their prospective likelihood and political impact will vary depending on the personalities and political factions involved. And, to take another example, a rather unprecedented pandemic (different dynamics from past ones, still not well understood) makes 2020 potentially more subject to unanticipated public health shocks, both positive and negative, than 2012 or 2016 — but how much more and how consequential?

I agree in the sense that one can, if one must, make arbitrary assumptions that allow imposing some sort of probability distribution on future events, but that strikes me as self-defeating. You’ve got a pretty good model for how the election would go if it were held today, but if you combine its well-supported error bands with arbitrary error, you’ve got arbitrary error.

In another, somewhat similar context I’ve formulated a sort of law: the sum of a well-estimated number and a poorly-estimated number is a poorly-estimated number. This makes the case for separating out the more valid parts of an analysis and not letting them get contaminated by the less valid ones. This was originally proposed for cost-benefit analysis, but I think it holds generally. In forecasting, it makes sense to me to incorporate the error you have an empirical basis for estimating and making it conditional on no intrusion from other factors for which you don’t have a basis.

> So rather than saying our model is conditional on nothing unusual happening, I’d rather say that our model’s error term allows for unusual events, without making any special adjustment for 2020.

It seems that there is something off with your model and its allowance for surprises because the width of the 95% uncertainty intervals in the “modeled popular vote on each day” chart doesn’t change.

The forecast for September 1st should allow for potential surprises in August. The forecast for October 1st should allow for potential surprises in Agust and September. The forecast for November 1st should allow for potential surprises in August, September and October.

If the uncertainty interval around the forecast for some future date reflects, at least in part, uncertainty about the things that may happen between today and that date one would (naively?) expect the interval to get wider as the horizon gets longer.

The problem with forecasting this year’s election is that while for every presidential election people say that it’s the most important election ever, this year it’s true. 😊

That said, this year really is tough because every other time the GDP dropped by 2.1 trillion in the 2nd quarter in the year of the election the incumbent got hammered.

This year he may get a Mulligan from close to 1/2 the voters.

>>The problem with forecasting this year’s election is that while for every presidential election people say that it’s the most important election ever, this year it’s true.

Strongly doubt it. Most important in the last 50 years maybe (though what about 2016?) but I really doubt more important than Andrew Jackson’s, Lincoln’s, or FDR’s. If 1860 or 1864 had gone the other way the country might not have survived as an unified nation.

I think what’s going on now looks crazier because we are living through it rather than looking back on it from historical distance.

“Strongly doubt it. Most important in the last 50 years maybe (though what about 2016?) but I really doubt more important than Andrew Jackson’s, Lincoln’s, or FDR’s.'”

Coincidentally, I was recently transcribing a journal my mother wrote in (the election year) 1940. Here is something she wrote in October 1940:

“Everyone is upset about elections + the draft. Our kind of people want Wilkie — or what we think he stands for. We are not big business — but plain — honest-to-gosh middle class people with college educations — Most of us are afraid that Roosevelt wants to be a dictator …. The draft will take all of our age people …”

Martha, thanks for sharing that. It’s fascinating!

Hello Andrew,

I have to begin by saying that I do not have an extensive understanding of election modeling. Reading your article, however, it appears to me that these changes are being made for subjective reasons. For example, Elliott not being willing to take a 100:1 bet on whether Biden will win the popular vote. Isn’t this more a reflection of a person’s tolerance for risk?

My worry is that the model is being altered to fit your subjective view of the race and its probabilities. What if the model is revised, and it gives 98% chance for Biden to win the popular vote. Would this be too high? What if it was 95%, or 90%? Where should the line be drawn? This just seems to me like a subjective adjustment to make the model fit your view of the election.

Please let me know if I am wrong about this, and how you plan to avoid bias in adjusting your model.

All the best from Canada!

Ali:

You can’t separate the subjective from the objective here. There is no modeling without choices and no probability without judgment.

+1

“There is no modeling without choices and no probability without judgment.”

A blanket statement in defense of subjectivity in statistics? That’s…surprising. :)

Obviously all research has subjective choices but choosing, say, an exponential curve fit before running a model for some theoretical reason vs. fine-tuning the priors knob afterwards because you don’t like the result are different degrees and categories of subjectivity and it’s appropriate for people to question your “judgement” in the later case.

The premise of building the model is to discover some objective truth, right? Not to reveal your personal judgement.

Jim:

Forget about the election for a moment and consider some other problem, like trying to decide whether to bet at a given odds that the Lakers will win the NBA championship this year. If I were doing this, I’d fit a statistical model. And this model would involve a lot of tuning parameters and a lot of choices. I don’t see how to avoid that. Or, sure, I could avoid it by shutting my eyes and just picking a model without careful thought—but then I wouldn’t want to bet on those numbers.

“consider some other problem”

The problem we want to consider is the one that best challenges the assertion that:

““There is no modeling without choices and no probability without judgment.”

A statistical model about which sports team will win is not a good choice for that. A better challenge would be trying to figure out why your car won’t start. This one better fits Jim’s criterion that “the premise of building the model is to discover some objective truth.”

The best approach is to build an exhaustive, tree-based model of all possible causes, and then test each plausible cause to the best of your ability. Call a friend that knows cars and get them to suggest any plausible cause you did not think of, and test those as well. Go through the repair manual and add in anything still missing, and test those possibilities too.

How does that involve choices and judgement? Now maybe you run into testing problems and you have to make subjective decisions, but subjectivity just is not baked into models like this.

Matt, I’m not sure exactly what you’re trying to model — is the the probability of each failure mode? — but the procedure you just described is loaded with choices and judgment. Which friend(s) do you call, for one thing? That’s a choice based on your judgment of who knows cars best. And it’s not like those friends have some massive database of different cars and their probability of failing in different ways, they will have informed judgment based on past experience and you’ll be trying to make advantage of that. You’re not avoiding judgment, you’re just outsourcing it.

If you want to model pulling colored balls out of an urn and trying to estimate the fraction that are blue then maybe you can do a pretty much judgment-free model, but for real-world examples you’ve gotta make choices.

“Matt, I’m not sure exactly what you’re trying to model — is the the probability of each failure mode? — but the procedure you just described is loaded with choices and judgment. Which friend(s) do you call, for one thing?”

C’mon Phil! The goal is to exhaustively list all possibilities. That is not judgement or choice. Those words have meaning.

So it’s only a model if it involves probabilities? I don’t think the rest of the world agrees with you, there are all sorts of models that only involve binary information. The model guides the testing scheme.

It is a simple, testable causation model. It replaces a random approach, which is what a model is supposed to do. This isn’t that hard.

Matt, this is like breaking RSA encryption… or solving the Traveling Salesman problem… it’s trivial! For RSA just try every factor between 2 and sqrt(N).

Matt said,

“C’mon Phil! The goal is to exhaustively list all possibilities. That is not judgement or choice. Those words have meaning.

So it’s only a model if it involves probabilities? I don’t think the rest of the world agrees with you, there are all sorts of models that only involve binary information. The model guides the testing scheme.

It is a simple, testable causation model. It replaces a random approach, which is what a model is supposed to do. This isn’t that hard.”

There are indeed situations where you can list all possibilities –i.e. situations that can be modeled by discrete models such as you discuss (e.g., tossing a fair die; or tossing a weighted die when you know the weighting). But in the real world, there are many situations where we can’t list all possibilities — situations where uncertainty is part of what the real world tosses at us, and where the uncertainty cannot be described by a discrete model. So in those situations (and there are many of them), we need to use probabilistic models. That’s just what Nature/Reality has tossed at us. We have the choice: deny reality, or use probabilistic models.

Now imagine an M-open world where the space of possibilities cannot be exhaustively delineated like this. Secondly, taking your example, don’t pretend that you’re just going to list all possible causes for a car not starting, and test them in some arbitrary order – that’s just silly. No, you’re going to condition on judgements about the relative plausibility of the different cases.

First:

Choices are made all the time in science but they are verified by testing them against reality. Here you’re using your personal judgement as reality and testing the model against that.

Second:

The current situation in the election is more analogous to the NBA finals, not the NBA season. And a mere bet on Bidden is already obvious without even looking at the polls. The election at this point is like LA Lakers vs Atlanta. So why you would fine-tune a model to simply forecast the outcome of such a lopsided contest just to bet on it is beyond me.

Third:

I think most people think of forecasters as statisticians and scientists creating models that provide objective truth, and they expect your models to provide an objective calculation of exactly how low or how high the odds are of a given outcome given the current information and past elections. If the model yields 99% certainty of a Bidden victory, they want to know that.

yeah wow I spend way to much time doing this.

Jim:

We’re not fine-tuning the model to forecast whether we think Biden will win. We’re looking carefully at the model and realizing it has room for improvement. It’s good to work on a model that lots of people are paying attention to: It gives us more motivation to get things right. I agree with you that whether it’s 99% or 94% doesn’t matter so much, as any of these probabilities are sensitive to model choices. Regarding objectivity: the idea is that we state our assumptions clearly, and our conclusions follow from that. People are free to criticize our assumptions. Regarding objective truth: This is survey research. Surveys are far from ideal. We’re not drawing balls at random from urns. But that doesn’t make them useless. There’s a big space between ideal and useless. To put it another way, not understanding surveys and statistics leads to lots of confusion. For example, search this blog for Michael Barone: that guy was an expert political journalist but it didn’t stop him from getting tangled up in numbers. Sometimes the role of scientists such as myself is not so much to provide objective truth as to make some sense of apparently contradictory data.

Andrew:

Reading over the full post more carefully, it makes a lot of sense to me. Yes there’s some degree of “subjectivity” but from the sounds of it the “subjectivity” is constrained and the model is trained on some data and tested against other data which is the necessary check on reality. So I’m not totally clear on what the “subjective” change is or would be.

I agree it’s smart to review your model and make sure the code is sound and the data is correct if you get a result that seems incorrect – or even if you don’t. But is there some objective reason to reject a 99% probability of a Biden win? It doesn’t seem outlandish given the circumstances. The last six months have been a total meltdown for Trump. Hard core Republicans are bailing on him in droves.

So I guess I’d question the idea of changing the model or parameters because the result is “too” anything unless it’s absolutely outrageous. If you go through the code or data and you find a problem, obviously it should be fixed but just because the numbers seem too high doesn’t to me feel legit. Then I’d agree with Nate in that you’re making arbitrary choices to adjust the output downward into a zone you think is believable and doing so without strong justificaction.

which is why it’s so important to educate people about *what the hell statistical results mean*

Because Bayesian Probabilities about one-off events are *NOT* “objective truths of how high the odds are…” what would it even mean to be an objective truth about how high the odds are that a *single unrepeatable thing* would occur?

They are objective truths about *what a set of model assumptions implies* about the relative reasonableness of different possible outcomes.

Except this isn’t a one off event and it is possible to make systematic model of past elections to generate an objective forecast of this one.

“Except this isn’t a one off event and it is possible to make systematic model of past elections to generate an objective forecast of this one.”

Huh? Past elections were under different circumstance than this one, so how could a systematic model of past elections be able tp generate an objective forecast of this one?

jim, you say “this isn’t a one off event” but, well, it is. This will be the first and only time Donald Trump will face Joe Biden in a presidential election in the midst of a coronavirus epidemic after the U.S. economy has entered a historically large depression. Sure, there have been other presidential elections, but this particular election will only happen once. And it’s not like flipping coins or rolling dice, where, OK, sure, each event is technically unique but the differences are tiny. This election really is different from any election that has come before….as is every election.

“Huh? Past elections were under different circumstance than this one, ”

“This will be the first and only time Donald Trump will face Joe Biden in a presidential election in the midst of a coronavirus epidemic after the U.S. economy has entered a historically large depression.”

This is the first and only time Tropical Storm Isaias has followed it’s exact storm track on the exact date with the exact same range of intensities, yet there is an objective forecast of the outcome.

A “forecast” is an objective assessment of future probability of a particular event, not a one-off personal opinion. And, unlike a tropical storm, where the opinion of nature as to how it wants the storm to end is unknown, for elections we have polls that provide the opinions and views of the agents acting on the outcome, as well as measurements of economic conditions that are known to be a primary factor impacting people’s opinions. These likely cover the necessary ground to canceling the variation between elections caused by different election conditions.

IMO there’s no reason to believe today that polls are any less or more likely to be right than usual. As at any time in the past, unexpected future events could change the polls. There’s nothing new there.

IMO if there’s anything important about this election that probably hasn’t been factored in to models and forecasts it’s the age of the candidates. Building some kind of feature in the model that accounts for current fatality rate of a given age vs the age of the candidates would be a possible route to account for COVID in a model.

> The premise of building the model is to discover some objective truth, right? Not to reveal your personal judgement.

How do you discover facts about the world though?

Bayes is sort of like:

approximately X and if X then Y

If you make this statement, plug in all the things that go into X (which are essentially the prior, the generating process, and the data), and then see Y is something that is clearly not true (such as the model implies that you can jump off a cliff and fly)… then you should go back and change X! Doing otherwise is illogical.

When you say that the model will be altered to better account for uncertainty, does that refer to you altering the priors in your model?

Great discussion.

One aspect of the discourse which has confused me (and seems VITALLY important) is that of differential non-response. A very common kneej erk reaction among the public is that “polls botched 2016 because of closeted Trump voters who wouldn’t admit their plans!”. Folks like Nate Silver tend to push back on that, with some good reason (broadly, there’s so much re-weighting going on with each poll, the narrative just *can’t* be that simple).

However, I don’t feel like this addresses the issue of temporary differential non-response *due to a bad news cycle for their candidate*! I remember when you discussed the Xbox survey of voters back in 2012, you argued this point quite strongly: differential non-response varied week-by-week depending on the positivity of the news cycle, leading to extra variance in the poll results. (Example: https://statmodeling.stat.columbia.edu/2016/08/05/dont-believe-the-bounce/). It seems like 2020 would be a perfect fit for claim–you can’t imagine a more dour parade of news stories, which might depress enthusiasm for pollster response (and yet, these people might still happily vote).

To me, this seems like a really critical point. I don’t buy the narrative that “closeted Trump voters” were some decisive factor in 2016. But the conditions in 2020 are a perfect fit for the type of differential non-response which you have previously described. Nate has said many times he doesn’t buy the claims that Trump voters are systemically underrepresented in current polls, but I only ever see him address the issue of “closeted Trump voter”, *not* the claims you made about the Xbox study.

As you say, the certainty in The Economist model is heavily based on the consistency of the terrible polling for Trump. But if this were influenced by differential non-response due to the almost unprecedently bad newscycle he’s endured over the past few months, that polling difference becomes much more fragile. And I say I’m “confused” because I’ve seen Nate talk constantly about the “closeted Trump voter” hypothesis, but basically never against the “slightly dour Trump voter who is a bit less eager to pick up the phone than he was in 2016”. Any thoughts?

Dylan:

We do have that in a model. We allow for differential nonresponse for surveys that don’t adjust for party ID. We’re using surveys that do adjust for party ID as a calibration or baseline. Trump’s also doing poorly in polls that adjust for party ID. Regarding so-called shy Trump voters, see section 5 of this paper.

I came late to this but just wanted to point out I have a similar problem with forecasting New Zealand’s September election. My latest result at http://freerangestats.info/elections/nz-2020/index.html suggests Jacinda Ardern has a 99.8% chance of winning which seems… high but not actually implausible. I probably *would* need 500 to 1 odds to bet on the Nationals winning at this point, so maybe I should just accept it as-is.

My model is structurally very similar to yours for the US election, although with a lot less polling data, less history to model the fundamentals, and no state complexities to worry about. The modelling is done in R and Stan.

In my case, we have only eight elections under the current voting system to use for establishing a “fundamentals” model as the prior before updating our understanding based on the polls. (And there’s not enough polls to say much.) So my prior is simply centred around Ardern’s Labour Party getting the average swing against governments in those either prior elections. I think I’m *possibly* using too fat a tailed distribution for that prior though, which lets the current polls dominate it. So I’m toying with tightening that and shrinking the forecast more towards historical averages (anything on election night resembling recent polling would be a literally unprecedented landslide). But at what point have become I just another pundit? Perhaps already done, but the benefit of this modelling approach is making the the process transparent.

Peter,

I very much agree with your last line: the benefit of this modeling approach is making the process transparent. Actually I don’t think that’s the only benefit of the modeling approach, but it’s an important one. If you need to place bets on an election outcome this might not matter, but in a lot of contexts it is very helpful to write down an explicit model because doing so forces you, or at least enables you, to confront your uncertainties about what parameters are important, how much you know about each, and how they interact. Once you have some insight into those, that insight can help guide you on what additional information to collect…or, for some problems, can convince you that you can’t do much better with any information you are likely to be able to get, which is at least a good thing to know even though it’s disappointing. I know you know this, I’m just agreeing.

I was just discussing the Drake equation with someone. https://en.wikipedia.org/wiki/Drake_equation A lot of people think this is totally useless because we don’t know the values for most of the important parameters, but I think it’s fine because at least it allows you to confront where your uncertainties lie and to ponder whether or how the parameter distributions can be narrowed down.