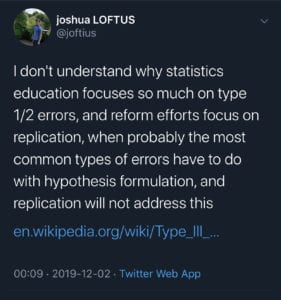

Hi! (This is Dan.) The glorious Josh Loftus from NYU just asked the following question.

Obviously he’s not heard of preregistration.

Seriously though, it’s always good to remember that a lot of ink being spilled over hypothesis testing and its statistical brethren doesn’t mean that if we fix that we’ll fix anything. It all comes to naught if

- the underlying model for reality (be it your Bayesian model or your null hypothesis model and test statistic) is rubbish OR

- the process of interest is poorly measured or the measurement error isn’t appropriately modelled OR

- the data under consideration can’t be generalised to a population of interest.

Control of things like Type 1, Type 2, Type S, and Type M is a bit like combing your hair. It’s great if you’ve got hair to comb, but otherwise it leaves you looking a bit silly.
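For anyone who hasn't met Type S (sign) and Type M (magnitude) errors, here is a rough simulation sketch of what they look like when the "hair" is thin, that is, when a small true effect is measured with a lot of noise. Everything in it is an assumption made for illustration: the function name `type_s_m`, the normal sampling model for the estimate, and the particular effect size and standard error.

```python
import random
import statistics

def type_s_m(true_effect, se, n_sims=20000, z_crit=1.96, seed=0):
    """Simulate estimates ~ Normal(true_effect, se) and summarise the ones
    that reach 'significance' (|estimate| > z_crit * se).

    Returns (power, type_s, type_m). Assumes true_effect is nonzero.
    """
    if true_effect == 0:
        raise ValueError("true_effect must be nonzero for Type S/M to be defined")
    rng = random.Random(seed)
    estimates = [rng.gauss(true_effect, se) for _ in range(n_sims)]
    significant = [e for e in estimates if abs(e) > z_crit * se]
    power = len(significant) / n_sims
    if not significant:
        return power, float("nan"), float("nan")
    # Type S: share of significant estimates that have the wrong sign.
    wrong_sign = sum(1 for e in significant if e * true_effect < 0)
    type_s = wrong_sign / len(significant)
    # Type M: average factor by which significant estimates overstate the effect.
    type_m = statistics.fmean(abs(e) for e in significant) / abs(true_effect)
    return power, type_s, type_m

# Hypothetical noisy study: true effect is a tenth of the standard error.
power, type_s, type_m = type_s_m(true_effect=0.1, se=1.0)
print(f"power={power:.3f}  type_s={type_s:.2f}  type_m={type_m:.1f}")
```

In a setting like this one, the estimates that clear the significance bar are often of the wrong sign and are large multiples of the true effect, which is the sense in which controlling error rates doesn't rescue a badly measured process.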

What do you do in practice when you have problem 3? Some qualitative methods?

Accept that your data can’t answer your question. So either come up with a new way of getting data, use some proper substantive knowledge and qualitative methods, or use your crappy data to make a guess and hope for the best ¯\ _(ツ)_/¯

+1

“Control of things like Type 1, Type 2, Type S, and Type M is a bit like combing your hair. It’s great if you’ve got hair to comb, but otherwise it leaves you looking a bit silly.”

Great analogy!

Dan:

I agree, and this relates to some discussions we’ve had (for example here), regarding the point that procedural ideas such as preregistration or statistical ideas such as Bayesian inference can be great, but in many ways these benefits are indirect, in that they can protect us from various mistakes but they don’t, in themselves, give us good science.

As you say, if your measurements are no good or if what you’re studying is a null effect, it doesn’t matter how much preregistration and statistical analysis you do; at best what they’ll tell you is that you’ve been wasting your time. I strongly push against any implication that if researchers just learn good protocol (preregistration, randomization, etc.) and good statistical methods, then they’ll be able to do good science. The role of the protocol and the methods is, in many cases, just to raise the bar, to create an incentive for good science by making it harder to draw strong conclusions from bad science.

That said, learning that you’ve been wasting your time can be valuable. So I’m glad we’re promoting these methods. Just don’t want to oversell them.

+1

To me learning good statistical analysis is just a prerequisite, like you can’t really do science without knowing a natural language and how to write and read it, without knowing at least some arithmetic and algebra, without knowing at least some logic.

The purpose for me of promoting Bayesian inference using mechanistic thinking in models is that this is basically the minimum needed to get started formulating and testing scientific ideas, and if people have seen how to get started, then they’ll be able to stumble forward and eventually walk, speed walk, maybe even run.

I guess I don’t understand why the value of preregistration is diminished on this blog. It seems to me that it can help mitigate all kinds of issues, not just Type I and Type II errors, which don’t actually need to be mitigated, given that NHST is bad even when all assumptions are met and all data reported.

Preregistration does help with hypothesis formulation. The biggest problem I can think of in the context of inferential statistics (other than outright fraud) is the garden of forking paths. Preregistration forces us to make the “decision space” of our studies public, and prior to obtaining the data. Quoting Andrew’s linked article, it establishes “what analyses would have been done had the data been different.” Preregistration locks down both hypotheses and analyses.
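To make the forking-paths worry concrete, here is a crude simulation sketch. It assumes a true null effect and an analyst who has `n_paths` candidate analyses available and reports a finding if any of them crosses the usual threshold; a preregistered study is modelled as `n_paths=1`. The function name, the independence of the candidate analyses, and the numbers are all assumptions for illustration. Real forking paths are usually subtler, since the analyst may run only one analysis but choose it after seeing the data.

```python
import random

def false_positive_rate(n_paths, n_sims=10000, z_crit=1.96, seed=1):
    """Share of null studies that yield at least one 'significant' result
    when the analyst can choose among n_paths independent analyses."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_sims):
        # Under the null, each candidate analysis gives a z-statistic ~ N(0, 1).
        z_stats = [rng.gauss(0.0, 1.0) for _ in range(n_paths)]
        if any(abs(z) > z_crit for z in z_stats):
            hits += 1
    return hits / n_sims

preregistered = false_positive_rate(n_paths=1)  # analysis fixed in advance
forking = false_positive_rate(n_paths=5)        # five analyses to choose from
print(f"preregistered: {preregistered:.3f}, forking: {forking:.3f}")
```

Under these assumptions the theoretical rate is 1 - 0.95^k for k independent paths, so about 5% with one path and roughly 23% with five.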

It can also be used to address problems like bad measurement: researchers might be required to preregister their measures and reasonable estimates of their reliability and validity, or to disclose the study sample, its internal heterogeneity and its deviations from the populations of interest. These last two things have little to do with forking paths but we’d like to get them in writing before researchers know whether these factors will undermine any results they publish, removing the later incentive to omit them from the final article. Plus, if this is all done publicly and before the study (as opposed to during the journal review process), then feedback on these things may be incorporated into the final registration.

Yes, we want science to be done better, but more fundamental than that is to be transparent, to allow the scientific community to know just how bad the science is. Weak results aren’t bad results unless their weaknesses are hidden. Put another way, our primary problem isn’t bald people going around looking silly, it’s people announcing that they’ve combed their hair without disclosing its length.

I’d much prefer to just require people to publish their data in a useful format; then, if there is an alternative analysis to be done and someone wants to do it, they can.

Preregistration is a hack to make p-values behave ‘better’; it has no inherent value in and of itself.

I do think preregistration has a role to play in the legal system though. By preregistering what data will be collected and how, you can then make regulatory decisions based on the published data with some checks and balances for data fraud, omission, fabrication, selective reporting, etc. For situations like drug approval where there are billions of dollars in incentives to cheat, you want auditability.

Michael:

I do think preregistration has value! Here are some posts:

Preregistration: what’s in it for you?

How is preregistration like random sampling and controlled experimentation