Len Adleman and Mark Schilling sent along this paper in which they found systematic differences in results from different pollsters:

We compared polls produced by major television networks with those produced by Gallup and Rasmussen. We found that, taken as a whole, polls produced by the networks were significantly to the left of those produced by Gallup and Rasmussen.

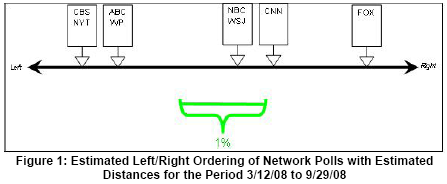

We used the available data to provide a tentative ordering of the major television networks’ polls from right to left. Our order (right to left) was: FOX, CNN, NBC (which partners with the Wall Street Journal), ABC (which partners with the Washington Post), CBS (which partners with the New York Times). These results appear to comport well with the commonly held informal perceptions of the political leanings of these agencies.

I guess this makes sense, given that these different news outlets want to make their readers happy. It still surprises me a bit–I thought all these pollsters were pros. It’s not that polling and poll adjustment are easy or automatic–a lot of subjective decisions still need to be made–but I’d think it would be possible to do this without being influenced by your political predilections or those of your audience.

P.S. Some quick comments on the presentation of the results:

– I’d combine tables 2 and 3, and tables 4 and 5.

– I’d remove the second decimal place in Tables 6, 7, 9. Anything less than 0.1 percentage point is both unmeasurable (realistically speaking) and unimportant.

– I’d recommend doing all comparisons relative to the avg of all polls rather than relative to Gallup or Rasmussen. It’s clearer to have a single comparison.

– The x-axes in Figures 2 and 3 are hard to read.

– Table 10 would be clearer as a time-series graph.
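To make the suggestion about comparing to the average of all polls concrete, here is a minimal sketch. The pollster names and margins are made up for illustration (they are not from the paper), and the function name `house_effects` is just a label I'm using here. Each pollster's offset is its own mean margin minus the mean across all pollsters, reported to one decimal place.

```python
from statistics import mean

# Hypothetical margins in percentage points (one candidate minus the other).
# These numbers are invented for illustration; they are NOT from the paper.
polls = {
    "Pollster A": [4.0, 6.0, 5.0],
    "Pollster B": [2.0, 3.0, 1.0],
    "Pollster C": [7.0, 8.0, 6.0],
}

def house_effects(polls):
    """Return each pollster's mean margin minus the mean of all pollsters' means."""
    pollster_means = {name: mean(vals) for name, vals in polls.items()}
    # Single common baseline: the average over pollsters, not any one pollster.
    overall = mean(pollster_means.values())
    # One decimal place is plenty for quantities like these.
    return {name: round(m - overall, 1) for name, m in pollster_means.items()}

effects = house_effects(polls)
for name, eff in sorted(effects.items(), key=lambda kv: kv[1]):
    print(f"{name}: {eff:+.1f}")
```

Averaging over pollsters (rather than over individual polls) keeps a pollster that releases many polls from dominating the baseline; that is one choice among several reasonable ones, and not necessarily what the authors would do.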

This is very interesting. What makes this finding even more striking is that each pollster is aware of the other polls. So they are aware that they have a systematic bias as they correct and then release their results.

It still surprises me a bit–I thought all these pollsters were pros.

Should this imply, then, that you believe the biases are intentional? I don’t see anything in the paper (I skimmed, admittedly) that would confirm that.

Other places I read (e.g., fivethirtyeight) explain these in terms of differing underlying assumptions about the electorate. This seems reasonable to me, and doesn’t suggest unprofessionalism, just a difference of opinion between pollsters.

Phil,

I don’t know. But if you believe their analysis, then, as they point out, it’s interesting that the biases correlate with the generally-perceived slants of the different news organizations.

With all due deference to Adleman and Schilling, they should leave polling analysis to people who know what they are doing. The fivethirtyeight analysis of pollsters lays out a good overview of the issues involved (including the devilishly difficult issue of “likely voter” models given the massive voter registration increase this year), but you can’t help but laugh at a study which uses the RCP average as a baseline. The claim made in the paper, “We could not discover sufficient information about how Real Clear Politics calculates its average to justify a formal statistical analysis, however, our results are shown in the following table,” should raise alarm bells for anyone who has followed the various polling sites this season. RCP is notorious for using poll selection criteria that raise suspicions of bias.
