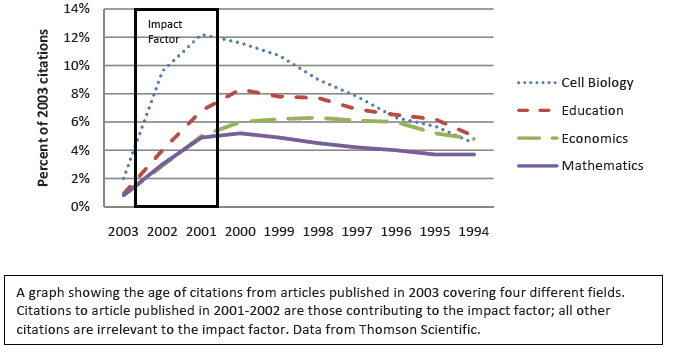

Juli sent me this article by Robert Adler, John Ewing, and Peter Taylor arguing that journal “impact factors” are overrated. I’m sympathetic to this argument because my articles are typically published in low-impact-factor journals (at least in comparison with psychology). I also like the article because it has lots of graphs, no tables. Here’s a graph for ya:

Adler et al. also criticize the so-called h-index and its kin; as they write, “These are often breathtakingly naive attempts to capture a complex citation record with a single number. Indeed, the primary advantage of these new indices over simple histograms of citation counts is that the indices discard almost all the detail of citation records, and this makes it possible to rank any two scientists. . . . Unfortunately, having a single number to rank each scientist is a seductive notion – one that may spread more broadly to a public that often misunderstands the proper use of statistical reasoning in far simpler settings.”
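To make the "discard almost all the detail" point concrete, here is a minimal sketch of the usual h-index definition (the largest h such that h papers have at least h citations each). The two citation records are made up for illustration; they are not from the article.

```python
def h_index(citations):
    """Largest h such that at least h papers have >= h citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank
        else:
            break
    return h


# Two hypothetical citation records with very different shapes.
steady = [5, 5, 5, 5, 5]        # five modestly cited papers
one_hit = [1000, 5, 5, 5, 5]    # one blockbuster plus four modest papers

print(h_index(steady), h_index(one_hit))
```

Both records get the same index, even though a histogram of the counts would show them to be very different.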

I find that the author confuses arguments against citation counts at the department level with arguments against citation counts at the individual level. S/he interweaves the two as if arguments against one are interchangeable with arguments against the other, and they are not.

Our statistical intuition tells us that as the number of people in two departments grows, the usefulness of citation counts as a comparison should increase. Comparing two individuals by citation count does necessitate getting involved in the details. Comparing two universities, however, is a whole different ball game, with groups of different sizes lost somewhere in the middle.

At some point you have to answer the question … "yada yada yada that is all nice and well but there are 30 of them and 30 of you. Why are their citation counts as a whole noticeably higher than yours?" What are the answers and what are the negatives they might reflect? Are they excuses? Are they legitimate? What about if it is 100 people versus 100 people? At some point you should be able to add enough people that the deviance due to situational inequities not reflecting performance is acceptably small to you and be able to focus on the fact that one group is simply better at getting cited than another group.
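A rough simulation of that intuition, under one strong assumption: each person's "situational" deviation is independent, zero-mean noise. The function name and the noise level of 30 citations are invented for illustration.

```python
import random
import statistics


def spread_of_group_mean(n_people, n_trials=2000, noise_sd=30.0, seed=0):
    """Standard deviation of a group's average situational noise.

    Assumption: every person's deviation is an independent draw from a
    zero-mean normal distribution with standard deviation noise_sd.
    """
    rng = random.Random(seed)
    means = []
    for _ in range(n_trials):
        group = [rng.gauss(0.0, noise_sd) for _ in range(n_people)]
        means.append(statistics.fmean(group))
    return statistics.stdev(means)


for n in (1, 30, 100):
    print(n, round(spread_of_group_mean(n), 1))
```

Under that assumption the spread of the group average shrinks roughly like noise_sd divided by the square root of the group size, so 100 versus 100 is a much less noisy comparison than 1 versus 1. The argument only goes through if the inequities really do behave like independent noise.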

I really like Excel 2007's graphs. So clean. There should be more anti-aliasing in R!

Andrew, did you forget to say that you thought they should build a hierarchical model involving journals, departments, papers (which have multiple authors) and individuals? That way we can tease out the effect of having someone as a co-author or moving to a higher- or lower-ranked department.

Will the models assign a huge personal coefficient to someone like Radford Neal, who publishes tech reports that are cited more heavily than most journal papers?

What I don't understand is why anyone cares about high-impact journals. Authors cite papers, not journals or departments. In computer science, the trend is to cite acceptance rates at journals and papers on CVs.

I just got back from the Assoc for Computational Linguistics meeting, where there were four "best papers" (in different "sub areas"). The organizers said it's because the young folks needed them on their CVs for promotion. Bring on the grade inflation!

In the UK, institutional "prestige" ratings are huge factors in funding.

What this focus on extrinsic metrics is missing is the huge variance of paper and conference reviewing. Haven't they ever been on an editorial board, program committee, or grant review panel?

What my department head at Carnegie Mellon, Teddy Seidenfeld, told me when I started out on tenure track back in the day (1988) is that the tenure decision will mostly be based on asking 20 people in the field what they think about me and my research program. I'm now thinking we were the Hampshire College of academic departments, because everyone I talk to in CS these days is worried about "objective metrics" and even the old-timers like me never got the it's-all-subjective lecture.

It sounds like an interesting paper. The authors sound breathtakingly naive when they criticize indices for "discard[ing] almost all the detail of citation records." Uh, that's what indices — not to mention most statistical tools — do. It's like criticizing an umbrella for discarding the rain.

Goedel would have an h-index

Jeremiah writes "At some point you should be able to add enough people that the deviance due to situational inequities not reflecting performance is acceptably small to you and be able to focus on the fact that one group is simply better at getting cited than another group." I don't follow this logic. Take two departments of psychology each with 40 faculty. One has 20 cognitive neuroscientists and a random mix of 20 researchers in other fields. The other has 20 psychometricians and a random mix of 20 researchers in other fields. Which will have the higher average citation count, impact or h-index? No matter what N you pick, the citation statistics mostly tell you about what subdisciplines researchers publish in. Unless all departments and universities are homogeneous (and they are not in my discipline), N is a red herring. If you think this example is unlikely, I can think of departments with precisely this unbalanced profile. In fact, such an unbalanced profile may be a natural consequence of hiring practices that focus on citations and impact factors of recruits: generic job adverts will disproportionately recruit faculty from subdisciplines with high relative citation rates and impact factors.
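Here is a small sketch of the two-department example. Everything numeric is an assumption made up for illustration: the per-subfield baseline citation counts, the noise level, and the simplification that the "random mix" half of each department shares a single baseline. Individual quality is deliberately identical across departments, so any gap comes from subfield mix alone.

```python
import random
import statistics

# Hypothetical average citation counts per subfield (made-up numbers).
FIELD_BASELINE = {"cog_neuro": 60.0, "psychometrics": 20.0, "other": 35.0}


def department_mean(core_field, n_people, rng, noise_sd=30.0):
    """Average citations for a department that is half core_field, half 'other'."""
    half = n_people // 2
    fields = [core_field] * half + ["other"] * (n_people - half)
    counts = [FIELD_BASELINE[f] + rng.gauss(0.0, noise_sd) for f in fields]
    return statistics.fmean(counts)


def mean_gap(n_people, n_trials=500, seed=0):
    """Mean and spread of the gap between the two departments' averages."""
    rng = random.Random(seed)
    gaps = [
        department_mean("cog_neuro", n_people, rng)
        - department_mean("psychometrics", n_people, rng)
        for _ in range(n_trials)
    ]
    return statistics.fmean(gaps), statistics.stdev(gaps)


for n in (40, 400):
    gap, spread = mean_gap(n)
    print(n, round(gap, 1), round(spread, 1))
```

With these made-up baselines the expected gap is (60 - 20) / 2 = 20 citations at any department size. Increasing N only shrinks the noise around that gap; it does nothing to the gap itself.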

"In the UK, institutional "prestige" ratings are huge factors in funding."

I beg to differ: in the UK, the "prestige" of a department is not a huge factor. The main one is our Research Assessment Exercise [RAE] rating. In fact, this rating from 1-6* directly affects our funding.

WRT the first comment – I think that's utter c-word to be honest!

In football (soccer for Americans :) it's a bit like saying "I scored 20 goals last season in the first league and you scored 15, so you're not as good as me!" and then getting the reply "Yes but all mine won matches and three of them were in finals!" – so there needs to be a strong degree of peer review. Just my thoughts

D UK