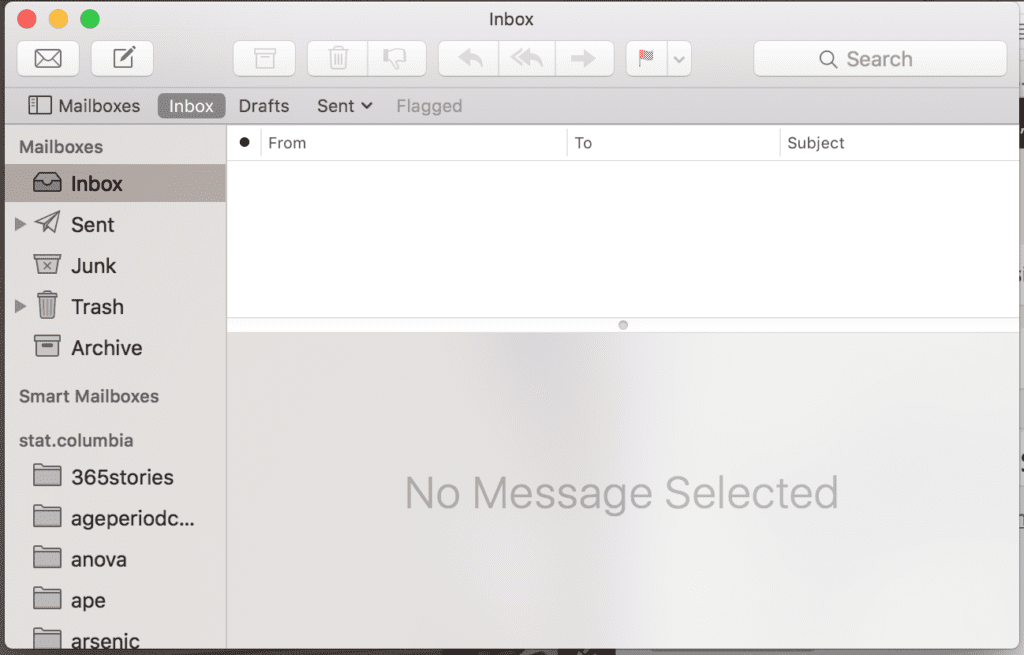

I’ve successfully emptied my inbox:

And one result was to fill up the blog through mid-January.

I think I’ve been doing enough blogging recently, so my plan now is to stop for a while and instead transfer my writing energy into articles and books. We’ll see how it goes.

Just to give you something to look forward to, below is a list of what’s in the queue. I’m sure I’ll be interpolating new posts on this and that. There is an election going on, after all; indeed, I just inserted a politics-related post two days ago. And other things sometimes come up that just can’t wait. Also, my co-bloggers are free to post on Stan or whatever else they want, whenever they want.

But this is what’s on deck so far:

In policing (and elsewhere), regional variation in behavior can be huge, and perhaps can give a clue about how to move forward.

Guy Fieri wants your help! For a TV show on statistical models for real estate

The p-value is a random variable

“What can recent replication failures tell us about the theoretical commitments of psychology?”

Documented forking paths in the Competitive Reaction Time Task

Shameless little bullies claim that published triathlon times don’t replicate

Boostrapping your posterior

You won’t be able to forget this one: Alleged data manipulation in NIH-funded Alzheimer’s study

Are stereotypes statistically accurate?

Will youths who swill Red Bull become adult cocaine addicts?

Science reporters are getting the picture

Modeling correlation of issue attitudes and partisanship within states

Tax Day: The Birthday Dog That Didn’t Bark

The history of characterizing groups of people by their averages

~~Calorie labeling reduces obesity~~ Obesity increased more slowly in California, Seattle, Portland (Oregon), and NYC, compared to some other places in the west coast and northeast that didn’t have calorie labeling

What’s gonna happen in November?

An ethnographic study of the “open evidential culture” of research psychology

Things that sound good but aren’t quite right: Art and research edition

Michael Porter as new pincushion

Kaiser Fung on the ethics of data analysis

One more thing you don’t have to worry about

Evil collaboration between Medtronic and FDA

His varying slopes don’t seem to follow a normal distribution

A day in the life

Letters we never finished reading

Better to just not see the sausage get made

Oooh, it burns me up

Birthdays and heat waves

Publication bias occurs within as well as between projects

Graph too clever by half

Take that, Bruno Frey! Pharma company busts through Arrow’s theorem, sets new record!

A four-way conversation on weighting and regression for causal inference

How paracompact is that?

In Bayesian regression, it’s easy to account for measurement error

Garrison Keillor would be spinning etc

“Brief, decontextualized instances of colaughter”

The new quantitative journalism

It’s not about normality, it’s all about reality

Hokey mas, indeed

You may not be interested in peer review, but peer review is interested in you

Hypothesis Testing is a Bad Idea (my talk at Warwick, England, 2:30pm Thurs 15 Sept)

Genius is not enough: The sad story of Peter Hagelstein, living monument to the sunk-cost fallacy

Bayesian Statistics Then and Now

No guarantee

Let’s play Twister, let’s play Risk

“Evaluating Online Nonprobability Surveys”

Redemption

Pro Publica Surgeon Scorecard Update

Hey, PPNAS . . . this one is the fish that got away

FDA approval of generic drugs: The untold story

Acupuncture paradox update

More p-value confusion: No, a low p-value does not tell you that the probability of the null hypothesis is less than 1/2

Multicollinearity causing risk and uncertainty

Andrew Gelman is not the plagiarism police because there is no such thing as the plagiarism police.

Cracks in the thin blue line

Politics and chance

I refuse to blog about this one

“Find the best algorithm (program) for your dataset.”

NPR’s gonna NPR

Why the garden-of-forking-paths criticism of p-values is not like a famous Borscht Belt comedy bit

Don’t trust Rasmussen polls!

Astroturf “patient advocacy” group pushes to keep drug prices high

It’s not about the snobbery, it’s all about reality: At last, I finally understand hatred of “middlebrow”

The never-back-down syndrome and the fundamental attribution error

Michael Lacour vs John Bargh and Amy Cuddy

It’s ok to criticize

“The Prose Factory: Literary Life in England Since 1918” and “The Windsor Faction”

Note to journalists: If there’s no report you can read, there’s no study

Heimlich

No, I don’t think the Super Bowl is lowering birth weights

Gray graphs look pretty

Should you abandon that low-salt diet?

Transparency, replications, and publication

Is it fair to use Bayesian reasoning to convict someone of a crime?

“Marginally Significant Effects as Evidence for Hypotheses: Changing Attitudes Over Four Decades”

Some people are so easy to contact and some people aren’t.

Should Jonah Lehrer be a junior Gladwell? Does he have any other options?

Advice on setting up audio for your podcast

The Psychological Science stereotype paradox

We have a ways to go in communicating the replication crisis

Authors of AJPS paper find that the signs on their coefficients were reversed. But they don’t care: in their words, “None of our papers actually give a damn about whether it’s plus or minus.” All right, then!

Another failed replication of power pose

“How One Study Produced a Bunch of Untrue Headlines About Tattoos Strengthening Your Immune System”

Ptolemaic inference

How not to analyze noisy data: A case study

The problems are everywhere, once you know to look

“Generic and consistent confidence and credible regions”

Happiness of liberals and conservatives in different countries

Conflicts of interest

“It’s not reproducible if it only runs on your laptop”: Jon Zelner’s tips for a reproducible workflow in R and Stan

Unintentional parody of Psychological Science-style research redeemed by Dan Kahan insight

Rotten all the way through

Some modeling and computational ideas to look into

How to improve science reporting? Dan Vergano sez: It’s not about reality, it’s all about a salary

Kahan: “On the Sources of Ordinary Science Knowledge and Ignorance”

Why I prefer 50% to 95% intervals

How effective (or counterproductive) is universal child care? Part 1

How effective (or counterproductive) is universal child care? Part 2

“Another terrible plot”

Can a census-tract-level regression analysis untangle correlation between lead and crime?

The role of models and empirical work in political science

More on my paper with John Carlin on Type M and Type S errors

Should scientists be allowed to continue to play in the sandbox after they’ve pooped in it?

“Men with large testicles”

Sniffing tears perhaps not as effective as claimed

Thinking more seriously about the design of exploratory studies: A manifesto

From zero to Ted talk in 18 simple steps: Rolf Zwaan explains how to do it!

Individual and aggregate patterns in the Equality of Opportunity research project

Unfinished (so far) draft blog posts

Deep learning, model checking, AI, the no-homunculus principle, and the unitary nature of consciousness

How best to partition data into test and holdout samples?

Abraham Lincoln and confidence intervals

“Breakfast skipping, extreme commutes, and the sex composition at birth”

Discussion on overfitting in cluster analysis

Happiness formulas

OK, sometimes the concept of “false positive” makes sense.

“A bug in fMRI software could invalidate 15 years of brain research”

Interesting epi paper using Stan

How can you evaluate a research paper?

Some U.S. demographic data at zipcode level conveniently in R

So little information to evaluate effects of dietary choices

Frustration with published results that can’t be reproduced, and journals that don’t seem to care

Using Stan in an agent-based model: Simulation suggests that a market could be useful for building public consensus on climate change

Data 1, NPR 0

Dear Major Textbook Publisher

“So such markets were, and perhaps are, subject to bias from deep pocketed people who may be expressing preference more than actual expectation”

Temple Grandin

fMRI clusterf******

How to think about the p-value from a randomized test?

Avoiding selection bias by analyzing all possible forking paths

The social world is (in many ways) continuous but people’s mental models of the world are Boolean

Science journalist recommends going easy on Bigfoot, says you should bash mammograms instead

Applying statistical thinking to the search for extraterrestrial intelligence

An efficiency argument for post-publication review

Bayes is better

What’s powdery and comes out of a metallic-green cardboard can?

Low correlation of predictions and outcomes is no evidence against hot hand

Jail for scientific fraud?

Is the dorsal anterior cingulate cortex “selective for pain”?

Quantifying uncertainty in identification assumptions—this is important!

If I had a long enough blog delay, I could just schedule this one for 1 Jan 2026

Historical critiques of psychology research methods

p=.03, it’s gotta be true!

Objects of the class “George Orwell”

Sorry, but no, you can’t learn causality by looking at the third moment of regression residuals

“The Pitfall of Experimenting on the Web: How Unattended Selective Attrition Leads to Surprising (Yet False) Research Conclusions”

Two unrelated topics in one post: (1) Teaching useful algebra classes, and (2) doing more careful psychological measurements

Transformative treatments

Comment of the year

Migration explaining observed changes in mortality rate in different geographic areas?

Fragility index is too fragile

When you add a predictor the model changes so it makes sense that the coefficients change too.

Nooooooo, just make it stop, please!

“Which curve fitting model should I use?”

We fiddle while Rome burns: p-value edition

The Lure of Luxury

Confirmation bias

Problems with randomized controlled trials (or any bounded statistical analysis) and thinking more seriously about story time

When do stories work, Process tracing, and Connections between qualitative and quantitative research

A small, underpowered treasure trove?

Problems with “incremental validity” or more generally in interpreting more than one regression coefficient at a time

No evidence of incumbency disadvantage?

To know the past, one must first know the future: The relevance of decision-based thinking to statistical analysis

Powerpose update

Absence of evidence is evidence of alcohol?

“Estimating trends in mortality for the bottom quartile, we found little evidence that survival probabilities declined dramatically.”

SETI: Modeling in the “cosmic haystack”

There should really be something here for everyone. I don’t remember half these posts myself, and I look forward to reading them when they come out!

P.S. It’s a good thing I blog for free because nobody could pay me enough for the effort that goes into it.

Wow

Andrew,

I have been reading your blog for years. At least two of my articles are directly attributable to your blog; but for your blog I never would have written them or even thought of writing them. Your blog has definitely affected my approach to teaching, hopefully for the better (given measurement problems, we may never know…). That’s a plus for my research and my teaching, and I’m sure I’m not the only one. (Now, if you could just help me with my service…. Actually, you have! You gave me some help when I was AE at a journal and had a problem.)

I was at a small, off-the-record conference last year, where the attendees mostly engaged in field experiments in development. This is millions of dollars of research money; maybe tens of millions over the years. One of the speakers clearly was heading in the direction of the “garden of forking paths”. I waited for him to say the words. When he said, “garden of forking paths” I looked around the room, and at least a third of the heads were nodding knowingly. At a coffee break, I circulated and asked several persons about your paper. They’d all read it, and they all read your blog at least occasionally. So your blog is having a demonstrable effect on policy activities.

Regards,

Bruce

I just love blog posts over (say) articles because if you say something on a blog it doesn’t easily go uncontested. By the end of the comment thread one has a good view of the flaws in the original argument because others pick it apart, sometimes mercilessly.

In that sense articles are relatively risky: you just take the author at face value. Even if others realize the absolute stupidity of some of the argument, they cannot easily communicate their criticism. Each one of us has to read and analyze the argument from scratch.

In other words, my belief in the soundness (or unsoundness) of something posted on a popular blog is far greater than that in an article.

I wonder if Funding Agencies are / ever will be progressive enough to fund a good blogger’s blogging just because of the impact it has on improving science in general.

If they can fund outreach & science communication why not blogging, eh?

How about some love for the commenters? ;-)

Any advice regarding email management?

PS: I second the comment praising the impact of this blog. It helped me come to Stan earlier than I would otherwise have, which has been great for two research projects. Plus all the material useful for teaching. Thanks!

Don’t ever spend any time filing or deleting emails. Just use a “search folder” to search for your recent emails from all accounts (things less than say 60 days old). Let stuff fall off the queue on its own.

Anything the computer can do, let it do. That includes search, spam filtering, and archiving automatically into dated folders.

Daniel:

The trouble is, I was using my inbox as a to-do list. Inbox Zero is one way of doing things right away or deciding to do them not at all.

Andrew:

I’ve been practicing inbox zero for some years now. What helped me was the book “Getting Things Done” (could have been written in 10 pages, but authors need to make a living) and todo.txt for managing tasks: https://github.com/ginatrapani/todo.txt-cli/wiki

Using inbox for tasks sounds crazy.

Gnus is a pretty decent MUA for people with lots of email to manage.

I was just thinking of suggesting that you write about the ego-depletion replication failure, but looks like your plate is already full!

http://blogs.discovermagazine.com/neuroskeptic/2016/07/31/end-of-ego-depletion/#.V6EIcvkrLIU

“Boostrapping your posterior”

Is this corporal punishment by ghosts, or just a typo?

(Somehow I managed to post this on Thanks eBay! by mistake.)

would that be “incorporeal” punishment?

Could be!

wait, what? you are gonna stop blogging for a while? that’s so not Andrew though!

Cugrad:

A couple days ago I opened up a file on my computer where I dump material that could be suitable for blogs or maybe could appear in some other formats such as op-eds, research articles, books, etc. So now when something is interesting in that way, I have it there in the file, and I can blog it whenever. (Obv no rush on that.)

The trouble was, I’d have an interesting item, I’d open up a new post on the blog, I’d paste in the item and write some amusing prose around it, this would make me think of new things, I’d paste in some links, maybe find a good image to go with everything, think of a clever title, . . . , it could take 10 minutes or a half hour or more. The time wasn’t wasted—I’d have put in the time to express myself more clearly, and I’d have explored new ideas—but it was time that I could otherwise have put into writing a book, for example. With 170 blog posts in the queue, it seemed to make sense to do some of my writing in a different form.

This blog is the best, have been reading it for years now, thanks for the effort!

Can’t wait to find out what these are about: “Men with large testicles” and “Nooooooo, just make it stop, please!”

As a newcomer to the blog, I am grateful for the towering queue that will accompany your break. I also appreciate the reasons for the break.

Reading can be hazardous, anyway. I had to stop reading your article “No Trump!” (or even thinking about it) because it made me laugh too hard. I got stitches yesterday, and the laughing started to pull at them. Something similar happened when I read “Enough with the Replication Police.” So maybe the measured pace of the blog will encourage moderation in the readers, who will spread their moderation outward to the world. That one little change will revolutionize something or other (hint: TED talk!).

Then again, there’s always the temptation to reread….

“Why the garden-of-forking-paths criticism of p-values is not like a famous Borscht Belt comedy bit”.

Can’t wait for this one! I have been reading the garden of forking paths article (http://www.stat.columbia.edu/~gelman/research/unpublished/p_hacking.pdf) and i don’t understand the main point of it. I am not smart enough to follow the formulas, but i tried to understand the other pieces of the paper.

It seems to me to want to distinguish “fishing expeditions” from “the garden of forking paths”, but i have a hard time finding out exactly how they differ.

What does it mean when researchers “compute a single test based on the data” and how does this differ from “reporting J tests and reporting the best result” (p. 2)?

The same thing in slightly different words can be found on page 3:

“And it would take a highly unscrupulous researcher to perform test after test in a search for statistical significance (which could almost certainly be found at the 0.05 or even the 0.01 level, given all the options above and the many more that would be possible in a real study). We are not suggesting that researchers generally do such a search. What we are suggesting is that, given a particular data set, it is not so difficult to look at the data and construct completely reasonable rules for data exclusion, coding, and data analysis that can lead to statistical significance – thus, the researcher needs only perform one test, but that test is conditional on the data;”

I assume that when researchers “look at the data” this implies performing statistical tests to see which ones are significant and then “construct completely reasonable rules for data exclusion, coding, and data-analysis that can lead to statistical significance”. If this is correct, then i don’t understand how this differs from “performing test after test in a search for statistical significance”.

What am i not understanding?

Anon:

You could consider the garden of forking paths to be an informal version of p-hacking. What happens sometimes is that researchers look at their data and make decisions about data coding, data exclusion, and model specification without formally running any tests. Rather, they make what seem to be reasonable decisions, but these decisions are contingent on their data: had the data been different, they could well have chosen different rules for data exclusion, coding, and analysis. They’re not performing test after test, but their analyses have the same problem.

This all comes up because sometimes I’ve heard people declare that they were not p-hacking because they only performed this one analysis on their data. But what’s relevant for interpreting these p-values is not just what analyses they happened to do, what’s also relevant is the analyses they would’ve done, had the data been different. Asking such questions can make people uncomfortable, but this sort of counterfactual is central to the definition of the p-value.

I like to give the following example:

A group of researchers plans to compare three dosages of a drug in a clinical trial. There’s no intent to compare effects broken down by sex, but the sex of the subjects is recorded as part of routine procedure. The pre-planned comparisons show no statistically significant difference between the three dosages when the data are not broken down by sex. However, since the sex of the patients is known, the researchers decide to look at a chart of the outcomes broken down by combination of sex and dosage. They notice that the results for women in the high-dosage group look much better than the results for the men in the low-dosage group, and decide to perform a hypothesis test to check that out. But in looking at the chart, they have informally compared (6×5)/2 = 15 pairs of dosage×sex combinations.
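A rough way to see why this matters is to simulate it. The sketch below is not from the comment above. It assumes six groups (3 dosages × 2 sexes) of 20 patients each with no true differences at all, normally distributed outcomes with known standard deviation 1, and a researcher who eyeballs the chart, picks the most different-looking pair, and runs a single two-sided z-test on it. The group size, the z-test, and the function names are all illustrative assumptions.

```python
import math
import random
import statistics


def two_sided_p(mean_a, mean_b, n, sigma=1.0):
    """Two-sided z-test p-value for a difference in means (known sigma, equal n)."""
    z = (mean_a - mean_b) / (sigma * math.sqrt(2.0 / n))
    return math.erfc(abs(z) / math.sqrt(2.0))


def simulate(n_sims=2000, n_per_group=20, n_groups=6, alpha=0.05, seed=1):
    """Return (number of possible pairs, share of null data sets declared significant)."""
    rng = random.Random(seed)
    n_pairs = math.comb(n_groups, 2)  # (6 x 5) / 2 = 15 for six groups
    hits = 0
    for _ in range(n_sims):
        # Every group is drawn from the same distribution: there is no real effect.
        means = [
            statistics.fmean(rng.gauss(0.0, 1.0) for _ in range(n_per_group))
            for _ in range(n_groups)
        ]
        # "Looking at the chart": choose the pair that looks most different,
        # then run exactly one test, on that pair only.
        p = two_sided_p(max(means), min(means), n_per_group)
        if p < alpha:
            hits += 1
    return n_pairs, hits / n_sims


if __name__ == "__main__":
    n_pairs, rate = simulate()
    print(f"pairs implicitly compared: {n_pairs}")
    print(f"share of null data sets with p < 0.05: {rate:.2f}")
```

Under these assumptions, the share of null data sets in which the one chosen test comes out "significant" should land well above the nominal 5%, even though only one test was formally run. The choice of which test to run was contingent on the data, which is the forking-paths problem in miniature.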

Thank you Andrew for this blog. I think you are a wonderful example of a public intellectual, caring about spreading important ideas. Your impact has been far greater with this blog than with traditional scientific publications. You bring people to the scientific publications by bringing us behind the scenes. You definitely enlightened me with your blog.