I met Seth back in the early 1990s when we were both professors at the University of California. He sometimes came to the statistics department seminar and we got to talking about various things; in particular we shared an interest in statistical graphics. Much of my work in this direction eventually went toward the use of graphical displays to understand fitted models. Seth went in another direction and got interested in the role of exploratory data analysis in science, the idea that we could use graphs not just to test or even understand a model but also as the source of new hypotheses. We continued to discuss these issues over the years; see here, for example.

At some point when we were at Berkeley the administration was encouraging the faculty to teach freshman seminars, and I had the idea of teaching a course on left-handedness. I’d just read the book by Stanley Coren and thought it would be fun to go through it with a class, chapter by chapter. But my knowledge of psychology was minimal so I contacted the one person I knew in the psychology department and asked him if he had any suggestions of someone who’d like to teach the course with me. Seth responded that he’d be interested in doing it himself, and we did it.

After we taught the class together we got together regularly for lunch and Seth told me about his efforts in self-experimentation involving sleeping hours and mood. One of his ideas was to look at large faces in the morning (he used tapes of late-night comedy monologues). This all seemed a bit sad to me, as Seth lived alone and thus did not have anyone to talk with in the morning directly. On the other hand, even those of us who live in large families do not always spend the time to sit down and talk over the breakfast table.

Seth’s self-experimentation went slowly, with lots of dead-ends and restarts, which makes sense given the difficulty of his projects. I was always impressed by Seth’s dedication in this, putting in the effort day after day for years. Or maybe it did not represent a huge amount of labor for him; perhaps it was something like a diary or blog, which is pleasurable to create even if it seems from the outside to be a lot of work. In any case, from my perspective, the sustained focus was impressive.

Seth’s academic career was unusual. He shot through college and graduate school to a tenure-track job at a top university, then continued to do publication-quality research for several years until receiving tenure. At that point he was not a superstar but I think he was still considered a respected member of the mainstream academic community. But during the years that followed, Seth lost interest in that thread of research (you can see this by looking at the dates of most of his highly-cited papers). He told me once that his shift was motivated by teaching introductory undergraduate psychology: the students, he said, were interested in things that would affect their lives, and, compared to that, the kind of research that leads to a productive academic career did not seem so appealing.

I suppose that Seth could’ve tried to do research in clinical psychology (Berkeley’s department actually has a strong clinical program) but instead he moved in a different direction and tried different things to improve his sleep and then, later, his skin, his mood, and his diet. In this work, Seth applied what he later called his “insider/outsider perspective”: he was an insider in that he applied what he’d learned from years of research on animal behavior, an outsider in that he was not working within the existing paradigm of research in physiology and nutrition.

At the same time he was working on a book project, which I believe started as a new introductory psychology course focused on science and self-improvement but ultimately morphed into a trade book on ways in which our adaptations to Stone Age life were not serving us well in the modern era. I liked the book but I don’t think he found a publisher. In the years since, this general concept has been widely advanced and many books have been published on the topic.

When Seth came up with the connection between morning faces and depression, this seemed potentially hugely important. Were the faces really doing anything? I have no idea. On one hand, Seth was measuring his own happiness and doing his own treatments on his own hypothesis, so the potential for expectation effects is huge. On the other hand, he said the effect he discovered was a surprise to him and he also reported that the treatment worked with others. Neither he nor, as far as I know, anyone else, has attempted a controlled trial of this idea.

Seth’s next success was losing 40 pounds on his unusual diet, in which you can eat whatever you want as long as each day you drink a cup of unflavored sugar water, at least an hour before or after a meal. To be more precise, it’s not that you can eat whatever you want—obviously, if you live a sedentary lifestyle and you eat a bunch of big macs and an extra-large coke each day, you’ll get fat. The way he theorized that his diet worked (for him, and for the many people who wrote in to him, thanking him for changing their lives) was that the carefully-timed sugar water had the effect of reducing the association between calories and flavor, thus lowering your weight set-point and making you uninterested in eating lots of food. I asked Seth once if he thought I’d lose weight if I were to try his diet in a passive way, drinking the sugar water at the recommended time but not actively trying to reduce my caloric intake. He said he supposed not, that the diet would make it easier to lose weight but you’d probably still have to consciously eat less.

In his self-experimentation, Seth lived the contradiction between the two tenets of evidence-based medicine:

1. Try everything, measure everything, record everything.

2. Make general recommendations based on statistical evidence rather than anecdotes.

Seth’s ideas were extremely evidence-based in that they were based on data that he gathered himself or that people personally sent in to him, and he did use the statistical evidence of his self-measurements, but he did not put in much effort to reduce, control, or adjust for biases in his measurements, nor did he systematically gather data on multiple people.

I described Seth’s diet to one of my psychologist colleagues at Columbia and asked what he thought of it. My colleague said he thought it was ridiculous. And, as with the depression treatment, Seth never had an interest in running a controlled trial, even for the purpose of convincing the skeptics. Seth and I ended up discussing this and related issues in an article for Chance, the same statistics magazine where in 2001 Seth had published his first article on self-experimentation.

Seth’s breakout success happened gradually, starting with a 2005 article on self-experimentation in Behavioral and Brain Sciences, a journal that publishes long articles followed by short discussions from many experts. Some of his findings from the ten of his experiments discussed in the article:

Seeing faces in the morning on television decreased mood in the evening and improved mood the next day . . . Standing 8 hours per day reduced early awakening and made sleep more restorative . . . Drinking unflavored fructose water caused a large weight loss that has lasted more than 1 year . . .

As Seth described it, self-experimentation generates new hypotheses and is also an inexpensive way to test and modify them. One of the commenters, Sigrid Glenn, pointed out that this is particularly true with long-term series of measurements that it might be difficult to do on experimental volunteers.

About half of the published commenters loved Seth’s paper and about half hated it. The article does not seem to have had a huge effect within research psychology (Google Scholar gives it 30 cites) but two of its contributions—the idea of systematic self-experimentation and the weight-loss method—have spread throughout the popular culture in various ways. Seth’s work was featured in a series of increasingly prominent blogs, which led to a newspaper article by the authors of Freakonomics and ultimately a successful diet book (not enough to make Seth rich, I think, but Seth had simple tastes and no desire to be rich, as far as I know). Meanwhile, Seth started a blog of his own which led to a message board for his diet that he told me had thousands of participants.

On his blog and elsewhere Seth reported success with various self-experiments, most recently a claim of improved brain function after eating half a stick of butter a day. I’m skeptical, partly because of his increasing rate of claimed successes. It took Seth close to 10 years of sustained experimentation to fix his sleep problems, but in recent years it seemed that all sorts of different things he tried were effective. His apparent success rate was implausibly high. What was going on? One problem is that sleep hours and weight can be measured fairly objectively, whereas if you measure brain function by giving yourself little quizzes, it doesn’t seem hard at all for a bit of unconscious bias to drive all your results. I also wonder if Seth’s blog audience was a problem: if you have people cheering on your every move, it can be that much easier to fool yourself.

Seth had a lot of problems with academia and a lot of legitimate gripes. He was concerned about fraud (and the way that established researchers often would rather cover up an ethical violation than expose it) and also about run-of-the-mill shabby research, the sort of random and unimportant non-findings that fill up our scientific journals. But Seth’s disconnect from the academic research world was unfortunate, for two reasons. First, others were not getting the advantages of his perspective; second, he was not engaging with the sort of serious criticism that can make one’s work better. I certainly don’t think an academic connection is necessary for someone to engage with criticism, but it provides many opportunities, for those of us lucky enough to be so situated.

Seth was a complete outsider in the psychology department at Berkeley for decades and eventually took early retirement while barely in his fifties. I was surprised: as I told Seth, being a university professor is such a cushy job, they pay you and you don’t have to do anything. He responded dryly that retirement works the same way. As things turned out, though, he did take a new job, teaching at a university in China. That worked out for him, partly because he enjoyed undergraduate teaching—as he put it, the key is to work with the students’ unique strengths, rather than to spend your time trying to mold the students into miniature versions of yourself.

In the late 1990s, my friend Rajeev and I would sometimes go to poetry slams. Our most successful effort was once when I read Rajeev’s poem aloud and he read mine. It turns out that it’s easier to be expressive with somebody else’s words. Another thing I learned at that time was that people have a lot of trouble pronouncing “Rajeev.” It’s pronounced just as it’s spelled, but people kept trying to say something like “Raheev” but in a hesitant voice as if it were this super-unusual name.

Anyway, one idea I had, but which I never carried out, was to write down a bunch of facts about Seth and read them off, one at a time, completely deadpan. The gimmick would be that I’d come up on the stage, pull out a deck of index cards and ask a volunteer in the front row to shuffle them. I’d then read them off in whatever order they came up. The idea was that Seth was such an unusual person that his facts would be interesting however they came out.

In order to preserve some anonymity, my plan was to refer to Seth as “Josh.” (I think of “Josh” as an equivalent name to Seth, just as “Samir” is an alternative-world name for Rajeev.) I’m not sure what happened to my list of Josh sentences (I never actually put them into an act), but here are a few:

Josh rents rats.

Josh stares at Jay Leno in the morning.

Josh works on a treadmill.

Josh lives in the basement.

There are a bunch more that I just can’t remember. Seth used to stay with me when he’d visit NY (I moved to Columbia because my Berkeley colleagues didn’t want me around; Seth and I joked that we should trade jobs because he liked NY but Columbia would never hire him), but it’s been several years since we’ve hung out and it’s hard for me to remember some of the unusual things he’d do. OK, I do remember one thing: he would often strike up conversations with perfect strangers, for example asking someone at the next table at a restaurant what they were eating or asking someone on the subway what they were reading. Most of the time this didn’t bother me—actually, I found it interesting, as I could get some information without suffering the difficulty of talking with a stranger. One time, though, he blocked me: I don’t think he realized what was going on at the time, but I was chatting up someone at some event—I can’t recall any of the details here (it was something like 15 years ago) but I do remember that he joined in the conversation and started yakking his head off until she drifted away with no chance that I could see for recovery on my part. Afterward, I berated Seth: What were you thinking? You ruined my chances with her, etc. . . . but he’d just been oblivious, just starting one more conversation with a stranger. Seth was always interested in what people had to say. His conversational style was to ask question after question after question after question. This didn’t really show up in his online persona. It’s interesting how our patterns of writing can differ from how we speak, and how our interactions from a distance can differ from our face-to-face contacts.

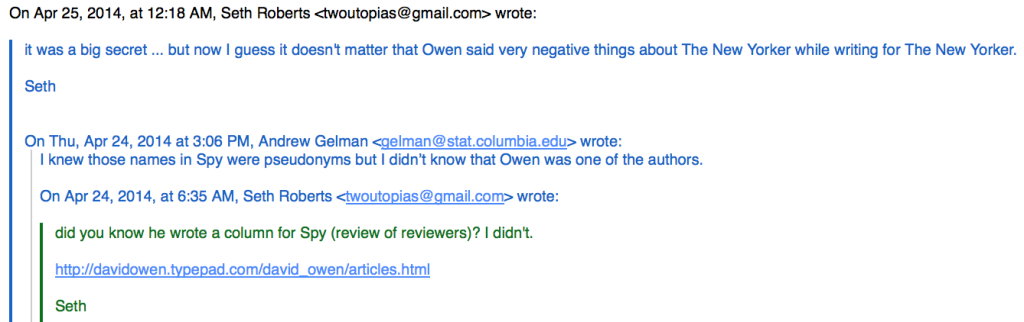

One of Seth’s and my shared interests was Spy magazine, that classic artifact of the late 1980s. When I found out that Seth had actually written for Spy, I was so impressed!

Here was our last contact, which won’t be of interest to anyone except me because it happened to be the last time I heard from him:

My friend is gone. I miss him.